How Quantum Information Theorists Revealed the Relativity Principle at the Foundation of Quantum Mechanics

This Insight is a condensed version of No Preferred Reference Frame at the Foundation of Quantum Mechanics, and reference numbers here correspond to that paper. It is also an expanded version of Quantum information theorists produce new ‘understanding’ of quantum mechanics.

Feynman famously said, “I think I can safely say that nobody understands quantum mechanics” [1]. Despite the fact that quantum mechanics “has survived all tests” and “we all know how to use it and apply it to problems,” Gell-Mann agreed with Feynman saying, “we have learned to live with the fact that nobody can understand it” [2]. As a result, there are many programs designed to interpret quantum mechanics (QM), i.e., reveal what QM is telling us about Nature. Per Koberinski & Mueller, we do not have “a constructive account of ontological structure” necessary to constitute a true interpretation of QM … at least not one that is accepted beyond its own advocates [23]. Fuchs notes, “Where present-day quantum-foundation studies have stagnated in the stream of history is not so unlike where the physics of length contraction and time dilation stood before Einstein’s 1905 paper on special relativity” [5]. Concerning that situation, Moylan writes [45]:

The point is that at the end of the nineteenth century, physics was in a terrible state of confusion. Maxwell’s equations were not preserved under the Galilean transformations and most of the Maxwellian physicists of the time were ready to abandon the relativity of motion principle. They adopted a distinguished frame of reference which was the rest frame of the “luminiferous aether,” the medium in which electromagnetic waves propagate and in which Maxwell’s equations and the Lorentz force law have their usual forms. In effect they were ready to uproot Copernicus and reinstate a new form of geocentricism.

Norton notes that even “Einstein was willing to sacrifice the greatest success of 19th-century physics, Maxwell’s theory, seeking to replace it by one conforming to an emission theory of light, as the classical, Galilean kinematics demanded” before realizing that such an emission theory would not work [43]. About that, Einstein wrote [52]:

By and by I despaired of the possibility of discovering the true laws by means of constructive efforts based on known facts. The longer and the more despairingly I tried, the more I came to the conviction that only the discovery of a universal formal principle could lead us to assured results.

Today, 116 years after Einstein’s 1905 paper, we still have no “interpretation” of special relativity (SR), i.e., per Mainwood, “there is no mention in relativity of exactly how clocks slow, or why meter sticks shrink” (no “constructive efforts”). Yet, physicists are not holding conference after conference devoted to understanding SR, as is done for QM [5]. Thus, quantum information theorists have taken Einstein’s lead and sought a “principle” account of QM like that of SR. Concerning the difference between “constructive” and “principle” theories, Einstein writes [50]:

We can distinguish various kinds of theories in physics. Most of them are constructive. They attempt to build up a picture of the more complex phenomena out of the materials of a relatively simple formal scheme from which they start out. [The kinetic theory of gases is an example.] … Along with this most important class of theories there exists a second, which I will call “principle-theories.” These employ the analytic, not the synthetic, method. The elements which form their basis and starting point are not hypothetically constructed but empirically discovered ones, general characteristics of natural processes, principles that give rise to mathematically formulated criteria which the separate processes or the theoretical representations of them have to satisfy. [Thermodynamics is an example.] … The advantages of the constructive theory are completeness, adaptability, and clearness, those of the principle theory are logical perfection and security of the foundations. The theory of relativity belongs to the latter class.

As it turns out, quantum information theorists have discovered a principle account of QM that is every bit the equal of SR. In the first of these “axiomatic reconstructions of QM” based on information-theoretic principles, Hardy shows how one obtains quantum probability theory from classical probability theory by adding the single word “continuous” to one of his five axioms [4]. Koberinski & Mueller write [23]:

We suggest that (continuous) reversibility may be the postulate which comes closest to being a candidate for a glimpse on the genuinely physical kernel of “quantum reality”. Even though Fuchs may want to set a higher threshold for a “glimpse of quantum reality”, this postulate is quite surprising from the point of view of classical physics: when we have a discrete system that can be in a finite number of perfectly distinguishable alternatives, then one would classically expect that reversible evolution must be discrete too. For example, a single bit can only ever be flipped, which is a discrete indivisible operation. Not so in quantum theory: the state ##|0\rangle## of a qubit can be continuously-reversibly “moved over” to the state ##|1\rangle##. For people without knowledge of quantum theory (but of classical information theory), this may appear as surprising or “paradoxical” as Einstein’s light postulate sounds to people without knowledge of relativity.

The principle’s clearest form per Brukner & Zeilinger is, “Information Invariance & Continuity: The total information of one bit is invariant under a continuous change between different complete sets of mutually complementary measurements” [8]. So, how is the information-theoretic principle of Information Invariance & Continuity as compelling as SR’s relativity principle? When applied to Stern-Gerlach (SG) spin measurements, Information Invariance & Continuity essentially extends Einstein’s use of the relativity principle from the boost invariance of measurements of ##c## to include the SO(3) invariance of measurements of ##h## between different reference frames of mutually complementary spin measurements ##J_i = \frac{\hbar}{2}\sigma_i##, where ##\sigma_i## are the Pauli matrices

$$

\sigma_x = \left ( \begin{array}{rr} 0 & \phantom{00}1 \\ 1 & \phantom{0}0 \end{array} \right ), \quad

\sigma_y = \left ( \begin{array}{rr} 0 & \phantom{0}-\textbf{i} \\ \textbf{i} & 0 \end{array} \right ), \quad

\sigma_z =\left ( \begin{array}{rr} 1 & 0 \\ 0 & \phantom{0}-1 \end{array} \right )

$$

That is, the light postulate at the foundation of SR says that observers in different inertial reference frames moving at constant velocity relative to each other all measure the same value ##c## for the speed of light. The principle of Information Invariance & Continuity at the foundation of QM says that observers in different inertial reference frames rotated relative to each other all measure the same value ##h## for Planck’s constant (“Planck postulate”). And, just as the light postulate of SR leads to time dilation and length contraction in a perfectly symmetrical fashion between different reference frames (aka the relativity of simultaneity), the “Planck postulate” of QM leads to “average-only” projection and conservation of spin angular momentum in a perfectly symmetrical fashion between different reference frames (explained below). To see this, let’s start with Hardy’s point about the difference between classical and quantum probability. For that we need to understand the difference between pure and mixed states.

If a photon source produces vertically polarized photons half the time and horizontally polarized photons half the time, then one has a photon beam represented by a mixture of the pure states ##|V\rangle = \left ( \begin{array}{rr} 1 \\ 0\end{array} \right )## and ##|H\rangle = \left ( \begin{array}{rr} 0 \\ 1\end{array} \right )## per the density matrix

$$

\rho = \frac{1}{2}|V\rangle\langle V| + \frac{1}{2}|H\rangle\langle H| = \frac{1}{2}\left ( \begin{array}{rr} 1 & \phantom{00}0 \\ 0 & \phantom{0}1 \end{array} \right )

$$

This is a mixed state. If one sends this beam through a diagonal polarizing filter, the signal intensity (photons per second) will be cut in half because each pure state ##|V\rangle## or ##|H\rangle## will be projected onto the pure state ##|D\rangle = \frac{1}{\sqrt{2}}\left( |V\rangle + |H\rangle \right)##, thereby reducing its intensity by half and polarizing it along the diagonal. Now we have a superposition of pure states, which is a pure state, as opposed to a mixture of pure states. The polarizing filter plus the original mixed state source can be considered a pure state source with the density matrix

$$

\rho = |D\rangle \langle D| = \frac{1}{2}\left ( \begin{array}{rr} 1 & \phantom{00}1 \\ 1 & \phantom{0}1 \end{array} \right )

$$

in the ##|V\rangle##, ##|H\rangle## basis. When a photon beam constructed from the equal superposition of ##|V\rangle## and ##|H\rangle##, i.e., ##\frac{1}{\sqrt{2}}\left( |V\rangle + |H\rangle \right)##, is passed through the diagonal filter, the beam intensity is obviously unaffected. In what follows, our focus will be on pure states.
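
The difference between the mixed and pure beams above can be checked numerically. The following is a minimal sketch using only the Python standard library; the helper names (`outer`, `transmitted_fraction`, `purity`) are illustrative choices of mine, not notation from the paper. It computes the fraction of the beam passed by the diagonal filter as ##\mathrm{Tr}(\rho\,|D\rangle\langle D|)## and the purity ##\mathrm{Tr}(\rho^2)##.

```python
import math

def outer(ket):
    """Return |ket><ket| as a 2x2 nested list."""
    return [[a * complex(b).conjugate() for b in ket] for a in ket]

def matmul(a, b):
    """Multiply two 2x2 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def trace(a):
    return a[0][0] + a[1][1]

# Basis states and the diagonal state in the |V>, |H> basis
V = [1, 0]
H = [0, 1]
D = [1 / math.sqrt(2), 1 / math.sqrt(2)]

# 50/50 mixture of |V> and |H> versus the pure superposition |D>
rho_mixed = [[0.5 * (outer(V)[i][j] + outer(H)[i][j]) for j in range(2)]
             for i in range(2)]
rho_pure = outer(D)
filter_D = outer(D)  # projector representing the diagonal polarizing filter

def transmitted_fraction(rho, projector):
    """Fraction of the beam passed by the filter, Tr(rho * projector)."""
    return trace(matmul(rho, projector)).real

def purity(rho):
    """Tr(rho^2): equal to 1 for pure states, less than 1 for mixed states."""
    return trace(matmul(rho, rho)).real

frac_mixed = transmitted_fraction(rho_mixed, filter_D)  # half the beam passes
frac_pure = transmitted_fraction(rho_pure, filter_D)    # the whole beam passes
```

Under these assumptions the mixed beam loses half its intensity while the superposition beam is unaffected, matching the two cases described in the text.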

In the example above, we worked in a 2-dimensional (2D) Hilbert space spanned by ##|V\rangle## and ##|H\rangle## where a general state ##|\psi \rangle## is given by ##|\psi \rangle = c_1|V\rangle + c_2|H\rangle## with ##c_1## and ##c_2## complex and ##|c_1|^2 + |c_2|^2 = 1##. In general, such 2D states are called qubits and the density matrix is given by ##\rho = |\psi\rangle \langle \psi|##. In quantum information theory, these qubits represent an elementary piece of information for quantum systems; a quantum system is probed and one of two possible outcomes obtains, e.g., yes/no, up/down, pass/no pass, etc. The information structure of all such binary systems from an information-theoretic perspective is identical; differences between fundamental systems are only manifested when talking about specific instantiations of this general information-theoretic structure in spacetime. That’s why I will occasionally qualify results as being “in spacetime.”

A general Hermitian measurement operator in 2D Hilbert space has outcomes given by its (real) eigenvalues and can be written ##(\lambda_1)|1\rangle\langle 1| + (\lambda_2)|2\rangle\langle 2|## where ##|1\rangle## and ##|2\rangle## are the eigenstates for the eigenvalues ##\lambda_1## and ##\lambda_2##, respectively. Any such Hermitian matrix ##M## can be expanded in the Pauli matrices plus the identity matrix ##\textbf{I}##

$$

M = m_0 \textbf{I} + m_x\sigma_x + m_y\sigma_y + m_z\sigma_z

$$

where ##(m_0,m_x,m_y,m_z)## are real. The eigenvalues of ##M## are given by

$$

m_0 \pm \sqrt{m_x^2 + m_y^2 + m_z^2}.

$$
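
As a quick check of the eigenvalue formula, here is a minimal sketch that builds ##M## from its Pauli coefficients and computes the eigenvalues from the trace and determinant of the 2x2 matrix. The function names and the sample coefficients are my own illustrative choices.

```python
import cmath
import math

def hermitian_from_pauli(m0, mx, my, mz):
    """Build M = m0*I + mx*sigma_x + my*sigma_y + mz*sigma_z as a 2x2 matrix."""
    return [[m0 + mz, mx - 1j * my],
            [mx + 1j * my, m0 - mz]]

def eigenvalues_2x2(M):
    """Eigenvalues from the characteristic polynomial of a 2x2 Hermitian matrix."""
    tr = M[0][0] + M[1][1]
    det = M[0][0] * M[1][1] - M[0][1] * M[1][0]
    disc = cmath.sqrt(tr * tr - 4 * det)
    return sorted([((tr - disc) / 2).real, ((tr + disc) / 2).real])

# Sample coefficients (hypothetical values chosen so that |m| = 3)
m0, mx, my, mz = 0.5, 1.0, 2.0, 2.0
numeric = eigenvalues_2x2(hermitian_from_pauli(m0, mx, my, mz))
radius = math.sqrt(mx**2 + my**2 + mz**2)
expected = [m0 - radius, m0 + radius]
```

For these sample values both `numeric` and `expected` come out as two eigenvalues placed symmetrically about `m0`.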

We see that ##m_x, m_y, m_z## give two eigenvalues centered about ##m_0##. All measurement operators with the same eigenvalues are related by SU(2) transformations given by some combination of ##e^{\textbf{i}\Theta\sigma_j}##, where ##j \in \{x,y,z\}## and ##\Theta## is an angle in Hilbert space, e.g., see Exploring Bell States and Conservation of Spin Angular Momentum. Any density matrix can be expanded in the same fashion

$$

\rho = \frac{1}{2}\left(\textbf{I} + \rho_x\sigma_x + \rho_y\sigma_y + \rho_z\sigma_z \right)

$$

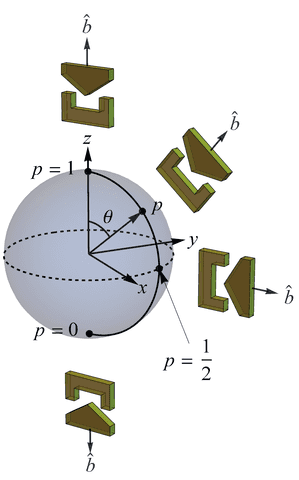

where ##(\rho_x,\rho_y,\rho_z)## are real. The Bloch sphere is defined by ##\rho_x^2 + \rho_y^2 + \rho_z^2 = 1## with pure states residing on the surface of this sphere and mixed states residing inside the sphere, consistent with the fact that mixed states contain less information than pure states (Figure 1). Since I will be referring to spin-##\frac{1}{2}## measurements and states later, I will denote our eigenstates ##|u\rangle## for spin up with eigenvalue ##+1## and ##|d\rangle## for spin down with eigenvalue ##-1##. The transformations relating various pure states on the sphere are continuously reversible (have an inverse) so that in going from a pure state to a pure state one always passes through other pure states. This is distinctly different from the reversibility axiom between pure states for classical probability theory’s fundamental unit of information, the classical bit, as noted above by Koberinski & Mueller [23]. As Hardy pointed out, it is the word “continuously” that makes all the difference [4].
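
The Bloch-sphere picture above can be made concrete by extracting the Bloch vector from a density matrix via ##\rho_i = \mathrm{Tr}(\rho\,\sigma_i)##. The sketch below is illustrative only (the helper names are mine); it shows a pure state on the surface of the sphere and the maximally mixed state at its center.

```python
import math

# Pauli matrices keyed by axis
SIGMA = {
    "x": [[0, 1], [1, 0]],
    "y": [[0, -1j], [1j, 0]],
    "z": [[1, 0], [0, -1]],
}

def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def bloch_vector(rho):
    """Components (rho_x, rho_y, rho_z) with rho_i = Tr(rho * sigma_i)."""
    components = []
    for axis in "xyz":
        product = matmul(rho, SIGMA[axis])
        components.append((product[0][0] + product[1][1]).real)
    return tuple(components)

def bloch_radius(rho):
    return math.sqrt(sum(c * c for c in bloch_vector(rho)))

rho_up = [[1, 0], [0, 0]]                  # pure state |u><u|
rho_plus = [[0.5, 0.5], [0.5, 0.5]]        # pure equal superposition of |u> and |d>
rho_mixed = [[0.5, 0], [0, 0.5]]           # maximally mixed state

vec_up = bloch_vector(rho_up)        # points along +z
vec_plus = bloch_vector(rho_plus)    # points along +x
vec_mixed = bloch_vector(rho_mixed)  # sits at the origin
```

Pure states give `bloch_radius` equal to 1, while the maximally mixed state gives 0, consistent with mixed states residing inside the sphere.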

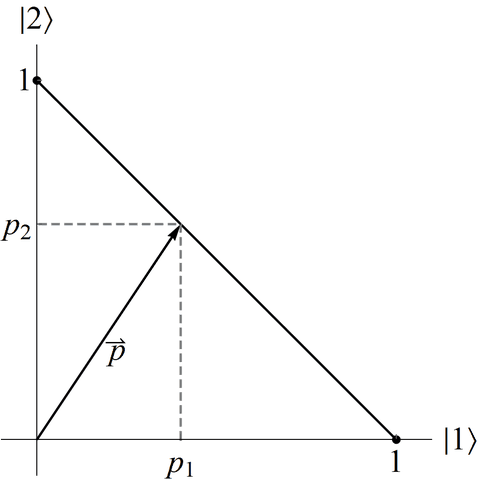

In classical probability theory, the only continuous way to get from one pure discrete state for a classical bit to the other pure state is through mixed states. For example, suppose we place a single ball in one of two boxes labeled 1 and 2 with probabilities ##p_1## and ##p_2##, respectively. The probability state is given by the vector ##\vec{p} = \left ( \begin{array}{c} p_1 \\ p_2\end{array} \right )##. When normalized we have ##\vec{p} = \left ( \begin{array}{c} p_1 \\ 1 - p_1\end{array} \right )## which can be represented by a line segment in the plane connecting ##\left ( \begin{array}{c} 1 \\ 0\end{array} \right )## and ##\left ( \begin{array}{c} 0 \\ 1\end{array} \right )## (Figure 2). We see here that reversibility of pure states is discrete, i.e., accomplished via permutation. As pointed out by Hardy, the reason for this is trivial: a measurement constitutes a projection onto a pure state, which in this case means opening one of the two boxes. This is the fundamental difference between classical probability theory and quantum probability theory per their fundamental units of information.
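
The contrast between the classical bit and the qubit can be illustrated with a small sketch. The parametrizations below are my own illustrative choices: the classical path is the line segment between the two pure states, and the qubit path is a rotation of ##|0\rangle## into ##|1\rangle## with real amplitudes.

```python
import math

def classical_state(t):
    """Probability vector (p_1, p_2) on the segment joining (1, 0) and (0, 1)."""
    return (1.0 - t, t)

def classical_is_pure(p, tol=1e-12):
    """A classical bit state is pure only at the endpoints of the segment."""
    return max(p) > 1.0 - tol

def qubit_state(theta):
    """Real qubit state cos(theta/2)|0> + sin(theta/2)|1> (illustrative path)."""
    return (math.cos(theta / 2), math.sin(theta / 2))

def qubit_purity(psi):
    """Tr(rho^2) for rho = |psi><psi|, computed from the explicit 2x2 matrix."""
    rho = [[a * b for b in psi] for a in psi]  # real amplitudes, no conjugation needed
    return sum(rho[i][k] * rho[k][i] for i in range(2) for k in range(2))

steps = [k / 10 for k in range(11)]

# Classical path: pure only at t = 0 and t = 1, mixed everywhere in between
classical_pure_flags = [classical_is_pure(classical_state(t)) for t in steps]

# Qubit path from |0> (theta = 0) to |1> (theta = pi): pure at every step
qubit_purities = [qubit_purity(qubit_state(math.pi * t)) for t in steps]
```

Every intermediate point on the classical path is a mixed state, whereas every point on the qubit path has purity 1, which is the "continuous reversibility" that distinguishes the two.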

The structure of the qubit is important for two reasons. First, among all generalized bits, the state space of the qubit uniquely maps directly to 3-dimensional real space per ##[\sigma_x, \sigma_y] = 2\textbf{i}\sigma_z##, cyclic. That’s what is meant by “the reference frame of mutually complementary measurements” (Figure 1). Dakic & Brukner call this the “closeness requirement: the dynamics of a single elementary system can be generated by the invariant interaction between the system and a ‘macroscopic transformation device’ that is itself described within the theory in the macroscopic (classical) limit” [41, 42]. This is due to the fact that the measuring devices used to measure quantum systems are themselves made from quantum systems. For example, the classical magnetic field of an SG magnet is used to measure the spin of spin-##\frac{1}{2}## particles and that classical magnetic field “can be seen as a limit of a large coherent state, where a large number of spin-##\frac{1}{2}## particles are all prepared in the same quantum state.” Second, any higher-dimensional Hermitian matrix with the same eigenvalues can be constructed via SU(2) and the qubit, as explained by Hardy [4]. And from there, one uses ##\otimes## to construct multi-particle configurations. In short, all of denumerable-dimensional QM is built on the qubit in composite fashion. Per Mueller [48]:

… at no point is it assumed that there are wave functions, operators or complex numbers – instead, those arise as consequences of the postulates. And we get all other ingredients and predictions of abstract finite-dimensional quantum: unitary transformations, uncertainty relations, the Schrodinger equation (but not the choice of Hamiltonian or Lagrangian), Tsirelson’s bound on Bell correlations, and more.

Thus, by revealing the relativity principle at the level of the qubit, quantum information theorists have shown that it is as foundational for QM as it is for SR.

The relativity principle states, “The laws of physics must be the same in all inertial reference frames,” aka “no preferred reference frame (NPRF).” In SR, we are concerned with the fact that everyone measures the same speed of light ##c##, regardless of their motion relative to the source (light postulate). Here the inertial reference frames are characterized by motion at constant velocity relative to the source and different reference frames are related by Lorentz boosts. Since ##c## is a constant of Nature per Maxwell’s equations, NPRF implies the light postulate [44, 59]. Thus, we see that NPRF + ##c## resides at the foundation of SR. As Norton points out, Maxwell’s discovery of ##c## plus NPRF makes Minkowski space of SR inevitable [43]. Here we will see that Planck’s discovery of ##h## plus NPRF makes the denumerable-dimensional Hilbert space of QM inevitable as well.

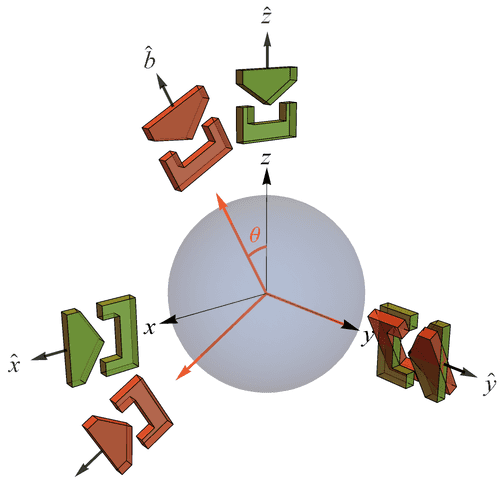

We have seen that different 2D Hilbert space measurement operators with the same outcomes (eigenvalues) are related by SU(2) transformations and that SU(2) transformations in Hilbert space map to SO(3) rotations between different reference frames of mutually complementary measurements in 3-dimensional real space (Information Invariance & Continuity). Since spatial rotations, like Lorentz boosts, relate inertial reference frames, the information-theoretic qubit structure reveals a role for the relativity principle at the foundation of QM. [Note: While spatial rotations plus Lorentz boosts constitute the restricted Lorentz group, spatial rotations plus Galilean boosts constitute the homogeneous Galilean group, so the use of the relativity principle here does not imply Lorentz invariance.]
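
The map from SU(2) to SO(3) mentioned above can be sketched explicitly using the standard formula ##R_{ij} = \frac{1}{2}\mathrm{Tr}\left(\sigma_i U \sigma_j U^\dagger\right)##. In this illustration I assume the convention ##U = e^{-\textbf{i}\theta\sigma_y/2}##, so that ##\theta## is the rotation angle in real space (twice the Hilbert space angle); that convention and the function names are my choices, not taken from the paper.

```python
import math

# Pauli matrices in the order x, y, z
SIGMA = [
    [[0, 1], [1, 0]],
    [[0, -1j], [1j, 0]],
    [[1, 0], [0, -1]],
]

def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def dagger(a):
    """Conjugate transpose of a 2x2 matrix."""
    return [[a[j][i].conjugate() for j in range(2)] for i in range(2)]

def su2_about_y(theta):
    """U = exp(-i * theta * sigma_y / 2), which has real entries."""
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    return [[c, -s], [s, c]]

def so3_from_su2(U):
    """Rotation matrix with entries R_ij = (1/2) Tr(sigma_i U sigma_j U^dagger)."""
    Ud = dagger(U)
    R = []
    for i in range(3):
        row = []
        for j in range(3):
            rotated = matmul(matmul(U, SIGMA[j]), Ud)
            product = matmul(SIGMA[i], rotated)
            row.append(0.5 * (product[0][0] + product[1][1]).real)
        R.append(row)
    return R

theta = math.pi / 3
U = su2_about_y(theta)
R = so3_from_su2(U)

# U and -U give the same rotation (SU(2) double-covers SO(3))
U_neg = [[-entry for entry in row] for row in U]
R_neg = so3_from_su2(U_neg)
```

With this convention `R` is the familiar rotation by `theta` about the ##y## axis, and `R_neg` equals `R`.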

Since we have so far only studied the very general structure of the qubit per information-theoretic principles, we don’t as yet have a fundamental constant of nature in play. However, we can already see that the qubit implies a role for the relativity principle (NPRF) in QM. To complete our analogy with SR and its NPRF + ##c##, we need to relate all of this to the fundamental constant of Nature at the foundation of QM, i.e., Planck’s constant ##h##.

Planck introduced ##h## in his explanation of blackbody radiation and we now understand that electromagnetic radiation with frequency ##f## is composed of indivisible quanta (photons) of energy ##hf##. Classical electromagnetism tells us that the energy density at any location in space where there is an electric field ##\vec{E}## is given by ##\frac{1}{2}\epsilon_0 E^2##. If this is the electric field due to monochromatic electromagnetic radiation, then the number of photons per unit volume is simply ##\frac{\epsilon_0 E^2}{2hf}##. Obviously, one can then use this to produce the relative probability distribution of photon detection throughout space. Notice the analogy with the Born rule, i.e., one adds all contributing electric field values at each location in space (superposition) to produce ##\vec{E}## then squares ##\vec{E}## to obtain the probability. In fact, this is sometimes used to justify the Born rule heuristically in physics textbooks [44].
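
To get a feel for the numbers, the photon density formula above can be evaluated directly. This is only an illustrative sketch: the field strength and frequency are hypothetical sample values, and the function name is mine.

```python
EPSILON_0 = 8.8541878128e-12  # vacuum permittivity in F/m
PLANCK_H = 6.62607015e-34     # Planck's constant in J*s

def photon_number_density(e_field, frequency):
    """Photons per cubic meter, epsilon_0 * E^2 / (2 * h * f), per the formula in the text."""
    return EPSILON_0 * e_field**2 / (2 * PLANCK_H * frequency)

# Hypothetical sample values: E = 100 V/m at an optical frequency of 5e14 Hz
density = photon_number_density(100.0, 5.0e14)

# Doubling the field quadruples the density, mirroring the E^2 (Born-rule-like) dependence
density_doubled = photon_number_density(200.0, 5.0e14)
```

For these sample values the density is on the order of ##10^{11}## photons per cubic meter, and it scales with the square of the field.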

One difference between the classical view of a continuous electromagnetic field and the quantum reality of photons is manifested in polarization measurements. According to classical electromagnetism, there is no non-zero lower limit to the energy of polarized electromagnetic radiation that can be transmitted by a polarizing filter. However, given that the radiation is actually composed of indivisible photons, there is a non-zero lower limit to the energy passed by a polarizing filter, i.e., each quantum of energy ##hf## either passes or it doesn’t. Thus, we understand that the classical “expectation” of fractional amounts of quanta can only obtain on average per the quantum reality, so we expect the corresponding quantum theory will be probabilistic. In information-theoretic terms, a system is composed fundamentally of discrete units of finite information (the qubit). Since the qubit contains finite information, it cannot contain enough information to account for the outcomes of every possible measurement done on it. Thus, as argued by Brukner & Zeilinger, a theory of qubits must be probabilistic. Of course, the relationship between classical and quantum mechanics per its expectation values (averages) is another textbook result, e.g., the Ehrenfest theorem.

And, the fact that classical results are obtained from quantum results for ##h \rightarrow 0## is common knowledge. In information-theoretic terms, ##h## represents “a universal limit on how much simultaneous information is accessible to an observer” [17]. For example, ##[X,P] = \textbf{i}\hbar## means there is a trade-off between what one can know simultaneously about the position and momentum of a quantum system. If ##h = 0##, as in classical mechanics, there is no such limit to this simultaneous knowledge. We have ##[J_x,J_y]=\textbf{i}\hbar J_z##, cyclic, so we see that ##h \ne 0##, in this case, corresponds to the existence of a set of mutually complementary spin measurements associated with the reference frame shown in Figure 1.
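
The spin commutation relations quoted above are easy to verify numerically. The sketch below sets ##\hbar = 1## and uses ##J_i = \frac{1}{2}\sigma_i##; the helper names are illustrative.

```python
# Pauli matrices keyed by axis
SIGMA = {
    "x": [[0, 1], [1, 0]],
    "y": [[0, -1j], [1j, 0]],
    "z": [[1, 0], [0, -1]],
}

# Spin operators J_i = sigma_i / 2 in units where hbar = 1
J = {axis: [[entry / 2 for entry in row] for row in mat]
     for axis, mat in SIGMA.items()}

def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def commutator(a, b):
    """[a, b] = ab - ba for 2x2 matrices."""
    ab, ba = matmul(a, b), matmul(b, a)
    return [[ab[i][j] - ba[i][j] for j in range(2)] for i in range(2)]

def is_close(a, b, tol=1e-12):
    return all(abs(a[i][j] - b[i][j]) < tol for i in range(2) for j in range(2))

def times_i(a):
    return [[1j * entry for entry in row] for row in a]

# Check [J_x, J_y] = i J_z and its cyclic permutations
cyclic_ok = all(
    is_close(commutator(J[a], J[b]), times_i(J[c]))
    for a, b, c in [("x", "y", "z"), ("y", "z", "x"), ("z", "x", "y")]
)
```

Here `cyclic_ok` evaluates to `True`, confirming that no two of the three spin measurements commute.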

Given that ##h## is a constant of Nature, NPRF dictates that everyone measure the same value for it (“Planck postulate” in analogy with the light postulate) and as pointed out by Weinberg, the measurement of spin via SG magnets constitutes a measurement of ##h## [60]. Again, the ##\pm 1## eigenvalues of the Pauli matrices correspond to ##\pm \frac{\hbar}{2}## for spin-##\frac{1}{2}## measurement outcomes. Thus, the general result from Information Invariance & Continuity concerning the SO(3) invariance of measurement outcomes for a qubit implies NPRF + ##h## (relativity principle ##\rightarrow## Planck postulate) for QM in total analogy to NPRF + ##c## (relativity principle ##\rightarrow## light postulate) for SR.

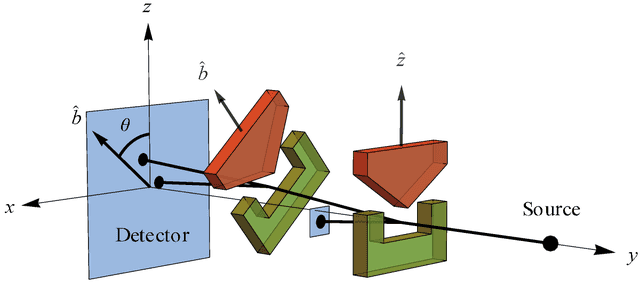

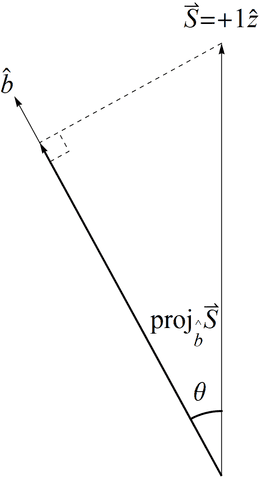

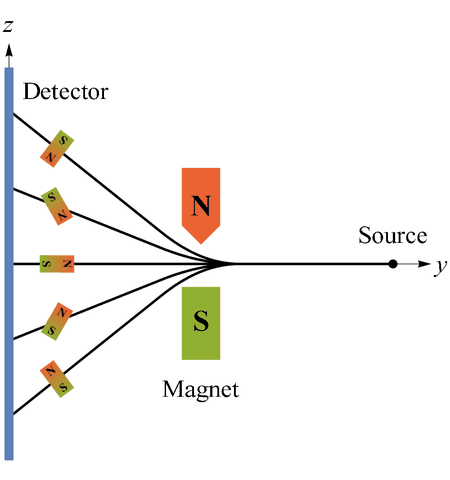

One consequence of the surprising continuously reversible movement of one qubit state to another (to paraphrase Koberinski & Mueller [23]) when referring to an SG spin measurement is “average-only” projection. Suppose we create a preparation state oriented along the positive ##z## axis as in Figure 1, i.e., ##|\psi\rangle = |u\rangle##, so that our “intrinsic” angular momentum is ##\vec{S} = +1\hat{z}## (in units of ##\frac{\hbar}{2} = 1##). Now proceed to make a measurement with the SG magnets oriented at ##\hat{b}## making an angle ##\theta## with respect to ##\hat{z}## (Figure 3). According to the constructive account of classical physics (Figure 6), we expect to measure ##\vec{S}\cdot\hat{b} = \cos{(\theta)}## (Figure 5), but we cannot measure anything other than ##\pm 1## due to NPRF (contra the prediction by classical physics). As a consequence, we can only recover ##\cos{(\theta)}## on average, i.e., NPRF dictates “average-only” projection

\begin{equation}

(+1) P(+1 \mid \theta) + (-1) P(-1 \mid \theta) = \cos (\theta) \label{AvgProjection}

\end{equation}

Of course, this is precisely ##\langle\sigma\rangle## per QM. Eq. (\ref{AvgProjection}) with our normalization condition ##P(+1 \mid \theta) + P(-1 \mid \theta) = 1## then gives

\begin{equation}

P(+1 \mid \theta) = \cos^2 \left(\frac{\theta}{2} \right) \label{UPprobability}

\end{equation}

and

\begin{equation}

P(-1 \mid \theta) = \sin^2 \left(\frac{\theta}{2} \right) \label{DOWNprobability}

\end{equation}

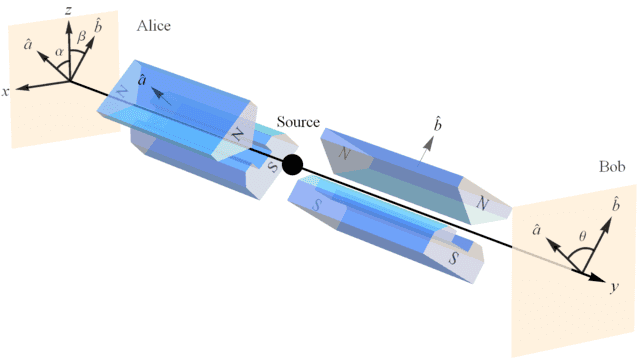

again, precisely in accord with QM. And, if we identify the preparation state ##|\psi\rangle = |u\rangle## at ##\hat{z}## with the reference frame of mutually complementary measurements ##[J_x, J_y, J_z]##, then the SG measurement at ##\hat{b}## constitutes a reference frame of mutually complementary measurements rotated by ##\theta## in real space relative to the preparation state (Figure 4). Thus, “average-only” projection follows from Information Invariance & Continuity when applied to SG measurements in real space.
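
The step from “average-only” projection plus normalization to the half-angle probabilities can be checked with a short sketch. Solving the two linear conditions gives ##P(\pm 1 \mid \theta) = \frac{1}{2}(1 \pm \cos\theta)##, which I compare against the Born rule for ##|u\rangle## measured along ##\hat{b}##. I assume here that ##\hat{b}## lies in the ##xz## plane; the function names are my own.

```python
import math

def probabilities_from_average(theta):
    """Solve (+1)P(+1) + (-1)P(-1) = cos(theta) together with P(+1) + P(-1) = 1."""
    avg = math.cos(theta)
    p_plus = (1 + avg) / 2
    return p_plus, 1 - p_plus

def born_probability_up(theta):
    """|<b+|u>|^2, with b in the x-z plane at angle theta from z (assumed geometry)."""
    # +1 eigenstate of the spin operator along b, written in the z basis
    b_plus = (math.cos(theta / 2), math.sin(theta / 2))
    psi = (1.0, 0.0)  # preparation state |u>
    amplitude = b_plus[0] * psi[0] + b_plus[1] * psi[1]  # real amplitudes
    return amplitude ** 2

angles = [0.0, math.pi / 6, math.pi / 3, math.pi / 2, 2.0, math.pi]
results = [(probabilities_from_average(t), born_probability_up(t)) for t in angles]
```

For each angle the value of `p_plus` from the averaging argument agrees with the Born-rule probability ##\cos^2(\theta/2)##.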

The fact that one obtains ##\pm 1## outcomes at some SG magnet orientation is not mysterious per se; it can be accounted for by the classical constructive model in Figure 6. The constructive account of the ##\pm 1## outcomes would be one of particles with “intrinsic” angular momenta and therefore “intrinsic” magnetic moments [59] oriented in two opposite directions in space, parallel or anti-parallel to the magnetic field. Given this constructive account of the ##\pm 1## outcomes at this particular SG magnet orientation, we would then expect that the varying orientation of the SG magnetic field with respect to the magnetic moments, created as we rotate our SG magnets, would cause the degree of deflection to vary. Indeed, this is precisely the constructive account that led some physicists to expect all possible deflections for the particles as they passed through the SG magnets, having assumed that these particles would be entering the SG magnetic field with random orientations of their “intrinsic” magnetic moments [66] (Figure 6). But according to this constructive account, if the ##\pm 1## outcomes constitute a measurement of ##h## in accord with the rest of quantum physics, then our rotated orientations would not be giving us the value for ##h## required by quantum physics otherwise. Indeed, a rotation of ##90^\circ## would yield absolutely no deflection at all (akin to measuring the speed of a light wave as zero when moving through the aether at speed ##c##). That would mean our original SG magnet orientation would constitute a preferred frame in violation of the relativity principle, NPRF. Essentially, as Michelson and Morley rotated their interferometer the constructive model predicted they would see a change in the interference pattern [67], but instead they saw no change in the interference pattern in accord with NPRF.
Likewise, as Stern and Gerlach rotated their magnets the constructive model predicted they would see a change in the deflection pattern, but instead they saw no change in the deflection pattern in accord with NPRF. And, as I explained in Answering Mermin’s Challenge with the Relativity Principle, “average-only” projection gives “average-only” conservation for the Bell states, which is explained by conservation per NPRF in spacetime (or more generally, conservation per Information Invariance & Continuity). Let me briefly review how that works.

As always, Alice and Bob are making their measurements on each of the two Bell state particles. If Alice makes her spin measurement ##\sigma_1## with her SG magnets oriented in the ##\hat{a}## direction and Bob makes his spin measurement ##\sigma_2## with his SG magnets oriented in the ##\hat{b}## direction, then

\begin{equation}

\begin{aligned}

&\sigma_1 = \hat{a}\cdot\vec{\sigma}=a_x\sigma_x + a_y\sigma_y + a_z\sigma_z \\

&\sigma_2 = \hat{b}\cdot\vec{\sigma}=b_x\sigma_x + b_y\sigma_y + b_z\sigma_z \\ \label{sigmas}

\end{aligned}

\end{equation}

Suppose they are making their measurements on particles in the symmetry plane of a triplet state such that ##\hat{a}\cdot\hat{b} = \cos{(\theta)}## (Figure 7). Partition the data according to Alice’s equivalence relation (her ##\pm 1## outcomes) and look at her ##+1## outcomes. Since we know Bob would have also measured ##+1## if ##\theta## had been zero (i.e., if Bob were in the same reference frame), we have exactly the same classical expectation depicted in Figures 3 & 5 for the single-qubit measurement. Thus, again, NPRF dictates that Bob’s results must average to the expected ##\cos{(\theta)}##, so “average-only” projection leads to “average-only” conservation of spin angular momentum. In other words, while spin angular momentum is conserved exactly when Alice and Bob are making measurements in the same reference frame, it is conserved only on average when they are making measurements in different reference frames (related by SO(3) as shown in Figure 4). So, we see that “average-only” conservation of spin angular momentum is a direct consequence of NPRF + ##h##, exactly as length contraction and time dilation are a direct consequence of NPRF + ##c##. And, we could also partition the data according to Bob’s equivalence relation (his ##\pm 1## results), so that it is Bob who claims Alice must average her results to satisfy “average-only” conservation of spin angular momentum. This is totally analogous to the relativity of simultaneity in SR. There, Alice partitions spacetime per her equivalence relation (her surfaces of simultaneity) and says Bob’s meter sticks are short and his clocks run slow, while Bob can say the same thing about Alice’s meter sticks and clocks per his surfaces of simultaneity.
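
The “average-only” conservation described above can be checked against the standard quantum prediction with a small sketch. I assume the triplet state ##|\phi_+\rangle = \frac{1}{\sqrt{2}}\left(|uu\rangle + |dd\rangle\right)## with both measurement directions in the ##xz## plane (taken here as its symmetry plane); the function names are illustrative, not from the paper.

```python
import math

def kron(a, b):
    """Kronecker product of two 2x2 matrices, giving a 4x4 matrix."""
    return [[a[i][j] * b[k][l] for j in range(2) for l in range(2)]
            for i in range(2) for k in range(2)]

def projector(theta, outcome):
    """Projector onto outcome (+1 or -1) for spin measured along a direction
    at angle theta from z in the x-z plane: (I + outcome * n.sigma) / 2."""
    nx, nz = math.sin(theta), math.cos(theta)
    return [[(1 + outcome * nz) / 2, outcome * nx / 2],
            [outcome * nx / 2, (1 - outcome * nz) / 2]]

# |phi+> = (|uu> + |dd>) / sqrt(2) in the basis |uu>, |ud>, |du>, |dd>
PHI_PLUS = [1 / math.sqrt(2), 0.0, 0.0, 1 / math.sqrt(2)]

def joint_probability(alpha, beta, a_out, b_out):
    """P(a_out, b_out) for Alice at angle alpha and Bob at angle beta."""
    op = kron(projector(alpha, a_out), projector(beta, b_out))
    return sum(PHI_PLUS[r] * op[r][c] * PHI_PLUS[c]
               for r in range(4) for c in range(4))

def bob_average_given_alice(alpha, beta, a_out):
    """Average of Bob's +/-1 outcomes within Alice's a_out partition of the data."""
    p_plus = joint_probability(alpha, beta, a_out, +1)
    p_minus = joint_probability(alpha, beta, a_out, -1)
    return (p_plus - p_minus) / (p_plus + p_minus)

theta = math.pi / 3
avg_when_alice_up = bob_average_given_alice(0.0, theta, +1)    # close to cos(theta)
avg_when_alice_down = bob_average_given_alice(0.0, theta, -1)  # close to -cos(theta)
same_frame_mismatch = joint_probability(0.0, 0.0, +1, -1)      # close to 0
```

When the two angles coincide the outcomes always agree, and for a relative angle ##\theta## Bob’s outcomes in Alice’s ##+1## partition average to ##\cos(\theta)##, as the argument in the text requires.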

Of course, there is nothing unique about SG spin measurements except they can be considered direct measurements of ##h##. The more general instantiation of the relativity principle per Information Invariance & Continuity applies to any qubit. So, for example, if we are again talking about photons passing or not passing through a polarizing filter, we would have “average-only” transmission for photons instead of “average-only” projection for spin-##\frac{1}{2}## particles, both of which give “average-only” conservation of spin angular momentum between the reference frames of different mutually complementary spin measurements (Figure 4). So, most generally, the information-theoretic principle of Information Invariance & Continuity leads to “average-only” _______ (fill in the blank) giving “average-only” conservation of the measured quantity for its Bell states.

Finally, conservation per NPRF rules out the no-signaling, “super quantum” joint probabilities of Popescu & Rohrlich, as I showed in The Unreasonable Effectiveness of the Popescu-Rohrlich Correlations.

Whether or not one believes principle accounts are explanatory is irrelevant here. No one disputes what the postulates of SR are telling us about Nature, even though there is still today no constructive account of time dilation and length contraction, i.e., there is no “interpretation” of SR. Indeed, every introductory physics textbook introduces SR via the relativity principle and light postulate without qualifying that introduction as somehow lacking an “interpretation.” With few exceptions, physicists have come to accept the principles of SR without worrying about a constructive counterpart. By revealing the relativity principle’s role at the foundation of QM, information-theoretic reconstructions of QM have revealed quite clearly what QM is telling us about Nature to no less an extent than SR. Thus, it is no longer true that “nobody understands quantum mechanics” unless it is also true that nobody understands special relativity. Very few physicists would make that claim.

Figure 1. Probability state space for the qubit ##|u\rangle## in the ##z## basis plotted in the real-space reference frame of mutually complementary spin measurements, ##J_x, J_y, J_z##.

Figure 2. Probability state space for the classical bit.

Figure 3. In this set up, the first SG magnets (oriented at ##\hat{z}##) are being used to produce an initial state ##|\psi\rangle = |u\rangle## for measurement by the second SG magnets (oriented at ##\hat{b}##).

Figure 4. State-space for a qubit in two reference frames of mutually complementary SG spin measurements.

Figure 5. The “intrinsic” angular momentum of Bob’s particle ##\vec{S}## projected along his measurement direction ##\hat{b}##. This does not happen with spin angular momentum due to NPRF.

Figure 6. The classical constructive model of the Stern-Gerlach (SG) experiment. If the atoms enter with random orientations of their “intrinsic” magnetic moments (due to their “intrinsic” angular momenta), the SG magnets should produce all possible deflections, not just the two that are observed.

Figure 7. Alice and Bob make SG spin measurements on the Bell triplet state ##|\phi_+\rangle## in its symmetry plane.

PhD in general relativity (1987), researching foundations of physics since 1994. Coauthor of “Beyond the Dynamical Universe” (Oxford UP, 2018).

In the other articles, NPRF was applied only to Bell state entanglement. In this Insight, I used the information-theoretic principle of Information Invariance & Continuity from axiomatic reconstructions of QM to show how NPRF resides at the foundation of QM.

I'm glad you found the article useful. It was written so that a physicist in any specialty could follow the argument. The bit about Lx, Ly, and Lz is just an example to show how higher-level Hilbert spaces can be built from the 2-level Hilbert space (qubit) and SU(2). Those specific choices were made to create a set of mutually complementary measurements, but any and all measurement operators with eigenvalues +1, 0, -1 can be made that way using the qubit and SU(2). Bell notes something similar in his famous 1964 paper when he says the 2-level entangled state he discusses could be a subspace of some higher-level system and all of his analysis still follows. The point the QIT people are making is simply that the qubit structure is the basis of denumerable-dimensional Hilbert space in that sense. My example is really a pedagogical tangent :-)

That's not a false claim, it's a difference of opinion between you and @RUTA on what counts as a "constructive" account.

You are now banned from further posting in this thread.

Likewise, the leader of the advocates for a constructive account of SR (Harvey Brown) did not bother to mention either of the theories you mentioned in his PhilSci archive article many years after those papers were published. If those accounts were considered legitimate candidates for a theory of the aether, then they would not be ignored by the community of such advocates for so many years. On the contrary, that community would rally around and champion those theories. Clearly, that community does not believe them worthy. Therefore my statement, "even though there is still today no constructive account of time dilation and length contraction" accurately reflects the state of affairs regarding constructive accounts of SR.

As Peter says, PF is for mainstream physics. Introductory physics textbooks are the gold standard in that regard, and they all present SR via the principle approach and dismiss aether theories. While it's certainly true that aether theories exist, the physics community has clearly written them off at this point. Even Brown doesn't reference the two papers you cited in his 2018 PhilSci Archive paper, "The dynamical approach to spacetime theories," so I certainly would not do so here. My Insight is about quantum information theory, and the Topical Group of Quantum Information was officially established as a Division of the American Physical Society in 2017, so the information-theoretic reconstruction of QM is now reasonably "mainstream" as well. What to tell intro physics students about the Bell inequality, entanglement, EPR, etc., has not been decided by the physics community, as reflected by the absence of those topics in the introductory physics textbooks. Thus, PF moderators have a bit more tolerance for discussions on the foundations of QM. But the same can't be said regarding aether theories.

Yes, and the way to fix that is to convince the mainstream to stop ignoring them. Complaining about it here does nothing towards accomplishing that objective. It only adds noise to this forum, and we are trying to minimize noise.

It's nothing personal with me. You are advocating a viewpoint that, as you admit, is not mainstream, and PF is not the place to change that. That is what I, as a moderator, am telling you.

It's not just my opinion, it's the general opinion of the moderators. You do not have to agree with the moderators' opinion; but you do need to recognize that it is the moderators' opinion, and that the only thing that is going to change our opinion is if the mainstream becomes convinced regarding the viewpoint you are advocating. Which, as noted above, is not going to happen here at PF; that's not what PF is for.

All of this is off topic here. If you post along these lines again, you will receive a warning.

I did cite Brown & Timpson in this paper and we have cited others who advocate a dynamical account of SR in other papers:

@book{brownbook,
  author = {Harvey Brown},
  title = {Physical Relativity: Spacetime Structure from a Dynamical Perspective},
  publisher = {Oxford University Press},
  address = {Oxford, UK},
  year = {2005}
}

@incollection{brownpooley2006,
  title ="Minkowski Space-Time: A Glorious Non-Entity",
  author ="H. Brown and O. Pooley",
  booktitle ="The Ontology of Spacetime",
  editor ="D. Dieks",
  year ="2006",
  pages ="67",
  publisher ="Elsevier",
  address ="Amsterdam"
}

@inbook{brownTimp2006,
  publisher={Springer},
  location={Berlin},
  title={Why special relativity should not be a template for a fundamental reformulation of quantum mechanics},
  booktitle={Physical Theory and Its Interpretation: Essays in Honor of Jeffrey Bub},
  author={H. Brown and C. Timpson},
  editor={W. Demopoulos and I. Pitowsky},
  year={2006},
  pages={29-41},
  note={\url{https://arxiv.org/abs/quant-ph/0601182}}
}

Those authors are cited and acknowledged in foundations of physics. I just haven't seen anything cited or discussed about actual theories of the aether anywhere. Once such a theory reaches that level of recognition in the physics community, I will certainly reference it.

Not only that, but the Schmelzer reference that @Sunil gave has already been discussed years ago on PF, as he very well knows since it has been pointed out to him in other threads.

Has been more than sufficiently discussed in previous PF threads. Rehashing it serves no useful purpose at this point.

Aether theory is neither taught nor mentioned in the physics textbooks. Even if it were true, it would not change the postulates of SR or the result shown in this Insight.

The reason that the harmonic oscillator is ubiquitous in quantum physics (QM and QFT) has to do with another principle altogether, i.e., the boundary of a boundary principle (BBP). That’s easy to see in the lattice gauge theory approach to the low-energy approximation of the Klein-Gordon equation. The free-particle Schrödinger equation with its harmonic oscillator form is the result. You can see that and how it relates to BBP in Section 3 of this paper, for example: https://ijqf.org/wp-content/uploads/2015/06/IJQF2015v1n3p2.pdf. But that has nothing to do with the topic of this Insight.

They acknowledge that their axiomatic reconstructions are for finite or countably-infinite Hilbert space. That's why I stated specifically, "In short, all of denumerable-dimensional QM is built on the qubit in composite fashion." Doesn't the spectrum of the harmonic oscillator reside in denumerable-dimensional Hilbert space?
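For concreteness, using the standard textbook result (not something derived in this Insight): the oscillator's energy eigenstates ##|n\rangle## satisfy ##H|n\rangle = \hbar\omega\left(n + \tfrac{1}{2}\right)|n\rangle## with ##n = 0, 1, 2, \ldots##, and any state can be written as ##|\psi\rangle = \sum_{n=0}^{\infty} c_n |n\rangle## with ##\sum_{n=0}^{\infty} |c_n|^2 = 1##. The basis ##\{|n\rangle\}## is labeled by the non-negative integers, so it is countably infinite, i.e., denumerable.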