How to Better Define Information in Physics

This article is a survey of information and information-conservation topics in physics. It is intended for an amateur physics audience. In order to make this article suitable for all levels of readers, most of my references are to Professor Leonard Susskind’s video courses, or to Wikipedia.

Start Simple

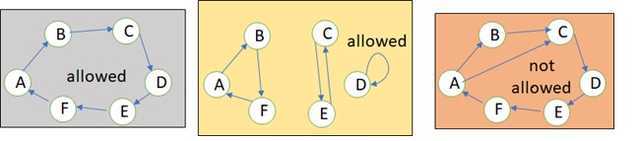

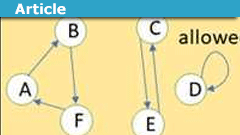

In both his Classical Mechanics[i] and Statistical Mechanics[ii] courses, Susskind begins with simple examples about the allowable laws of physics. Consider a system with 6 possible states, A-F. We are interested in the allowable rules for how the system can evolve. In the blue example, A goes to B, B to C, C to D, D to E, E to F, and F to A, or ABCDEF overall. The yellow example is more complex, with cycles ABF, CE, and D, but it is still allowable. Allowable rules are deterministic, time reversible[iii], and they conserve information. For each of those allowable laws, we could write corresponding equations of motion. What is the information conserved? From any state, we can calculate how we got there (the past), where we’ll go next (the future), and which cycle (such as yellow ABF) we belong to.

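The red example has two arrows coming out of A, and C has two arrows coming in. Two arrows out of A means that the future of A is not determined; two arrows into C means that the past of C is not determined. Such rules are not deterministic, not time-reversible, and do not conserve information, and thus are not allowed.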
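
The allowed/forbidden distinction can be checked mechanically. The sketch below is our own illustration, not Susskind's. Each rule is a hypothetical dictionary that maps a state to the list of states it may go to next. The arrow order inside each yellow cycle is assumed. The red rule is invented to have the two defects the text describes, so the figure's exact arrows may differ. A rule is allowed exactly when it is a permutation of the states: one arrow out of every state and one arrow into every state.

```python
def is_allowed(rule, states):
    """Allowed = exactly one arrow out of and exactly one arrow into every state.

    `rule` maps each state to the list of states it can go to next. Together the
    two conditions say the rule is a permutation: deterministic (one future) and
    reversible (one past), so distinct states stay distinct.
    """
    one_arrow_out = all(len(rule.get(s, [])) == 1 for s in states)
    arrivals = [t for s in states for t in rule.get(s, [])]
    one_arrow_in = sorted(arrivals) == sorted(states)
    return one_arrow_out and one_arrow_in


def inverse(rule):
    """Run an allowed rule backwards: for each state, the state it came from."""
    return {nxt[0]: s for s, nxt in rule.items()}


def cycle_of(rule, start):
    """Follow an allowed rule from `start` until it returns, listing the cycle."""
    cycle, s = [start], rule[start][0]
    while s != start:
        cycle.append(s)
        s = rule[s][0]
    return cycle


STATES = list("ABCDEF")
blue = {"A": ["B"], "B": ["C"], "C": ["D"], "D": ["E"], "E": ["F"], "F": ["A"]}
# Assumed arrow order inside each yellow cycle: A->B->F->A, C->E->C, D->D
yellow = {"A": ["B"], "B": ["F"], "F": ["A"], "C": ["E"], "E": ["C"], "D": ["D"]}
# Hypothetical red rule: two arrows out of A, and two arrows into C
red = {"A": ["B", "C"], "B": ["C"], "C": ["D"], "D": ["E"], "E": ["F"], "F": ["A"]}

print(is_allowed(blue, STATES), is_allowed(yellow, STATES), is_allowed(red, STATES))  # True True False
print(inverse(blue)["A"])     # F: the past of A is recoverable
print(cycle_of(yellow, "B"))  # ['B', 'F', 'A']
```

For the allowed rules, `inverse` and `cycle_of` are the "past" and "which cycle" computations from the text. Neither is well defined for the red rule.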

Next, consider a 6-sided die. The die has 6 microstates. Let’s define I to be the information in this system, or the number of microstates. What do we know about the die’s position? Maximum knowledge K occurs when we know exactly which side is up. Minimum knowledge occurs when we have no idea about the position. We can define entropy E as proportional to our uncertainty about the position. Because of the way we defined I and E, we can say trivially that K=I-E or I=K+E. I should add that K could also be called “knowability” or “observability”, just to emphasize that there is no need for an intelligent being in physics.
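
The bookkeeping K = I - E can be made concrete for the die under two assumptions of our own, neither of which is a definition from the source. First, I is measured in bits as log2 of the number of microstates. Second, E is the Shannon entropy of our probability distribution over the six faces. The function names are illustrative.

```python
import math

N_FACES = 6
# Assumption: measure I in bits as log2(number of microstates); fixed by the system.
INFORMATION_BITS = math.log2(N_FACES)


def entropy_bits(probs):
    """E: Shannon entropy (uncertainty), in bits, of our distribution over the faces."""
    return sum(-p * math.log2(p) for p in probs if p > 0)


def knowledge_bits(probs):
    """K = I - E for a given state of knowledge about which face is up."""
    return INFORMATION_BITS - entropy_bits(probs)


no_idea = [1 / 6] * 6                      # minimum knowledge: K is (about) 0
odd_face = [1 / 3, 0, 1 / 3, 0, 1 / 3, 0]  # we only know an odd face is up: K is 1 bit
exactly_known = [0, 0, 1, 0, 0, 0]         # maximum knowledge: K equals I, about 2.585 bits

for label, probs in [("no idea", no_idea), ("odd face", odd_face), ("exact", exactly_known)]:
    print(f"{label:9s} E = {entropy_bits(probs):.3f} bits   K = {knowledge_bits(probs):.3f} bits")
```

I stays at log2(6) bits whatever we know. Only the split between K and E moves.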


First off, we have to be clear about the rather strange way in which, in this theory, the word “information” is used; for it has a special sense which, among other things, must not be confused at all with meaning. It is surprising, but true, from the present viewpoint, two messages, one heavily loaded with meaning, and the other pure nonsense, can be equivalent as regards information. — Warren Weaver, The Mathematics of Communication, 1949 Scientific American.

Those definitions of entropy and that relationship cannot be applied literally in other physics contexts, but, as we will see, they are nevertheless useful. There is also a familiar everyday analogy: a 1TB hard drive has the capacity to store 1TB of information, but when it is new or freshly erased, it contains near-zero knowledge.

Consider 4 gas molecules in a box. [I chose the small number 4 to avoid thermodynamics definitions.] The number of possible microstates grows very rapidly (exponentially) with the number of molecules. If the 4 molecules were bunched in one corner, we would have better knowledge of their positions than if they were distributed throughout the box. The point is that entropy (and thus knowledge) can vary with the state of the system, whereas the number of microstates (information) does not vary with state.
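
Here is a toy count that makes this concrete. Everything in it is our own assumption: the box is divided into a hypothetical 4 x 4 grid of cells, the 4 molecules are treated as distinguishable, and entropy is log2 of the number of microstates consistent with what we know about where the molecules are.

```python
import math

# Hypothetical coarse-grained box: 4 x 4 = 16 cells; 4 distinguishable molecules.
CELLS = [(i, j) for i in range(4) for j in range(4)]
N_MOLECULES = 4

total_microstates = len(CELLS) ** N_MOLECULES    # 16**4 = 65536, fixed by the system
information_bits = math.log2(total_microstates)  # 16 bits, whatever the state


def entropy_and_knowledge(allowed_cells):
    """Entropy = log2(# microstates consistent with what we know); knowledge = I - entropy."""
    consistent = len(allowed_cells) ** N_MOLECULES
    entropy = math.log2(consistent)
    return entropy, information_bits - entropy


corner = [(0, 0)]                           # all molecules bunched in one corner cell
left_half = [c for c in CELLS if c[0] < 2]  # all molecules somewhere in the left half
anywhere = CELLS                            # spread throughout the box

for label, cells in [("corner", corner), ("left half", left_half), ("anywhere", anywhere)]:
    s, k = entropy_and_knowledge(cells)
    print(f"{label:9s} entropy = {s:4.1f} bits   knowledge = {k:4.1f} bits")
```

The corner state gives 0 bits of entropy and 16 bits of knowledge. The left-half state gives 12 and 4. The spread-out state gives 16 and 0. The information stays at 16 bits in every case.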

That leads me to definitions that we can use in the remainder of this article. “Knowledge and Entropy are properties of the system and the state of the system (and possibly external factors too). Information is a property of the system and independent of the state.[iv]” In my opinion, lack of attention to that distinction between information and knowledge is the origin of many misunderstandings regarding information in physics.

Microstates, Macrostates, and Thermodynamics

A definition of information consistent with our simple cases is “the number of microstates.” Susskind says[v] that conservation of information could also be described as conservation of distinction. Distinct states never evolve into more or fewer distinct states.

In thermodynamics, a process can be reversible or irreversible, and we also have the famous 2nd Law. However, thermodynamics works with macroscopic quantities such as temperature. In this article we are discussing microstates, not macrostates, so some thermodynamic concepts and definitions do not apply.

Notwithstanding the above, I can’t resist mentioning that in Boltzmann’s definition[vi], entropy is “a measure of the number of possible microscopic states (or microstates) of a system in thermodynamic equilibrium, consistent with its macroscopic thermodynamic properties (or macrostate).” It then follows that, when both are measured in the same units, the information (the total number of microstates) is greater than or equal to the entropy (the microstates consistent with one macrostate). For the case with exactly one macrostate, entropy and information are equal. That is consistent with information being independent of the (macro)state.
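
In symbols, the argument runs as follows. This is a sketch in standard notation, not taken from the source. Let ##W(M)## be the number of microstates consistent with macrostate ##M##. Let ##\Omega## be the total number of microstates, which is the information in this article's sense.

##S(M) = k_B \ln W(M)##

##\Omega = \sum_{M'} W(M') \ge W(M) \quad \Longrightarrow \quad S(M) \le k_B \ln \Omega##

Equality holds exactly when every microstate belongs to ##M##, which is the single-macrostate case described above.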

An amusing aside: Susskind calls[vii] Conservation of Information the -1st law of thermodynamics. The 0th law of thermodynamics is “If A is in thermal equilibrium with B, and B with C, then A must be in thermal equilibrium with C,” and the more familiar 1st law that we teach students is Conservation of Energy. He emphasizes that information is more fundamental than entropy and energy.

Information in Classical Mechanics

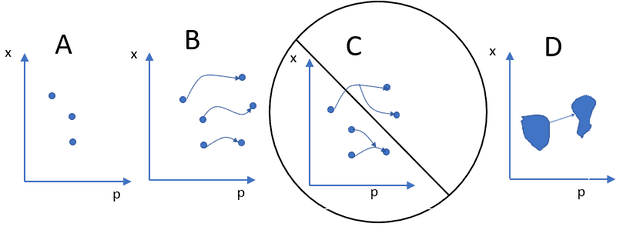

In classical mechanics we learn of phase space, meaning the multidimensional space formed by the position x and momentum p degrees of freedom. In A, we see a depiction of phase space (showing only one x and one p axis). Each distinct point can be considered a possible microstate. In time, the states can evolve to other places in phase space, as in B. But trajectories never fork or converge (the forbidden behavior shown in C), thus conserving the number of distinct microstates. A, B, and C are the continuous analogs of the discrete evolutions (blue, yellow, red boxes) we started with above.

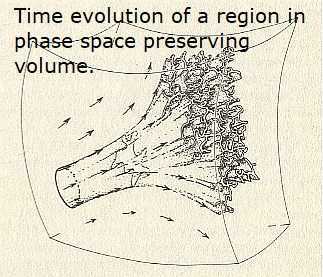

Even better, Liouville’s Theorem[viii] says that if we choose a region in phase space (see D above), it evolves in time to different positions and shapes, but it conserves the volume in phase hyperspace. Liouville’s Theorem is often said to express conservation of information in classical mechanics. Referring to the little picture on the right, drawing the boundaries of the volume determines the system, and thus the information. The shape of the boundary (state) evolves with time. Evolution can be ordered or chaotic.
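
Liouville's Theorem can be observed numerically. This sketch rests on several assumptions of our own:

- A unit pendulum with ##H = p^2/2 - \cos x## stands in for a generic Hamiltonian system.
- Region D is a small disk in the (x, p) plane, represented by points on its boundary.
- The flow is approximated with the leapfrog integrator, which is itself an area-preserving (symplectic) map.

As the boundary evolves, its shape distorts. The enclosed area, computed with the shoelace formula, stays the same apart from the small error of approximating a curve by a polygon.

```python
import math


def pendulum_force(x):
    # Hypothetical unit pendulum, H = p**2 / 2 - cos(x), so dp/dt = -sin(x)
    return -math.sin(x)


def leapfrog(x, p, dt, steps):
    """Evolve one phase-space point with kick-drift-kick leapfrog (a symplectic map)."""
    for _ in range(steps):
        p += 0.5 * dt * pendulum_force(x)
        x += dt * p
        p += 0.5 * dt * pendulum_force(x)
    return x, p


def polygon_area(points):
    """Shoelace formula: area enclosed by an ordered list of (x, p) vertices."""
    total = 0.0
    for (x1, p1), (x2, p2) in zip(points, points[1:] + points[:1]):
        total += x1 * p2 - x2 * p1
    return abs(total) / 2.0


# Region D: a small disk in phase space, represented by 1000 points on its boundary
N_POINTS, X0, P0, RADIUS = 1000, 1.0, 0.0, 0.3
boundary = [(X0 + RADIUS * math.cos(2 * math.pi * k / N_POINTS),
             P0 + RADIUS * math.sin(2 * math.pi * k / N_POINTS)) for k in range(N_POINTS)]

# Every boundary point follows its own trajectory for t = 5 (500 steps of 0.01)
evolved = [leapfrog(x, p, dt=0.01, steps=500) for x, p in boundary]

area_before = polygon_area(boundary)
area_after = polygon_area(evolved)
print(f"area before: {area_before:.5f}   area after: {area_after:.5f}")
```

Adding a friction term to the force would make the area shrink. That would be the continuous version of converging trajectories, as in C.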


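Is entropy conserved too? In general, no (again: system ↔ information, state ↔ entropy). However, if we split the definition of entropy into coarse-grained and fine-grained entropy, that leads to an interesting side topic: the fine-grained (Gibbs) entropy of a phase-space distribution is conserved under Hamiltonian evolution, while the coarse-grained entropy can increase.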
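
A toy discrete analogue shows how the two entropies can part ways. This is our own construction, not from the source. Sixteen cells play the role of phase space, and a bijective rule (transposing a 4 x 4 grid) plays the role of Hamiltonian evolution. Coarse graining means smearing probability uniformly within blocks of 4 cells. The bijection leaves the fine-grained entropy unchanged, while the coarse-grained entropy rises.

```python
import math
from collections import Counter

N_CELLS, BLOCK = 16, 4  # hypothetical: 16 fine cells, coarse-grained into 4 blocks of 4


def transpose(cell):
    """A bijective (information-conserving) rule on the 16 cells: 4a + b -> 4b + a."""
    a, b = divmod(cell, BLOCK)
    return BLOCK * b + a


def fine_entropy(occupied):
    """Entropy in bits of a distribution spread uniformly over the occupied cells."""
    return math.log2(len(occupied))


def coarse_entropy(occupied):
    """Entropy in bits after smearing the probability uniformly within each block."""
    counts = Counter(cell // BLOCK for cell in occupied)
    block_probs = [n / len(occupied) for n in counts.values()]
    return sum(-q * math.log2(q) for q in block_probs) + math.log2(BLOCK)


assert sorted(map(transpose, range(N_CELLS))) == list(range(N_CELLS))  # really a bijection

before = [0, 1, 2, 3]                   # all probability inside block 0
after = [transpose(c) for c in before]  # -> [0, 4, 8, 12], one cell in each block

print(fine_entropy(before), coarse_entropy(before))  # 2.0 2.0
print(fine_entropy(after), coarse_entropy(after))    # 2.0 4.0
```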

In classical mechanics we should also consult “the most beautiful idea in physics”, Noether’s Theorem. It states that every differentiable symmetry of the action of a physical system has a corresponding conservation law.

It would be nice to use Noether’s Theorem to prove a relationship between information conservation and some symmetry of nature. I found one source that says that Noether’s Theorem proves that information conservation is a result of time reversal symmetry[ix]. Another source says that it is not[x].

Alas, time reversal is discrete and non-differentiable, so that won’t work. How about some other symmetry? If ##I## represents the quantity of information, and if we wish to prove that ##\frac{dI}{dt}=0## using the Principle of Least Action, then the quantity of information ##I## should appear in the expression for the action. As far as I know, it does not. So, it seems that we can’t use Noether’s Theorem to prove information conservation.
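
For reference, here is the textbook form of Noether’s result for a Lagrangian ##L(q,\dot q)##. This is standard material, not taken from the cited sources. If ##L## is exactly unchanged by the continuous transformation ##q_i \to q_i + \epsilon K_i(q)##, then

##Q = \sum_i \frac{\partial L}{\partial \dot q_i}\, K_i(q), \qquad \frac{dQ}{dt} = 0##

If ##L## has no explicit time dependence (time translation symmetry), the conserved quantity is the energy:

##E = \sum_i \dot q_i \frac{\partial L}{\partial \dot q_i} - L, \qquad \frac{dE}{dt} = 0##

Every conserved quantity produced this way is built from ##L## and requires an infinitesimal parameter ##\epsilon##. Time reversal offers no ##\epsilon##, and no ##I## appears in ##L##, which is the obstacle described above.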

Information in Quantum Mechanics

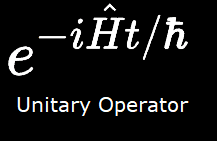

Unitarity  is one of the postulates of Quantum Mechanics[xi]. Unitarity is also said to be the foundation of the conservation of information. (By the way, I exclude from this discussion all interpretations of quantum mechanics.)


Unitarity is also said to conserve probability. Huh? So now information is probabilities? These Wikipedia sources[xii] say yes, conservation of probabilities implies conservation of information.

In another context[xiii], Susskind said that if quantum evolutions were not unitary, the universe would wink out of existence. I believe that what he was referring to is this. If evolutions were sub-unitary, then the probabilities would shrink at each time evolution, until only one microstate remained for the entire universe. If they were super-unitary, the probabilities would increase with each evolution to the point where the identity of particles would be smeared to oblivion. In either case, the universe as we know it could not exist. I interpret all that as saying that the number of microstates (hence information) is conserved in quantum evolution. One might also say that the system is conserved while the state of the system evolves.
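
Here is a two-state sketch of the difference, using plain Python complex numbers. The particular unitary, ##e^{-i\theta\sigma_x}## with an arbitrary angle, and the scale factors 0.9 and 1.1 for "sub-" and "super-unitary" evolution are all illustrative assumptions. Unitary evolution keeps the total probability at 1 and keeps orthogonal (distinct) states orthogonal. The scaled versions leak or inflate probability at every step.

```python
import math


def matvec(m, v):
    """Apply matrix m to column vector v."""
    return [sum(m[i][j] * v[j] for j in range(len(v))) for i in range(len(m))]


def inner(u, v):
    """Inner product <u|v> (complex-conjugate the bra)."""
    return sum(a.conjugate() * b for a, b in zip(u, v))


def total_probability(v):
    return inner(v, v).real


THETA = 0.7  # hypothetical rotation angle
c, s = math.cos(THETA), math.sin(THETA)
U = [[c, -1j * s],
     [-1j * s, c]]  # exp(-i * THETA * sigma_x): a unitary 2x2 matrix

up, down = [1 + 0j, 0j], [0j, 1 + 0j]  # two distinct (orthogonal) states

# Unitary evolution: total probability stays 1 and distinct states stay distinct
print(total_probability(matvec(U, up)))
print(abs(inner(matvec(U, up), matvec(U, down))))

# Hypothetical non-unitary evolutions, for contrast: scale U by 0.9 (sub) or 1.1 (super)
for factor in (0.9, 1.1):
    scaled = [[factor * entry for entry in row] for row in U]
    state = up
    for _ in range(5):
        state = matvec(scaled, state)
    print(factor, total_probability(state))  # factor**10: probability leaks away or blows up
```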

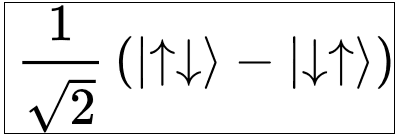

Here’s another example. Consider a system of two free electrons. Electrons are spin-½ particles, and we can know their spins because there is an observable for spin. Now consider what happens if the two electrons become fully entangled in the singlet state, which has total spin 0. In the singlet, neither electron has a definite spin state of its own, so no observable on the singlet returns the individual spins of the component electrons. This is a time evolution where information is conserved, but knowledge (or knowability) is not conserved. The state changed, but the system remained the same. Remember also that the electrons can become disentangled, thus restoring the original knowledge, while the information of the two-electron system remains invariant throughout.
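
Here is a small numerical sketch of this example, under assumptions of our own. The two-electron state is written in the basis up-up, up-down, down-up, down-down. "Knowledge about electron A" is quantified by the von Neumann entropy of A's reduced density matrix: 0 bits means a definite spin, and 1 bit means no definite spin at all. The helper names are hypothetical. The system has the same four basis states (two qubits) in both cases; only what can be known about each part changes.

```python
import math


def reduced_density_matrix(psi):
    """Trace out electron B from a two-electron state psi[2*a + b] (0 = up, 1 = down)."""
    return [[sum(psi[2 * i + k] * psi[2 * j + k].conjugate() for k in range(2))
             for j in range(2)] for i in range(2)]


def entropy_bits_2x2(rho):
    """Von Neumann entropy -Tr(rho log2 rho) of a 2x2 density matrix, via its eigenvalues."""
    a, d = rho[0][0].real, rho[1][1].real
    off = abs(rho[0][1])
    mean, half_gap = (a + d) / 2, math.sqrt(((a - d) / 2) ** 2 + off ** 2)
    eigenvalues = [mean + half_gap, mean - half_gap]
    return sum(-lam * math.log2(lam) for lam in eigenvalues if lam > 1e-12)


r = 1 / math.sqrt(2)
product = [0j, 1 + 0j, 0j, 0j]       # |up, down>: each electron has a definite spin
singlet = [0j, r + 0j, -r + 0j, 0j]  # (|up,down> - |down,up>)/sqrt(2), total spin 0

print(entropy_bits_2x2(reduced_density_matrix(product)))  # 0 bits: electron A's spin is knowable
print(entropy_bits_2x2(reduced_density_matrix(singlet)))  # about 1 bit: A alone has no definite spin
```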


This example illustrates why I prefer to define information and knowledge as distinct things.

By the way, there is also a quantum version of Liouville’s Theorem[xiv] that says (guess what) quantum information is conserved.

Information in Cosmology & General Relativity

Conservation of information at the origin of the universe is the question that first interested me in this topic. I never found an answer to that, and probably never will.

Neither Special Relativity, General Relativity, nor Cosmology directly addresses information. However, there is a red-hot issue: the so-called Black Hole Information Paradox[xv].

Stephen [Hawking] claimed that “information is lost in black hole evaporation,” and, worse, he seemed to prove it. If that was true, Gerard [’t Hooft] and I realized, the foundations of our subject were destroyed. —Leonard Susskind[xvi]

By the way, the Wikipedia article about the paradox offers yet another definition for information conservation.

There are two main principles in play:

- Quantum determinism means that given a present wave function, its future changes are uniquely determined by the evolution operator.

- Reversibility refers to the fact that the evolution operator has an inverse, meaning that the past wave functions are similarly unique.

The combination of the two means that information must always be preserved.

This so-called black hole paradox was also the topic of at least 15 PF threads. In my opinion, many of those discussions were spoiled because participants argued about information with differing definitions of the word in their heads. Nevertheless, the debate continues at levels far above my head. The Wikipedia article summarizes the latest arguments for and against. It also points to another interesting side topic, The Holographic Principle, which in turn leads to the limit on information density and to The Bekenstein Bound, which also concerns limits on information density. Does all this make you feel that you are falling down the rabbit hole? I feel that way.

By the way, I’m delighted that The Bekenstein Bound provides the only example I know of an equation relating information in bits to ordinary physical quantities.

##H \le \frac{2\pi c R M}{\hbar \ln 2} \approx 2.5769082 \times 10^{43}\,\frac{\text{bits}}{\text{kg}\cdot\text{m}} \cdot M \cdot R##

where H is the Shannon entropy in bits, M is the mass of the system in kg, and R is the radius in m of a sphere that encloses the system.
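
The numerical coefficient can be reproduced from the exact SI values of c and the Planck constant h, with ħ = h/2π. This is only a quick arithmetic check. `bekenstein_bound_bits` is a hypothetical helper name, and R is taken as the radius of a sphere enclosing the system.

```python
import math

C = 299_792_458.0            # speed of light, m/s (exact in SI)
H_PLANCK = 6.626_070_15e-34  # Planck constant, J*s (exact in SI since 2019)
HBAR = H_PLANCK / (2 * math.pi)

# Coefficient 2*pi*c / (hbar * ln 2), in bits per (kg * m)
BEKENSTEIN_COEFF = 2 * math.pi * C / (HBAR * math.log(2))


def bekenstein_bound_bits(mass_kg, radius_m):
    """Upper bound on the Shannon entropy H (bits) of mass M enclosed by a sphere of radius R."""
    return BEKENSTEIN_COEFF * mass_kg * radius_m


print(f"{BEKENSTEIN_COEFF:.7e} bits/(kg*m)")
print(f"1 kg inside a 1 m sphere: at most {bekenstein_bound_bits(1.0, 1.0):.3e} bits")
```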

Utility

Laymen frequently hold the false impression that the purpose of science is the discovery of truth. Actually, scientists are more interested in what is useful and less interested in the truth. Truth can be very philosophical, but usefulness is proved by use.

Unfortunately in physics, information is more interesting than it is useful. If ##I## represents a quantity of information measured in bits (or qubits), how do we calculate it? How do we measure it? I don’t know exactly how many bits it takes to completely describe a photon, or a nucleus, or a lump of coal. Our inability to define and measure a numerical quantity for information prevents me from using information with The Principle of Least Action, or from doing a before-after information balance on an event such as beta decay.

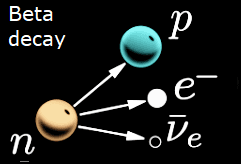

In a beta decay event, a neutron ##n## decays into a proton ##p##, an electron ##e^-##, and an electron antineutrino ##\bar{\nu}_e##. We know that momentum, energy, and information should all be conserved. Therefore, we can write a momentum balance equation and an energy balance equation, but how about an information balance equation? Suppose we have a function ##I()## that returns the quantity of information of a particle. We should be able to write ##I(n)=I(p)+I(e^-)+I(\bar{\nu}_e)##. Since ##I()## should always be positive, it also seems conclusive that the information in a neutron must be greater than the information in a proton. But we have no such function ##I()##. We can’t say how much information is in a neutron, yet we can write equations relating ##I(n)## to other information. Hence, my complaint about information’s lack of utility.
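
For contrast, the energy balance really can be evaluated. The sketch below assumes the CODATA 2018 rest energies, neglects the antineutrino's mass, and computes the kinetic energy released, ##Q = (m_n - m_p - m_e)c^2##. The `information()` function is a deliberately unimplemented, hypothetical placeholder for the missing ##I()##.

```python
# Rest energies in MeV (CODATA 2018 values); antineutrino mass neglected
M_NEUTRON = 939.56542052
M_PROTON = 938.27208816
M_ELECTRON = 0.51099895


def q_value():
    """Kinetic energy (MeV) released in n -> p + e- + anti-nu_e: the energy balance."""
    return M_NEUTRON - (M_PROTON + M_ELECTRON)


def information(particle):
    """Hypothetical I(): physics gives us no accepted definition to put here."""
    raise NotImplementedError(f"no accepted definition of I({particle})")


print(f"Q = {q_value():.4f} MeV, shared among the three decay products")
```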


Information and Causality

Causality is a fundamental principle in physics. There is no theory of causality, nor is it derived from other laws. Causality never appears explicitly in equations. Yet if causality was violated, physics and the universe would be thrown into chaos.

Conservation of Information (COI) is a fundamental principle in physics. There is no theory of COI, nor is it derived from other laws. COI never appears explicitly in equations. Yet if COI was violated, physics and the universe would be thrown into chaos.

Yet the following subjective statements are also true. “Causality means that the cause comes before the effect” is instantly understood and accepted by almost everyone. “COI means that information is never created or destroyed” is instantly misunderstood and challenged by almost everyone.

Conclusion

Let’s summarize. In physics, the word information is closely related to microstates and probabilities. In some limited circumstances information is equal to entropy, but in most cases it is not. Information should never be confused with knowledge, despite what natural language and the dictionary say, and never ever confused with the knowledge of intelligent beings. Despite our inability to quantify information, conservation of information seems firmly established in many contexts. Limits to information density also appear to be well-founded, again despite our inability to quantify information. Information in physics has tantalizing parallels with Shannon Information Theory in communications and computer software, but it is not identical.

On PF, we frequently remind members that energy does not exist by itself in empty space; energy is a property of fields and particles. Should we say the same thing about information, that it is a property of fields and particles? Some people invert that view and speculate that information is the building block from which fields, particles, and even reality are built[xvii]. A third view is that there is no such thing as information as a physical quantity; it is just a linguistic artifact of our way of speaking. This article brings no clarity to those questions. All we have is hand waving. I blame that on less-than-useful definitions.

The above notwithstanding, the future sounds bright. Professor Susskind has been touring the country with a series of lectures including ER=EPR in the title[xviii]. He is discussing the research direction of his Stanford Institute for Theoretical Physics. He said that they are looking toward information theory to unite quantum mechanics with general relativity. Of course, it remains to be seen if they will succeed. If they do, Nobel Prizes will surely follow. But for me, their success would hopefully produce one thing even more welcome; namely, a more useful quantitative definition of the word information.

A postscript. Just as I was wrapping up this article, I stumbled across another Susskind quote[xix] that turned everything on its head.

When a physicist, particularly physicists of my particular interest, talks about quantum information, they are usually talking about entanglement. Leonard Susskind.

Ay ay ay; I have a headache.

What is your definition of information in physics? Please contribute it to the discussion thread. But no QM interpretations, please. Also, quantitative definitions, please, not examples. Finally, please remember that a bit is a unit, not a definition.

Thanks to member @Dale for his helpful suggestions.

References:

[i] Susskind, Classical Mechanics, Lecture 1

[ii] Susskind, Statistical Mechanics, Lecture 1

[iii] In thermodynamics we have both reversible and irreversible processes. Information depends on microstates. Thermodynamics depends on macrostates, so it does not apply here.

[iv] Susskind, Statistical Mechanics, Lecture 1

[v] Susskind, Classical Mechanics, Lecture 5

[vi] Wikipedia: Boltzmann’s principle

[vii] Susskind, Statistical Mechanics, Lecture 1

[viii] Wikipedia: Liouville’s theorem (Hamiltonian)

[ix] PBS Space Time: Why Quantum Information is Never Destroyed

[x] Wikipedia: Symmetry (physics)

[xi] Wikipedia: Mathematical formulation of quantum mechanics

[xii] Wikipedia: Probability Current, Continuity Equation

[xiii] Susskind, The Black Hole War: My Battle with Stephen Hawking to Make the World Safe for Quantum Mechanics (book)

[xiv] Wikipedia: Quantum Liouville equation; Moyal’s equation

[xv] Wikipedia: Black Hole Information Paradox

[xvi] Susskind, The Black Hole War: My Battle with Stephen Hawking to Make the World Safe for Quantum Mechanics (book), page 21

[xvii] Scientific American: Why information can’t be the basis of reality

[xviii] A YouTube search for ER=EPR returns 3,480 hits. ER refers to the Einstein-Rosen bridge, or wormhole, a solution of General Relativity. EPR refers to the Einstein–Podolsky–Rosen paradox regarding quantum entanglement. So ER=EPR is a clever way to suggest the unification of GR and QM.

[xix] Susskind: ER=EPR but Entanglement is Not Enough

Dick Mills is a retired analytical power engineer. Power plant training simulators, power system analysis software, fault-tree analysis, nuclear fuel management, process optimization, power grid operations, and the integration of energy markets into operation software, were his fields. All those things were analytical. None of them were hands-on.

Dick has also been an exterminator, a fire fighter, an airplane and glider pilot, a carney, and an active toastmaster.

During the years 2005-2017, Dick lived and cruised full-time aboard the sailing vessel Tarwathie (see my avatar picture). That was very hands-on. During that time, Dick became a student of Leonard Susskind and a physics buff. Dick’s blog (no longer active) is at dickandlibby.blogspot.com; it has more than 2700 articles relating to the cruising life.

I reached similar conclusions in the thread https://www.physicsforums.com/threads/quantum-measurement-and-entropy.953690/page-2 and would like to have your comments.

“Knowledge and Entropy are properties of the system and the state of the system (and possibly external factors too). Information is a property of the system and independent of the state.[iv]”

Reference: https://www.physicsforums.com/insights/how-to-better-define-information-in-physics/

Definitions are somewhat a matter of taste. It seems strange to define "information" to be distinct from "knowledge". Your general idea is that "information" has to do with the set of possible states of a type of phenomena (e.g. containers of gas) and that "knowledge" has to do with knowing about subsets of states that are actually possible for a particular example of that phenomenon (e.g. facts about a particular container of gas or a particular proper subset of possible containers of gas).

By that approach, defining the "information" of a "system" requires defining its states. However we can define the "states" of phenomena in various ways, and the "information" of a system is only defined after "states" are defined.

For example, a system might obey dynamics that are not 1-to-1, given by:

a1 -> b1 or b2

a2 -> b1

b1 -> a1

b2 -> a2

for states a1,a2,b1,b2

A person might choose to define (or only be aware of) a set of two states given by

A = {a1,a2}

B = {b1,b2}

and the 1-to-1 dynamics in terms of those states is given by

A->B

B->A

So it seems that questions about preserving information depend on someone's knowledge or discretion in defining the states of a system – in the common language sense of the word "knowledge".
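
The commenter's example can be checked directly. In the sketch below, the dictionaries encode exactly the arrows listed above. `is_one_to_one` and `coarse_grain` are hypothetical helpers of our own. The fine rule fails the 1-to-1 test, yet lifting it to the blocks A and B produces a rule that passes.

```python
def is_one_to_one(rule):
    """True if every state has exactly one successor and no state has two predecessors."""
    deterministic = all(len(nexts) == 1 for nexts in rule.values())
    targets = [t for nexts in rule.values() for t in nexts]
    injective = len(targets) == len(set(targets))
    return deterministic and injective


def coarse_grain(rule, blocks):
    """Lift a fine rule to blocks: X -> every block containing a successor of a member of X."""
    label = {s: name for name, members in blocks.items() for s in members}
    return {name: {label[t] for s in members for t in rule[s]}
            for name, members in blocks.items()}


fine = {"a1": {"b1", "b2"}, "a2": {"b1"}, "b1": {"a1"}, "b2": {"a2"}}
blocks = {"A": {"a1", "a2"}, "B": {"b1", "b2"}}

coarse = coarse_grain(fine, blocks)
print(is_one_to_one(fine))    # False
print(coarse)                 # {'A': {'B'}, 'B': {'A'}}
print(is_one_to_one(coarse))  # True
```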

Even better, Liouville’s Theorem[viii] says that if we choose a region in phase space (see D above); it evolves in time to different positions and shapes, but it conserves the volume in phase hyperspace.

My understanding of Liouville's Theorem is that it deals with a conserved "density" defined on phase space, not with the concept of "volume" alone. Perhaps "phase space" in physics has a definition that involves this density in addition to the ordinary mathematical concept of "volume"?

anorlunda,

Your essay has revived my interest in a subject that has lingered unresolved in my mind for a long time. It has been years since I waded through understanding Shannon’s H-theorem and its resulting bits/symbol metric. His conceptual breakthrough in achieving an actual measure for information completely transformed communication engineering. Regarding other scientific disciplines, I remember it was likened to a horse that was “jumped on and rode off in all directions.”

Still unresolved is achieving a clear understanding of the role of information as a conserved property in physical dynamics. To that end, I am uncertain if a definition of information arising from the artifact of human communication engineering is ultimately useful in understanding the nature of physical information. Shannon information and physical information are not the same; they vary significantly in actual energy throughput. Does a binary bit say something about the world or simply say something about what is practical for communication and computing devices? Does it satisfy our need for countable elements in our equations while obscuring a deeper truth?

That’s a bit rhetorical. Clearly, information theory is an extensive and well proven conceptual terrain. To better understand information in physical dynamics, I believe what is needed is a figure and ground shift on the notion of bit. In so far as words will serve, I will try to make that notion more substantive and palatable. Whether this is useful or accurate, it may leaven the discussion.

You note that Susskind equates conserved information with conserved distinction. Clearly the term ‘distinction’ has less baggage than does that of ‘information’ and I would like to use that characterization in the following argument. Here we can make use of the graphic conceptual device “||” to symbolize a distinction between two or more things. Thus:

silk purse||sow’s ear

hot||cold

high||low

x||y

sender||receiver

Physics requires more explicit distinctions along some dimension:

20 cal||80 cal

15 kg||30 g

80ºC||105ºC

If we consider the fundamental schema of classical thermodynamics it could be variously rendered as:

left chamber||right chamber

locomotive boiler||atmosphere

240 p.s.i.||14.7 p.s.i.

While it is effortless and painless to make these distinctions on paper, I have seen photographs of boiler explosions that leveled half-block areas. That is, safely sustaining and utilizing a physical distinction in pressure between 240 p.s.i. and 14.7 p.s.i. requires a material counterpoise of engineered steel plating. This is a general truth. Distinct material states must be effectuated, asserted and sustained within a ceaselessly challenging dynamic.

Let’s recall Gregory Bateson’s characterization of information as “the difference that makes a difference” and take it most literally. This is where the figure and ground shift in our understanding of physical information may occur and it requires a conceptual shift that is not easy to accomplish or work through.

What makes the difference in the example of the locomotive boiler? Where is the information? Is it in the enumeration of the distinct states of the system or in that which actually makes the distinct states of the system? Here we come nose to nose with decades old practice and preconception about the nature of information and its established measure. If we choose the latter, what does that really mean, what’s the benefit and where is the metric?

As to the metric, the hard work is already done because it lies within well-established physics. The general picture is that physical information is at root any means of constraint on energy form. The general rule would be that energy is conserved within changes of form and we would look for information/distinction that is conserved in changes of energy. In the case of the steel plate containment this would involve delving into stress tensors and what persists at fracture. We may find that physical information has its own “alphabet” of immutable distinctions that are deeply rooted and arise from spontaneous symmetry breaking in particle physics at a fundamental level.

As to meaning and benefit, I can only offer hand waving and a sales pitch. I think it would lead to a deeper understanding of the way things work and clarify some features of our physics and ultimately cosmology.

In perhaps overly exuberant summation:

1) I believe there is utility and ultimately broad consistency in considering information as a conserved, active principle in physical dynamics.

2) Information is most usefully viewed as the integral, active counterpoise to energy in physical dynamics.

3) Energy and information are the warp and weft of the physical universe and through their entwinement all structure arises.

4) Only in extremity does energy occur in nature without information as its counterpoise.

5) A consistent metric for this view is possible.

6) Inertial force is the most prominent manifestation of physical information as considered and offers the clearest view of its co-respondent relationship with energy. Their measures are mutually defined in interaction.

7) As such, information forms the narrow point in time’s hour glass, is the co-arbiter of state, puts poise in potential energy, adjusts the small arrows in entropy and is cosmologically fundamental.

I realize this is a big chunk of speculation and I hope it doesn’t set off any alarm bells within the forum. I would be grateful to have a more-or-less forgiving forum to discuss these nascent notions.

Thank you for posting your essay. It was a catalyst. This is the first time I could sketch out these ideas in a sustained if patchy argument. Your broad-ranging, probing essay with its html-tabbed references was a great review of the terrain.

I would certainly appreciate knowing if these ideas have any traction for you.

Regards,

Btw, since you mentioned Professor Lewin in the article: I had the privilege of taking basic EM physics from him when I was at MIT. The lectures were in a huge lecture hall that seated several hundred, with a stage at the front. When we got there for the first lecture, a fair portion of the stage was occupied by a Van de Graaff generator. It sat there for most of the hour; then, at the very end of the lecture, Lewin said he was going to give a simple demonstration of electrostatics: he fired up the generator, climbed onto a chair, touched a rod to the generator, and then touched the rod to his hair (which was still pretty long back then), which immediately stood on end in all directions.

Nice article!

One comment on Noether's Theorem. With regard to energy conservation, Noether's Theorem says that energy conservation is due to time translation symmetry (not reversal). The only role I could see for Noether's Theorem with regard to information conservation would be if there were some well-defined relationship between energy and information, so that if the former were conserved, the latter would also have to be conserved. I can think of some hand-waving arguments that might make that plausible, but I don't know if a rigorous argument along these lines has been formulated.

I appreciated Gregory Bateson’s characterization of information as the "difference that makes a difference." I believe he was talking substantively, that “make” was an active verb.

Perhaps it leads to one of those philosophical games of golf without the holes, but may we agree that we dwell in an actual physical world whose dynamic structure is created by its enduring, manifested distinctions?

Corn on one side, cows on the other, or a pH gradient within a cell: these distinctions are made to happen and evolve in time. Information is more than the result of our measurement. In the wild it has an amperage and mediates thermodynamic potential.

This is the way I have come to view it, and if the text is flat-footedly declarative, it is simply a rhetorical stance seeking comment.

Best,

A very interesting topic that can certainly be twisted around from different perspectives! Indeed, I think it is a very general problem in foundational physics to find objective, observer-independent measures. And information measures or probability measures are certainly at the heart of this. I will refrain from commenting too much, as it risks being too biased by my own understanding.

You seem to be circling around a desire to formulate a conservation law in terms of a principle of least action? If so, note that we have the Kullback-Leibler divergence (relative entropy, [itex]S_{K-L}[/itex]), whose minimum can be shown to coincide with maximum transition probability. A possible principle is then to minimize information divergence. In the simple case of dice throwing, the statistical weight enters as an integration weight of [itex]e^{-MS_{K-L}}[/itex]. You find this relation from basic considerations if you consider the probability of dice sequences. The factor M is then related to sample size. So we get both measures of "information" and "amount of data" in the formula.
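The [itex]e^{-MS_{K-L}}[/itex] weight can be checked numerically. The sketch below assumes the "simple dice throwing case" refers to the standard multinomial large-deviation estimate: for M throws of a die with face probabilities p, the probability of observing empirical frequencies q behaves like e^{-M D_KL(q||p)}, up to slowly varying prefactors. The function names `kl_divergence` and `log_multinomial_prob` and the biased frequencies `q` are hypothetical, chosen only for illustration.

```python
import math


def kl_divergence(q, p):
    """Relative entropy D_KL(q || p) in nats; faces with q_i = 0 contribute nothing."""
    return sum(qi * math.log(qi / pi) for qi, pi in zip(q, p) if qi > 0)


def log_multinomial_prob(counts, p):
    """Natural log of the multinomial probability of observing `counts` given face probabilities `p`."""
    m = sum(counts)
    # ln( M! / prod(n_i!) ) computed with lgamma to avoid huge factorials
    log_coeff = math.lgamma(m + 1) - sum(math.lgamma(n + 1) for n in counts)
    return log_coeff + sum(n * math.log(pi) for n, pi in zip(counts, p) if n > 0)


fair = [1 / 6] * 6
# Hypothetical observed frequencies, biased toward the sixth face
q = [0.1, 0.1, 0.1, 0.1, 0.1, 0.5]

for m in (60, 600, 6000, 60000):
    counts = [round(qi * m) for qi in q]  # M throws that realize frequencies q exactly
    rate = -log_multinomial_prob(counts, fair) / m
    print(f"M={m:6d}  -ln P / M = {rate:.4f}  D_KL(q||p) = {kl_divergence(q, fair):.4f}")
```

As M grows, -ln P / M should approach D_KL(q||p) from above, with the gap shrinking roughly like ln M / M; that polynomial prefactor is why the exponential form is only an asymptotic statement, and why M plays the role of "amount of data."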

But when you try to apply this to physics, you soon realise that the entire construct (computation) is necessarily context- or observer-dependent. This is why relations between observers become complex. Ultimately, I think it boils down to the issue of how to attach probability to the observational reality of different observers.

So I think this is an important question, but one should not expect a simple, universal answer. Two observers will generally not agree on information measures or probability measures in a simple, static way. Instead, their disagreement might be interpreted as the basis for interactions.

Edit: If you, like me, have been thinking in this direction, note the intriguing structural analogies to inertia. Sample size seems to take the place of mass or energy, and information divergence that of inverse temperature. Then ponder what happens when you enlarge the state space with the rate of change, or even the conjugate variables. The simple dissipative dynamics then turns into something non-trivial, but still governed by the same logic.

/Fredrik

Greg,

Thanks for this article, very useful in clarifying the term information and yes, there is a great deal of ambiguity in its usage. I come at it along a slightly different path but find myself appreciating your exacting definition.

I also find it useful to distinguish between two broad species of information, the domestic and the feral: the former being the abstraction of physical-world distinctions by autonomous systems (like me) for purposes of navigation in the broadest sense, and the latter, the feral, being the actual physical distinctions found in the repertoire of the physical world. I hope that makes sense.

I have taken it one step further by elevating information to the role of an active player in physical dynamics. Distinctions are not simply notables; they must be declared and made manifest, and that is the dynamic role of information. I think it is useful and ultimately more accurate to consider information as the constant, active counterpoise to energy. There is never one without the other.

I'd be interested in your take on that.

Regards,

Very accessible and very helpful. This is one I will come back to multiple times, @anorlunda.

Wonderful article @anorlunda, a must read!

Nice essay. Another Insight article that made it on my list of standard references.