Plus/minus What? How to Interpret Error Bars

People sometimes find themselves staring at a number with a ± in it when a new physics result is presented. But what does it mean? The aim of this Insight is to give a fast overview of how physicists (and other scientists) tend to present their results in terms of statistics and measurement errors. If we are faced with a value ##m_H = 125.7\pm 0.4## GeV, does that mean that the Higgs mass definitely has to be within the range 125.3 to 126.1 GeV? Is 125.3 GeV as likely as the central value of 125.7 GeV?


Confidence Levels

When performing a statistical analysis of an experiment, there are two possible interpretations of probability. We will only deal with the one most common in high-energy physics, called the frequentist interpretation. This interpretation answers questions regarding how likely a certain outcome was given some underlying assumption, such as a physics model. This is quantified by quoting the frequency with which we would obtain the outcome, or a more extreme one, if we repeated the experiment an infinite number of times.

Naturally, we cannot perform any experiment an infinite, or even a large enough, number of times, which is why these frequencies are generally inferred through assumptions on the distribution of the outcomes or by numerical simulation. For each outcome, the frequency with which it, or a more extreme outcome, would occur is called the “p-value” of the outcome.

When an experiment is performed and a particular outcome has occurred, we can use the p-value to infer the “confidence level” (CL) at which the underlying hypothesis can be ruled out. The CL is given by one minus the p-value of the outcome that occurred, i.e., if we have a hypothesis where the outcome from our experiment is among the 5% most extreme ones, we would say that the hypothesis is ruled out at the 100%-5% = 95% CL.
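As a minimal illustration of the two routes to a p-value mentioned above, the sketch below uses a hypothetical counting experiment: the hypothesis predicts a Poisson-distributed background with mean `b = 10` events and we observe `n = 18` (both numbers are made up for illustration), taking "more extreme" to mean "more events", a one-sided choice. The p-value is computed once exactly from the Poisson distribution and once by simulating many repetitions of the experiment; the CL at which the hypothesis is ruled out is then one minus that p-value. The function names are illustrative only.

```python
import math
import random

def poisson_tail_exact(n_obs: int, mean: float) -> float:
    """P(N >= n_obs) for a Poisson distribution with the given mean."""
    below = sum(math.exp(-mean) * mean**k / math.factorial(k) for k in range(n_obs))
    return 1.0 - below

def poisson_sample(mean: float, rng: random.Random) -> int:
    """Draw one Poisson-distributed count (Knuth's multiplication method)."""
    threshold = math.exp(-mean)
    count, product = 0, 1.0
    while True:
        product *= rng.random()
        if product <= threshold:
            return count
        count += 1

def poisson_tail_simulated(n_obs: int, mean: float,
                           n_repeats: int = 100_000, seed: int = 0) -> float:
    """Fraction of simulated repetitions with at least n_obs events."""
    rng = random.Random(seed)
    extreme = sum(1 for _ in range(n_repeats) if poisson_sample(mean, rng) >= n_obs)
    return extreme / n_repeats

b, n = 10.0, 18  # hypothetical background expectation and observed count
p_exact = poisson_tail_exact(n, b)
p_sim = poisson_tail_simulated(n, b)
print(f"p-value: exact {p_exact:.4f}, simulated {p_sim:.4f}")
print(f"hypothesis ruled out at {100 * (1 - p_exact):.2f}% CL")
```

The simulated value agrees with the exact one up to the statistical fluctuation of the finite number of simulated repetitions; in real analyses the simulation route is used when no closed form is available.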

Sigmas

Another commonly encountered nomenclature is that of quoting a number of σ. This is really not much different from the CL introduced above and is simply a way of referring to the CL at which an outcome would be ruled out if the distribution were Gaussian and the outcome were a given number of standard deviations away from the mean. The following list summarises the confidence levels associated with the most common numbers of sigmas:

1σ = 68.27% CL

2σ = 95.45% CL

3σ = 99.73% CL

5σ = 99.999943% CL
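The numbers in this list follow from the Gaussian distribution using the two-sided convention, i.e., counting deviations in both directions from the mean. A small sketch using only Python's standard `math` module reproduces them; `sigma_to_cl` and `sigma_to_p_value` are names introduced here for illustration.

```python
import math

def sigma_to_cl(n_sigma: float) -> float:
    """Two-sided CL for an outcome n_sigma standard deviations from the
    mean of a Gaussian distribution."""
    # P(|X - mu| < n * sigma) = erf(n / sqrt(2)) for a Gaussian X
    return math.erf(n_sigma / math.sqrt(2))

def sigma_to_p_value(n_sigma: float) -> float:
    """Two-sided p-value: probability of an outcome at least this extreme."""
    # erfc avoids the precision loss of computing 1 - erf for large n_sigma
    return math.erfc(n_sigma / math.sqrt(2))

for n in (1, 2, 3, 5):
    print(f"{n} sigma: CL = {100 * sigma_to_cl(n):.6f}%, p = {sigma_to_p_value(n):.3g}")
```

Some analyses quote one-sided p-values instead (for instance when only an excess counts as extreme), which halves the p-value for a given number of σ; the list above uses the two-sided numbers.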

In particle physics, the confidence levels of 3 and 5σ have a special standing. If the hypothesis that a particle does not exist can be ruled out at 3σ, we refer to the outcome as “evidence” for the existence of the particle, while if it can be ruled out at 5σ, we refer to it as a “discovery” of the particle. Therefore, when we say that we have discovered a particle, what is really being implied is that, if the particle did not exist, the experimental outcome would only happen by chance in 0.000057% of the experiments if we repeated the experiment an infinite number of times. This means that if you could perform an experiment every day, it would take you on average about 5000 years to get such an extreme result by chance.
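The 5000-year figure is a back-of-the-envelope estimate: if each experiment independently produces a result this extreme with probability p, the number of experiments until the first such result follows a geometric distribution with mean 1/p. A quick check, assuming one experiment per day and the two-sided p-value that corresponds to the 99.999943% CL in the list above:

```python
import math

# Two-sided p-value of a 5 sigma outcome under a Gaussian assumption
p_5sigma = math.erfc(5 / math.sqrt(2))

# Geometric waiting time: on average 1/p independent daily experiments
# until the first result this extreme occurs by chance
mean_days = 1 / p_5sigma
mean_years = mean_days / 365.25

print(f"p = {p_5sigma:.3g}, expected wait = {mean_years:.0f} years")
```

This gives roughly 4800 years, which rounds to the "about 5000 years" quoted in the text.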

It is worth noting that this interpretation of probability makes no attempt to quantify how likely it is that the hypothesis is true or false; it only refers to how likely the outcomes are if it is true. Many physicists get this wrong too! You may often hear statements such as “we are 99.999943% certain that the new particle exists”. Such statements will generally not be accurate and rather belong to the other interpretation of probability, which we will not cover here.

Error Bars

So what does all of this have to do with the ± we talked about in the beginning when discussing the errors in some parameter? Unless otherwise specified, the quoted errors generally refer to the errors at 1σ, i.e., 68.27% CL. What this means is that all of the values outside the error bars are excluded at the 1σ level or stronger. Consequently, none of the values inside the error bars are excluded at this confidence level, meaning that, for those values of the parameter, the observed outcome was among the 68.27% least extreme ones.

This also means that the error bars are not sharp cutoffs: a value just outside the error bars will generally not be excluded at a level much stronger than 1σ, and a value just inside the error bars will generally be excluded at almost 1σ.
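To see that the edges of an error bar are not sharp cutoffs, one can compute the CL at which individual values are excluded. The sketch below makes the simplifying assumption that the measurement ##m_H = 125.7\pm 0.4## GeV behaves like a single Gaussian with a standard deviation of 0.4 GeV; `exclusion_cl` is a hypothetical helper, not part of any real analysis.

```python
import math

def exclusion_cl(value: float, central: float, sigma: float) -> float:
    """Two-sided CL at which `value` is excluded by a Gaussian measurement
    `central` +/- `sigma` (a simplifying assumption, not a full analysis)."""
    z = abs(value - central) / sigma  # distance in units of standard deviations
    return math.erf(z / math.sqrt(2))

central, sigma = 125.7, 0.4  # m_H = 125.7 +/- 0.4 GeV from the text
for m in (125.7, 126.0, 126.1, 126.2, 126.5):
    print(f"m_H = {m:.1f} GeV excluded at {100 * exclusion_cl(m, central, sigma):.1f}% CL")
```

The exclusion CL grows smoothly with distance from the central value: values just inside and just outside the 126.1 GeV edge are excluded at CLs close to 68%, rather than jumping from "allowed" to "forbidden" at the edge.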

The last observation we will make is that the confidence level of a given interval may not be interpreted as the probability that the parameters are actually in that interval. Again, this is a question that is not treated by frequentist statistics. You may therefore not say that the true value of the parameter will be within the 1σ confidence interval with a probability of 68.27%. The confidence interval is only a means of telling you for which values of the parameter the outcome was not very unlikely. To conclude, let us return to the statement ##m_H = 125.7\pm 0.4## GeV and interpret its meaning:

“If the Higgs mass is between 125.3 and 126.1 GeV, then what we have observed so far is among the 68.27% least extreme results. If the Higgs mass is outside of the interval, it is among the 31.73% most extreme results.”

Professor in theoretical astroparticle physics. He did his thesis on phenomenological neutrino physics and is currently also working with different aspects of dark matter as well as physics beyond the Standard Model. Author of “Mathematical Methods for Physics and Engineering” (see Insight “The Birth of a Textbook”). A member at Physics Forums since 2014.

“They don't differ significantly, true. Taking in the whole picture, I would say there is one invariant mass value, but I would think the statistical uncertainty was significantly larger than 0.2 GeV.”

Well, the calculations show it is just 0.2 GeV (plus 0.1 GeV systematic). It is hard to argue against mathematical formulas.

“The two decay modes don't differ. For ATLAS, the best fit in the photon channel leads to a slightly higher mass; for CMS, the four-lepton channel leads to a slightly higher mass. There is no way two particles would lead to that: they cannot decay exclusively to different channels based on which detector they are in.”

They don't differ significantly, true. Taking in the whole picture, I would say there is one invariant mass value, but I would think the statistical uncertainty was significantly larger than 0.2 GeV. The ATLAS data differ by 2σ (1.5 GeV) and the CMS data differ by about 1σ (0.9 GeV).

The γγ channel gives an average value of 124.6 GeV and the 4ℓ channel gives 125.8 GeV, each with a σ of about 1 GeV. The final quoted uncertainty of 0.2 GeV seems a little optimistic to me. In fact, if one just takes the four values of the mass and computes a standard deviation, one gets 0.4 GeV.
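One reason a combined uncertainty can be smaller than the spread of the individual results: for N independent Gaussian measurements, the inverse-variance weighted mean has uncertainty ##\left(\sum_i 1/\sigma_i^2\right)^{-1/2}##, which for equal uncertainties is ##\sigma/\sqrt{N}##. For example, four values with a standard deviation of 0.4 GeV have a standard error of the mean of ##0.4/\sqrt{4} = 0.2## GeV. The four input values below are hypothetical, not the ATLAS/CMS results, and a real combination is a likelihood fit that also handles correlated systematics. The χ² line checks whether the scatter of the inputs is compatible with their quoted uncertainties.

```python
import math

def weighted_mean(values, sigmas):
    """Inverse-variance weighted mean of independent Gaussian measurements."""
    weights = [1 / s**2 for s in sigmas]
    mean = sum(w * v for w, v in zip(weights, values)) / sum(weights)
    sigma_mean = 1 / math.sqrt(sum(weights))  # uncertainty of the weighted mean
    return mean, sigma_mean

# Hypothetical inputs: four measurements, each with a 0.4 GeV uncertainty
values = [125.2, 124.8, 125.6, 125.0]
sigmas = [0.4, 0.4, 0.4, 0.4]

mean, sigma_mean = weighted_mean(values, sigmas)

# For purely statistical scatter, chi2 is typically about N - 1 = 3
chi2 = sum(((v - mean) / s) ** 2 for v, s in zip(values, sigmas))

print(f"combined: {mean:.2f} +/- {sigma_mean:.2f} GeV, chi2 = {chi2:.2f} for 3 dof")
```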

Figure 4 summarizes it nicely. The best fit combination is in three of the four 68% confidence intervals, and the fourth one is close to it as well. This is what you expect as statistical fluctuation. Maybe a tiny bit more fluctuation than expected, but certainly in the normal range.

The two decay modes don't differ. For ATLAS, the best fit in the photon channel leads to a slightly higher mass; for CMS, the four-lepton channel leads to a slightly higher mass. There is no way two particles would lead to that: they cannot decay exclusively to different channels based on which detector they are in.

I certainly hope no one would claim to have found two different levels if the 68% confidence intervals overlap. That is not a 2σ deviation, by the way; it is only √2 ≈ 1.4σ (assuming Gaussian uncertainties).
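The √2 follows from adding the uncertainties of two independent Gaussian measurements in quadrature. A minimal sketch with hypothetical numbers chosen so that the two 68% intervals, [−1, 1] and [1, 3], just touch: the central values differ by 2, but the uncertainty of the difference is √2, so the difference is only √2 standard deviations. Any actual overlap gives less. `difference_significance` is an illustrative name.

```python
import math

def difference_significance(m1, s1, m2, s2):
    """Significance (in sigma) of the difference of two independent Gaussian
    measurements m1 +/- s1 and m2 +/- s2: uncertainties add in quadrature."""
    return abs(m1 - m2) / math.sqrt(s1**2 + s2**2)

# Hypothetical measurements 0 +/- 1 and 2 +/- 1: the 68% intervals just touch
print(difference_significance(0.0, 1.0, 2.0, 1.0))  # 2 / sqrt(2), about 1.41
```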

“You cannot infer the errors on the Higgs mass simply by looking at the number of events. The spectral shape of the event distribution is crucial as the invariant mass of the photon pair in the ##\gamma\gamma## channel is the Higgs mass.”

Yes, I understand that, but the events extracted from the experiment are spread out over a background with fluctuations of the order of the number of identified events. The paper says several hundred events were recorded with an S/N of 2%. The distribution of the events, in particular how much they are spread out, will make the results of the fit more or less significant.

“In the Higgs to photon channel, the resolution is about 2 GeV, so the experiments see a falling background together with a peak with a width of about 4 GeV (http://www.atlas.ch/photos/atlas_photos/selected-photos/plots/fig_02.png, CMS). The statistical uncertainty is then given by the uncertainty of the fitted peak position, which is smaller than 4 GeV – the more significant the peak the smaller the uncertainty relative to the peak width. No magic involved.”

Yes, I appreciate that. But again, the ATLAS and CMS data agree pretty well for the respective decays, yet the results differ by almost 2σ between the decay modes? And the final estimate is quoted at ±0.2 GeV even though the estimated masses from the two decay modes differ by 1.5 GeV.

If the data were representative of some other phenomenon, say nuclear energy levels observed in two different reactions where only one level was excited in a given reaction, a 2σ difference in the fitted peak values would be large enough to claim, after properly accounting for systematic errors, that they were probably two different levels.

Admittedly, their data analysis is complex and I do not fully understand it, but based on my own experience, albeit not with as complex an experiment, my eyebrows remain raised.

It might be instructive to have an Insights presentation on the determination of a net statistical error for quantities calculated from nonlinear fitting of data.

Even 1 event would allow a precise mass estimate if (a) there would be no background and (b) the detector resolution would be perfect.

In the Higgs to photon channel, the resolution is about 2 GeV, so the experiments see a falling background together with a peak with a width of about 4 GeV (http://www.atlas.ch/photos/atlas_photos/selected-photos/plots/fig_02.png, CMS). The statistical uncertainty is then given by the uncertainty of the fitted peak position, which is smaller than 4 GeV – the more significant the peak the smaller the uncertainty relative to the peak width. No magic involved.
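The point that the fitted peak position is much better determined than the peak width can be illustrated with a toy simulation. It is heavily idealized: Gaussian signal events, treating the 2 GeV resolution as the Gaussian standard deviation, a hypothetical 300 signal events at a hypothetical true mass of 125 GeV, no background at all, and the sample mean standing in for a proper fit. Under these assumptions the spread of the estimated peak position comes out close to resolution/√N; background makes the real uncertainty larger. `peak_position_spread` is an illustrative name.

```python
import math
import random
import statistics

def peak_position_spread(n_events, resolution, true_mass, n_pseudo=2000, seed=1):
    """Spread of the estimated peak position over many pseudo-experiments,
    assuming pure Gaussian signal with no background (an idealization)."""
    rng = random.Random(seed)
    estimates = []
    for _ in range(n_pseudo):
        events = [rng.gauss(true_mass, resolution) for _ in range(n_events)]
        estimates.append(statistics.fmean(events))  # sample mean as peak estimate
    return statistics.stdev(estimates)

resolution, n_events = 2.0, 300  # hypothetical: 2 GeV resolution, 300 signal events
spread = peak_position_spread(n_events, resolution, 125.0)
print(f"simulated spread:   {spread:.3f} GeV")
print(f"resolution/sqrt(N): {resolution / math.sqrt(n_events):.3f} GeV")
```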

Getting the systematics down to the level of 0.1% is much harder.

You cannot infer the errors on the Higgs mass simply by looking at the number of events. The spectral shape of the event distribution is crucial as the invariant mass of the photon pair in the ##\gamma\gamma## channel is the Higgs mass. Of course, more events give you better resolution for where the peak actually is and if you had a horrible signal-to-background you would not see anything, but it does not translate by simple event counts.

Yes, I had a mental slip there, but the SM still guided them in selecting events by predicting the branching fractions. The final statistical error is 0.16%, which for a statistically dominated study seems outstanding. I do not intend the continued comments below to be a micturating contest. I have done some extractions of parameters from nuclear spectra by fitting an expected distribution. I cannot easily, if at all, tease out the details of their analysis, so bear with me.

The paper says:

"The H → γγ channel is characterized by a narrow resonant signal peak containing several hundred events per experiment above a large falling continuum background. The overall signal-to-background ratio is a few percent."

That's, say, 300 total events in the peak on a background with a 1σ statistical variation of about 100 events.

It also says:

"The H → ZZ → 4ℓ channel yields only a few tens of signal events per experiment, but has very little background, resulting in a signal-to-background ratio larger than 1."

And this is about 30 events in the "peak" on a background with a 1σ statistical variation of about 3 events.
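The counting arithmetic in the last few paragraphs can be made explicit. The sketch assumes S/B = 3% for the γγ channel (the paper only says "a few percent") and S/B = 3 for the four-lepton channel (the paper only says "larger than 1"); `counting_summary` is a hypothetical helper. S/√B is only a crude counting measure of how far the signal stands above Poisson fluctuations of the background: as pointed out elsewhere in this thread, the mass uncertainty also depends on the spectral shape, not just on event counts.

```python
import math

def counting_summary(signal, s_over_b):
    """Background size, its Poisson fluctuation, and the naive S/sqrt(B)
    for a given signal count and signal-to-background ratio."""
    background = signal / s_over_b
    sqrt_b = math.sqrt(background)  # 1 sigma Poisson fluctuation of the background
    return background, sqrt_b, signal / sqrt_b

# Assumed inputs: ~300 gamma-gamma events at S/B = 3%; ~30 four-lepton events at S/B = 3
for name, s, r in (("gamma gamma", 300, 0.03), ("4 lepton", 30, 3.0)):
    b, sqrt_b, z = counting_summary(s, r)
    print(f"{name}: B = {b:.0f}, sqrt(B) = {sqrt_b:.1f}, S/sqrt(B) = {z:.1f}")
```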

I do not know how the events are distributed, i.e., how many channels are used to define an event, but I would expect the scatter in a channel with these numbers of total events to be quite significant, translating to a rather large possible variation of the fitting parameters.

Interesting, at least to me, is the fact that the ATLAS and CMS data agree pretty well for the respective decays, yet the results differ by almost 2σ between the decay modes? And the final estimate is quoted with an uncertainty of ± 0.2 GeV, but the 68% CL in Fig. 4 shows ± 0.3 GeV, even though the estimated masses from the two decay modes differ by 1.5 GeV.

Maybe I am just quibbling about a few tenths of a GeV in the quoted uncertainty, which is probably not significant. But this type of statistical analysis is much more complex than what the biological sciences typically need for population studies.

“data produce mostly non-overlapping results”

If we assume that the global best-fit value is the actual Higgs mass, then you would expect the 68% regions to include it 68% of the time. In this case, the best fit is inside 3 out of 4 of the confidence regions, i.e., 75%. Not exactly 68%, but that is difficult to get unless you perform a very large number of experiments. Remember what I said in the Insight text itself: if you draw a region at the x% CL, then it will include the actual value in x% of the experiments and it will not include it in (100 − x)% of the experiments. Also, 68% is not a very high confidence level. With that data at hand, I would not suggest raising an eyebrow.
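The coverage statement above can be checked with a toy simulation. The sketch assumes Gaussian measurements with a known σ (values borrowed from the ##m_H = 125.7\pm 0.4## GeV example) and, for the second part, treats the four confidence regions as independent and the combined best fit as the true value; both are simplifications, since the best fit is itself derived from those measurements. `coverage` and `binomial_probs` are illustrative names.

```python
import random
from math import comb

def coverage(true_value, sigma, n_experiments=100_000, seed=2):
    """Fraction of simulated experiments whose interval x +/- sigma contains
    the true value, for Gaussian measurements x ~ N(true_value, sigma)."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_experiments):
        x = rng.gauss(true_value, sigma)  # one simulated measurement
        if x - sigma <= true_value <= x + sigma:
            hits += 1
    return hits / n_experiments

def binomial_probs(n, p):
    """Probability that exactly k of n independent intervals cover, k = 0..n."""
    return [comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n + 1)]

frac = coverage(125.7, 0.4)
print(f"coverage of the 1 sigma interval: {frac:.4f}")
for k, prob in enumerate(binomial_probs(4, 0.6827)):
    print(f"{k} of 4 regions contain the true value: {prob:.3f}")
```

With only four regions, the fraction that contains the true value can only be 0, 25, 50, 75 or 100%, and under these assumptions 3 out of 4 is the single most likely outcome.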

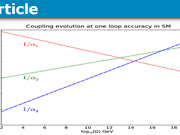

“I wonder what value (and uncertainty) they would have stated if they did not know what Higgs boson mass the Standard Model predicted.”

The Standard Model does not predict the Higgs mass. It is a free parameter in the theory.

One striking difference between the use of statistics in particle physics and in the biological sciences is that in biological experiments events seem easier to identify, i.e., an event satisfies some relatively easily stated criteria, so it goes into one group or another.

In the Higgs boson paper (which I only skimmed), I find the identification of events (invariant mass) very complex. The only observation that raises my eyebrow is Fig. 4, which shows a summary of the data and analysis, where the four sources of data produce mostly non-overlapping results at the 68% CL, with the combined result and its 68% CL encompassing only extreme values of the individual sources. It seems optimistic, but I am certainly not qualified to judge their methods.

I wonder what value (and uncertainty) they would have stated if they did not know what Higgs boson mass the Standard Model predicted.

Nice post!

“Unlike the biological sciences, physics experiments are not usually dominated by statistical uncertainties.”

Most analyses in particle physics are limited by statistics. The Higgs mass measurement is one of them: the statistical uncertainty is two times the systematic uncertainty in the most recent combination.

It might be instructive to show the type of data that is used to get the result ##m_H = 125.7\pm 0.4## GeV. Unlike the biological sciences, physics experiments are not usually dominated by statistical uncertainties.

“When looking into it, it turned out that the statistical material available to the seminal paper was not sufficient to even claim a positive correlation within the linear model used (and on top of it the model provided an awful best fit, the one given with four significant digits).”

Unfortunately, that is quite common. I review a lot of papers with really basic statistical mistakes. It probably did not even occur to the authors of the seminal paper that they should put a confidence interval around their fit parameter.

I think that medical researchers would have an easier time understanding Bayesian statistics in terms of what they mean, but it will be quite some time before those methods are developed enough to be "routine" for them to use.

“I agree 100%. I work mostly in the medical literature. I think that they are less careful about such things, and it always irritates me.”

I do understand what you mean. My mother is a medical doctor and was writing a paper at the beginning of the year. Their experimental result was in conflict with a seminal paper in the field, which was used to advise patients based on a claimed linear correlation between two quantities (given with four significant digits!), so she asked for some advice. When looking into it, it turned out that the statistical material available to the seminal paper was not sufficient to even claim a positive correlation within the linear model used (and on top of it the model provided an awful best fit, the one given with four significant digits). This is what happens when you just look at your data and want to see a line.

“Of course, it is up to the authors to make sure that what is shown cannot be misinterpreted.”

I agree 100%. I work mostly in the medical literature. I think that they are less careful about such things, and it always irritates me.

“Sometimes a plot with an error bar just shows a mean and standard deviation. Would you still interpret those as confidence intervals rather than descriptive statistics?”

No. If you have a family of measurements (essentially repeated experiments), it is something different. Of course, it is up to the authors to make sure that what is shown cannot be misinterpreted. That said, from the data given and a model of how the data relate to the model parameters, you could do parameter estimation and obtain confidence intervals for the parameters. With such a collection of measurements, the descriptive statistic, i.e., the standard deviation, will generally not encompass any systematic error made in the measurement. In HEP, you most often do not have several measurements of the same quantity; rather, you are concerned with binned (or unbinned) energy spectra (i.e., a number of events per bin, or a number of events with associated energies). Error bars in such plots will generally contain both the statistical uncertainty and any systematic uncertainties.
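To make the distinction between descriptive statistics and uncertainties concrete: the standard deviation describes the spread of individual measurements, while the statistical uncertainty of their mean (the standard error) shrinks as ##1/\sqrt{n}##. The measurements below are hypothetical, and neither quantity includes systematic errors, as noted above.

```python
import math
import statistics

# Hypothetical repeated measurements of the same quantity (arbitrary units)
measurements = [9.8, 10.1, 10.3, 9.9, 10.0, 10.2, 9.7, 10.0]

mean = statistics.fmean(measurements)
sd = statistics.stdev(measurements)       # descriptive: spread of single measurements
sem = sd / math.sqrt(len(measurements))   # statistical uncertainty of the mean

print(f"mean = {mean:.3f}, SD = {sd:.3f}, standard error of the mean = {sem:.3f}")
```

For a reasonably large number of measurements, mean ± standard error is approximately a 68% confidence interval for the underlying mean (for very small samples a Student t distribution is more appropriate), whereas a ± SD bar describes the data rather than the uncertainty of the mean.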

Good overview of confidence intervals.

Sometimes a plot with an error bar just shows a mean and standard deviation. Would you still interpret those as confidence intervals rather than descriptive statistics?

“I think you should clarify the point that there are two distinct types of confidence intervals in frequentist statistics.”

While I understand your concern, my main aim was to give as short an overview as possible of what people should take away from a number quoted from a physics experiment, not to be strictly correct in a mathematical statistics sense. Far too many times I have seen people drawing the conclusion that 0 is impossible because the experiment said 1.1±1.0.

Nice first Insight, Oro!

I think you should clarify the point that there are two distinct types of confidence intervals in frequentist statistics. The kind of confidence interval treated by statistical theory is an interval defined with respect to a fixed but unknown population parameter, such as the unknown population mean. This type of interval can have a known numerical width, but it does not have known numerical endpoints since the population parameter is unknown. For this type of interval, we can state a probability (e.g. 0.6827) that the true population parameter is within it; we just don't know where the interval is!

The second type of confidence interval is an interval stated with respect to an observed or estimated value of a population parameter, such as 125.7. (Some statistics textbooks say that it is "an abuse of language" to call such an interval a "confidence interval".)

As you point out, the interpretation of a "confidence interval" with numerical endpoints is best done by putting the scenario for statistical confidence intervals out of one's mind and replacing it with the scenario for hypothesis testing.