Scientific Inference: Logical Induction Ain’t Logical


Three million years ago, the grandfathers of our genus used sharpened stones to chop wood and cut bone. Today, surgeons use fiber-optic-guided lasers to clear microblockages in the heart. Within our own lives, we’ve gone from burning our mouths on hot soup as children to cautiously blowing on the first spoonful as adults. These are examples of learning, of individuals and species adapting their behaviors and modifying their worlds in response to life experience. That we have learned is undeniable, but nearly 300 years ago David Hume argued convincingly that this process of learning is not logically founded, that no formal proof of its effectiveness exists. What then of humanity’s most prized system of inquiry, the scientific method? Most of us are convinced that astronomy gets closer to the true nature of things than astrology, but Hume’s argument not only blurs the distinction, it claims that no such comparison can be made. To the practicing scientist who is primarily concerned with collecting data, publishing papers, going to great locales for conferences, and so on, these are mere academic concerns to trouble only philosophers; after all, scientists are just driving the car, they don’t care about what’s buzzing under the hood. But Hume’s challenge is a deep stab at the heart of the scientific enterprise that flies in the face of basic experience, one that has resisted centuries of concerted effort at finding a resolution. Even if we don’t care about the formalities, in exploring this problem we’ll come to better understand the practice of science and how we come to know stuff.

Part 1. “Logical induction ain’t logical” – David Hume

Most of us are familiar with logical arguments like:

1. All priests are men.

2. Father Jim is a priest.

_____________________

therefore Father Jim is a man.

This is an example of a logical deduction: the truth of the conclusion is guaranteed by the truth of the premises so long as we follow the rules of deductive logic. The thing about deduction is that the conclusions never tell us anything new, since they are always fully entailed, if only implicitly, by the premises. In the example above, the fact that Father Jim is a man is already contained within the two premises—the conclusion merely makes the statement explicit. Because deductions never tell us anything new, they cannot increase, or amplify, our knowledge—they are said to be non-ampliative. How is it that we know the premises are true? Because, among other things, being a man is a necessary requirement for being a priest—this deduction is true by definition.

What about this:

The sun has risen on Earth

every day for the past 4.5 billion years.

_________________________

therefore the sun will rise tomorrow.

Here the premise is true (it is based on historical knowledge), but the conclusion, which refers to the future, does not follow. Regardless of how certain we feel, the conclusion is not guaranteed because it contains information not provided in the premises. This kind of inference, in which the premises do not necessarily entail the conclusion, is called induction. You can think of it as the less-certain cousin of deduction. The above conclusion is tantamount to saying that the Sun will rise every day, because there’s nothing in the premises that singles out “tomorrow” as particularly special. This is the essence of induction: from the observation of individual particular cases we project universal generalizations. In the case of the rising sun, we have many, many individual observations, and so we might suspect that our future projection is solid, even though it is not logically guaranteed.

But what about this:

5 jellybeans, all of which have been black,

have been selected from a jar containing 100 jellybeans.

________________________________

therefore all jellybeans in the jar are black.

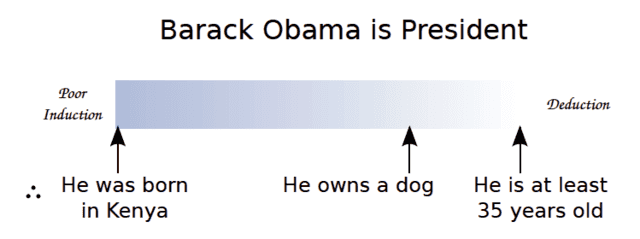

We feel less sure of this conclusion because there seem to be too few observed cases to speculate with much confidence about the larger collection of jellybeans. Inductive reasoning spans the spectrum of inference: from deduction (truth by logical necessity), to the tenuous assertion, to the most egregious non-sequiturs (see Figure 1). Ideally, our inferences should be inductively strong, as close to the deductive end of the scale as possible.

Fig 1. The sliding scale of induction, from poor inductive reasoning to deduction.
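To put a rough number on why five black jellybeans feel like thin evidence, here is a minimal Python sketch. It assumes the beans are drawn at random without replacement, which the example does not state, and the function name `prob_all_black_sample` is purely illustrative. It computes how likely an all-black sample of 5 is for several hypothetical jar compositions.

```python
from math import comb


def prob_all_black_sample(n_black: int, jar_size: int = 100, draws: int = 5) -> float:
    """Probability that `draws` beans taken at random without replacement
    are all black, given `n_black` black beans in a jar of `jar_size`.

    Assumption (not stated in the text): every bean is equally likely
    to be drawn.
    """
    return comb(n_black, draws) / comb(jar_size, draws)


if __name__ == "__main__":
    # Hypothetical jar compositions, chosen only for illustration.
    for n_black in (100, 95, 80, 50, 20):
        p = prob_all_black_sample(n_black)
        print(f"{n_black:3d} black beans in the jar -> P(5 black draws) = {p:.4f}")
```

Under these assumptions, jars that are far from all black can still produce the observed sample with non-negligible probability, which is one way of seeing why the conclusion sits toward the weak end of the scale.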

Strong inductive arguments are ones in which the conclusions follow from the premises with high probability, like the case of the rising sun. Sometimes the conclusion forms the basis of a hypothesis: a more general statement or principle that includes the premises as particular cases. This generality is achieved through inductive reasoning by extrapolating particular observations across time, space, or jellybeans in jars. The problem is that we have no way of knowing how reliable such an extrapolation is.

Consider the following:

Induction has worked reasonably well in the past

________________________________________

therefore induction will work reasonably well in the future.

This is an inductive argument concerning induction itself. Assuming the premise is true, we expect the conclusion, that induction will continue to work reasonably well in the future, to be true. Seem circular? It is, badly so. This is the problem of induction. Hume nailed it in 1748 [1]:

“…there can be no demonstrative arguments to prove, that those instances, of which we have no experience, resemble those, of which we have had experience…even after the observation of the frequent or constant conjunction of objects, we have no reason to draw any inference concerning any object beyond those of which we have had experience.”

Hume’s challenge is that the success of induction, arguably the method of establishing natural law since the Enlightenment, cannot be formally demonstrated. Philosopher Willard V. Quine offers a 20th-century view: “The brute irrationality of our sense of similarity, its irrelevance to anything in logic and mathematics, offers little reason to expect that this sense is somehow in tune with the world…Why induction should be trusted, apart from special cases such as the ostensive learning of words, is the perennial philosophical problem of induction.” [5]

The solution is at least intuitively obvious: there must be some fundamental uniformity within nature on which to anchor our extrapolations. But there are so many ways that nature might appear uniform: so many true regularities, so many perceived but unrelated correlations, so many random occurrences. Unless the requisite uniformity exists a priori (a likely untenable proposition, regardless of what Herr Kant might think), it must be established empirically, a feat that necessarily involves induction, taking us right back to the start. There have been many contemporary attempts to solve Hume’s problem (see, for example, [2] and [3]), but all are inextricably self-referential and question-begging; all ultimately fail. This is not surprising, because there can be no resolution to the problem in general. How do we know whether an inductive argument is likely correct? We don’t, and we can’t, because the conclusion is neither based on experience nor a logical consequence of it. Following philosopher Nelson Goodman we adopt the view that Hume’s problem is “not a problem of demonstration, but a problem of defining the difference between valid and invalid predictions” [4] (emphasis mine). That is, the method of induction must be taken as given: the challenge is the more practical one of knowing when we can and cannot apply it.

To illustrate this point, say a die is rolled one million times with each face coming up very nearly 1/6 of the time. We are pretty confident that the die is fair, and if asked to bet on whether the next two rolls will both be sixes, we’d put the odds at 35-to-1 against (a probability of 1/36). And if the existence of the gambling industry is any proof, we’d be justified in this judgment. The roll of the die is a relatively simple phenomenon with readily recognizable symmetry, so we can apply induction with high confidence. Meanwhile, Hume’s concern seems quite immaterial, amounting to the warning that the universe might one day change, abruptly, in such a way that those odds shift, that the die roll no longer conforms to the same long-running averages. This is obviously impossible to guard against; the problem of induction is not about our troubles in dealing with a potentially arbitrary and capricious universe. The problem is instead one of how to isolate the regularities relevant to the system under investigation in the face of incomplete knowledge. We cannot prescribe rules for how to do this in general (echoing Goodman, the problem is not one of demonstration), but the validity of individual hypotheses about the world can be decided from within the system of inductive inference.
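As a quick check on the betting arithmetic, the following Python sketch works out the chance of two sixes in a row and the corresponding odds. It assumes the die is fair and the rolls are independent; the helper name `odds_against` is illustrative only.

```python
from fractions import Fraction


def odds_against(p: Fraction) -> Fraction:
    """Odds against an event of probability p, i.e. (1 - p) / p."""
    return (1 - p) / p


# Assumption: a fair die, so each face has probability 1/6.
p_six = Fraction(1, 6)

# Assumption: the two rolls are independent, so the probabilities multiply.
p_two_sixes = p_six * p_six

print(p_two_sixes)                # 1/36
print(odds_against(p_two_sixes))  # 35
```

A probability of 1/36 corresponds to odds of 35 to 1 against. Note that the fairness assumption is itself the inductive step: it is read off the million observed rolls, not proved.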

Because the uniformity emerges clearly after many trials, the roll of the die is a simple and notable success story. Not all problems are this easy. Suppose we are looking for a mathematical expression governing a certain physical process. As data we have the numbers [itex]1, 3, 5, 7, 9, \cdots[/itex]. The sequence is reproduced by the expression [itex]x_n = 2n+1[/itex], starting from [itex]n=0[/itex], and our prediction for the [itex]6^{\rm th}[/itex] number is [itex]x_5 = 11[/itex]. Meanwhile, a rival team of researchers advances the prediction [itex]x_5 = -3829[/itex], based on the relation

\begin{equation}

x_n = (1-2n-1)(3-2n-1)(5-2n-1)(7-2n-1)(9-2n-1)+2n+1.

\end{equation}

Both sequences describe the regularity of the data equally well, but they make wildly different extrapolations beyond them. We can think of the alternative sequences as two competing hypotheses, and the challenge is to select the one that best generalizes the given data.
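The two rival rules can be compared directly. This is a small Python sketch; the function names `linear_rule` and `rival_rule` are illustrative, and the rival expression is read with every dash taken as a minus sign. It confirms that both reproduce the five data points and then evaluates each at [itex]n=5[/itex].

```python
def linear_rule(n: int) -> int:
    """The simple hypothesis x_n = 2n + 1."""
    return 2 * n + 1


def rival_rule(n: int) -> int:
    """The rival hypothesis: a product of five factors (k - 2n - 1),
    for k = 1, 3, 5, 7, 9, plus the linear term 2n + 1."""
    product = 1
    for k in (1, 3, 5, 7, 9):
        product *= k - 2 * n - 1
    return product + 2 * n + 1


observed = [1, 3, 5, 7, 9]

# Both hypotheses agree with every observation made so far (n = 0..4) ...
assert [linear_rule(n) for n in range(5)] == observed
assert [rival_rule(n) for n in range(5)] == observed

# ... but they diverge on the first unobserved case.
print(linear_rule(5), rival_rule(5))  # 11 -3829
```

For each of [itex]n = 0, \ldots, 4[/itex] exactly one factor of the product vanishes, so the rival rule collapses to [itex]2n+1[/itex] on the data. At [itex]n=5[/itex] no factor vanishes, and the product [itex](-10)(-8)(-6)(-4)(-2) = -3840[/itex] dominates.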

Nelson Goodman found an insightful way of articulating this problem. All emeralds that have ever been observed have been green. Suppose there is some other disposition of color called “grue”, such that something that is grue is green before, say, July 1, 2100, and blue afterward. All emeralds that have ever been observed have then also been grue. Now, which color do we predict for yet-to-be-observed emeralds? If we project green we will be correct, whereas a projection of grue will throw us a curveball: it will not support a strong inductive inference once the calendar ticks past July 1, 2100. This example is deliberately artificial, but Goodman’s point is an important one: there is no clear way to differentiate projectable properties, like green, from non-projectable ones, like grue. Goodman suggests past experience as a guide: “Plainly, ‘green’, as a veteran of earlier and many more projections than ‘grue’, has the more impressive biography. The predicate ‘green’, we may say, is much better entrenched than the predicate ‘grue’.” [4] So while there is no hard rule for distinguishing projectable from non-projectable characteristics, we expect that our acquaintance with the world should sharpen our ability to tease out the essential characteristics of the system under study. This guide is not foolproof, though, and not always helpful: for example, experience doesn’t suggest which expression for [itex]x_n[/itex] above is projectable. The problem of projectability is pervasive and formidable: Goodman named it the “new riddle of induction”.
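The grue example can be mimicked in a few lines of Python. This is only a sketch of the simplified definition used here (green before the cutoff date, blue afterward). The observation dates are hypothetical, and the functions `green` and `grue` are illustrative names rather than anything from Goodman.

```python
from datetime import date

# Cutoff date taken from the example in the text.
CUTOFF = date(2100, 7, 1)


def green(observed_on: date) -> str:
    """Color predicted for an emerald under the 'green' hypothesis."""
    return "green"


def grue(observed_on: date) -> str:
    """Color predicted under the 'grue' hypothesis:
    green before the cutoff, blue from the cutoff onward."""
    return "green" if observed_on < CUTOFF else "blue"


# Hypothetical observation dates, all before the cutoff.
past_observations = [date(1850, 1, 1), date(1999, 12, 31), date(2024, 6, 15)]

# Every past observation fits both hypotheses equally well.
assert all(green(d) == grue(d) for d in past_observations)

# The hypotheses only come apart on a case nobody has observed yet.
future = date(2101, 1, 1)
print(green(future), grue(future))  # green blue
```

No amount of data collected before the cutoff can separate the two predicates, which is why Goodman looks to something outside the data, entrenchment, to prefer one over the other.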

The struggle against Hume’s problem of induction has moved from the philosophical high ground to the front lines of applied science. We have passed on solving the meta-problem of induction as a method, focusing instead on our ability to discern projectable patterns in the data logbook in support of strong inductive inference. In science, we are given an incomplete glimpse of a small part of the world, and it’s easy to suppose that induction, however imperfect, is the only way to complete the picture, but this view is not without its challengers. Let’s put induction on hold for a while, and investigate some other approaches to the problem of scientific inference.

Continue to: Part 2. “We don’t need no stinkin’ induction” – Sir Karl Popper

References

[1] An Enquiry Concerning Human Understanding, David Hume (1748).

[2] Choice & Chance: An Introduction to Inductive Logic, Brian Skyrms, Dickenson Pub. Co. (1986).

[3] The Foundations of Scientific Inference, Wesley Salmon, Univ. of Pittsburgh Press (1979).

[4] Fact, Fiction, and Forecast, Nelson Goodman, Harvard University Press (1954).

[5] Natural Kinds, W. V. Quine, in Ontological Relativity & Other Essays, Columbia University Press (1969).

After a brief stint as a cosmologist, I wound up at the interface of data science and cybersecurity, thinking about ways of applying machine learning and big data analytics to detect cyber attacks. I still enjoy thinking and learning about the universe, and Physics Forums has been a great way to stay engaged. I like to read and write about science, computers, and sometimes, against my better judgment, philosophy. I like beer, cats, books, and one spectacular woman who puts up with my tomfoolery.

I really thank the author for an excellent read.

Induction IMO degenerates to solipsism and then you’re just having a boring talk to yourself.

I am happy to just assume we all share a common external reality that we can study reliably. The rest, not so much.

And part two is very good. Well worth a careful read.

Part two has been published

[URL]https://www.physicsforums.com/insights/scientific-inference-come-know-stuff-ii/[/URL]

[QUOTE=”atyy, post: 5455062, member: 123698″]Is the principle of equivalence a form of induction, ie. the local laws of physics are everywhere the same?[/QUOTE]

Someone more of an expert in GR may adjust my answer, but there are some specific assertions in the principle of equivalence beyond the local laws of physics being the same everywhere. These assertions could potentially be falsified:

1. Gravitational mass is equal to inertial mass.

2. There is no experiment that can possibly distinguish a uniform acceleration from a uniform gravitational field.

[QUOTE=”atyy, post: 5455062, member: 123698″]Is the principle of equivalence a form of induction, ie. the local laws of physics are everywhere the same?[/QUOTE]

Any physical law that is a generalization through time or space (or both) of individual observed instances is an induction. So, yeah, the equivalence principle is a great example!

Science cannot really prove the constancy of the laws of nature. Science assumes the constancy of natural law.

Stephen Jay Gould put it this way:

Begin Exact Quote (Gould 1984, p. 11):

METHODOLOGICAL PRESUPPOSITIONS ACCEPTED BY ALL SCIENTISTS

1) The Uniformity of law – Natural laws are invariant in space and time. John Stuart Mill (1881) argued that such a postulate of uniformity must be invoked if we are to have any confidence in the validity of inductive inference; for if laws change, then an hypothesis about cause and effect gains no support from repeated observations – the law may alter the next time and yield a different result. We cannot “prove” the assumption of invariant laws; we cannot even venture forth into the world to gather empirical evidence for it. It is an a priori methodological assumption made in order to practice science; it is a warrant for inductive inference (Gould, 1965).

End Exact Quote (Gould 1984, p. 11)

Gould, Stephen Jay. “Toward the vindication of punctuational change.” [I]Catastrophes and earth history[/I] (1984): 9-16.

also see:

Gould, Stephen Jay. “Is uniformitarianism necessary?” [I]American Journal of Science[/I] 263.3 (1965): 223-228.

Gould, Stephen Jay. [I]Time’s arrow, time’s cycle: Myth and metaphor in the discovery of geological time[/I]. Harvard University Press, 1987.

Is the principle of equivalence a form of induction, ie. the local laws of physics are everywhere the same?

[QUOTE=”bapowell, post: 5454346, member: 217460″]Exactly. And this is where the problem of induction comes in: how are you able to know whether your knowledge will continue to be useful? Any expectation that it will be useful for some period of time presumes certain regularities about the universe. The challenge is identifying what these regularities are in every instance so that we can bolster our inductive reasoning.[/QUOTE]

It is fascinating to me that my favorite definition of energy ([URL=’http://physics.stackexchange.com/questions/59719/whats-the-real-fundamental-definition-of-energy’]Noetherian energy[/URL]) relies on the assumption of such regularities in the universe. This is so true, that to some extent modern scientific models are based on this underlying Lagrangian path. In other words, since the real (as in true) path’s derivative is (classically) defined as zero, any changes would show up as energy changes in the model.

Thus, to some extent, irregularities do exist as energy changing form. Of course we observe these changes and account for them in our models. Whenever we find a new change, we account for it as a new form of energy. Thus we change our model to match our changing universe.

[QUOTE=”bapowell, post: 5454346, member: 217460″]Any expectation that it will be useful for some period of time presumes certain regularities about the universe. The challenge is identifying what these regularities are in every instance so that we can bolster our inductive reasoning.[/QUOTE]

Hi Brian:

Part of USEFUL knowledge is our estimates about the regularities continuing to be regular. Logically this creates circularity concerning the truth, but with respect to usefulness this is perfectly reasonable.

Regards,

Buzz

[QUOTE=”Buzz Bloom, post: 5454189, member: 547865″] USEFUL knowledge comes with an estimate of how likely it is that the knowledge will continue to be useful for some useful period of time.

[/QUOTE]

Exactly. And this is where the problem of induction comes in: how are you able to know whether your knowledge will continue to be useful? Any expectation that it will be useful for some period of time presumes certain regularities about the universe. The challenge is identifying what these regularities are in every instance so that we can bolster our inductive reasoning.

[QUOTE=”PeterDonis, post: 5454207, member: 197831″]I don’t see the difference between a map and the subjective terrain; a map [I]is[/I] the “subjective terrain”–the representation of the terrain that you use as a guide.[/QUOTE]

Hi Peter:

I am not confident that the following explanation will be generally helpful, but it works for me.

OBJECTIVE reality is not knowable. SUBJECTIVE reality is the only reality that is knowable, and it is therefore USEFUL to consider it to be THE REALITY. Alternatively, it is also useful to go with the (existential ?) concept that THE REALITY is the link between subjective and objective reality. TRUE knowledge is not REALITY at all; it is ONLY a MAP.

Regards,

Buzz

[QUOTE=”Buzz Bloom, post: 5454200, member: 547865″]TRUE knowledge is only a map, but USEFUL knowledge IS the SUBJECTIVE terrain.[/QUOTE]

I don’t see the difference between a map and the subjective terrain; a map [I]is[/I] the “subjective terrain”–the representation of the terrain that you use as a guide.

[QUOTE=”PeterDonis, post: 5454194, member: 197831″]But these provable truths are only provable in the context of a particular set of abstract axioms, which might or might not actually apply to the real world.[/QUOTE]

Hi Peter:

Agreed. That is why TRUE knowledge is only a map, but USEFUL knowledge IS the SUBJECTIVE terrain. Both forms of knowledge are approximations of the OBJECTIVE terrain.

Regards,

Buzz

[QUOTE=”Buzz Bloom, post: 5454189, member: 547865″]TRUE knowledge (based on provable truth like pure math)[/QUOTE]

But these provable truths are only provable in the context of a particular set of abstract axioms, which might or might not actually apply to the real world. That is why Bertrand Russell (IIRC) said that to the degree that the statements of mathematics refer to reality, they are not certain, and to the degree that they are certain, they do not refer to reality.

[QUOTE=”bapowell, post: 5453312, member: 217460″]The problem is that confidence limits, and in fact all statistical inference, are based on the premise that induction works:[/QUOTE]

What works for me is the concept that a distinction exists between TRUE knowledge (based on provable truth like pure math) and USEFUL knowledge (based only on practical usefulness like the rest of science). USEFUL knowledge comes with an estimate of how likely it is that the knowledge will continue to be useful for some useful period of time.

Regards,

Buzz

[QUOTE=”Dr. Courtney, post: 5453322, member: 117790″]

So in pure math, I am comfortable with the map being the territory. But in natural science, the map is not the territory. To me, this is the fundamental distinction between pure math and natural science, and it tends to be under appreciated by students at most levels.[/QUOTE]

Even in pure math the answer isn’t clear to me. Gödel proved a disturbing theorem (the incompleteness theorem) which approaches this point tangentially. It is possible even some theorems in math depend on viewpoint, thus sense data.

But since the incompleteness theorem doesn’t apply directly, there’s the possibility it doesn’t apply, or more disturbingly only applies from some points of view.

I wish I was smart enough to understand rather than guess.

[QUOTE=”Dr. Courtney, post: 5453322, member: 117790″]

But I view the natural sciences like Hume: repeatable experiment alone is the ultimate arbiter, knowledge without sensory perception (experiment) is impossible, and since all measurement has uncertainty, so does all theory.

[/QUOTE]

Kant would agree with you. He held that knowledge was either analytic (true by logical necessity) or synthetic (knowable only through experience). Scientific knowledge is in the latter category.

[QUOTE=”Jeff Rosenbury, post: 5453292, member: 476307″]Kant (1724-1804) argued that a priori knowledge exists. That is that some things can be known before [without] reference to our senses. Hume (1711-1776) argued that only a posteriori knowledge exists; knowledge is gained exclusively after perception through senses.

Hume promoted a more pure form of empiricism where passion ruled while Kant believed rational thought gave form to our observations.

So Hume would point out that any “gut feeling” is just that. Perhaps with more observation, the confidence limit could approach zero, but it couldn’t ever reach zero.

Kant might argue that with proper math, the limit could be made arbitrarily small. But the key would be well reasoned math and a good model.

So there’s an open question about the relationship between “the map and the territory”. Can a model be made so precise that it becomes the territory? Or in more modern terms, do we live in The Matrix? In a digital universe, the two can be identical. In a chaotic universe (in the mathematical sense), they cannot be.

IMO, science today should assume the least restrictive option, that the map is not the territory. Models represent reality, but are not reality. Perhaps that is not true, but assuming the opposite could lead to errors where we assume a poor map is better than it is.

Still, this borders on matters of faith, and people’s basic motivations in pursuing science. I would not want to discourage others from seeking their own truths.[/QUOTE]

Well said, and well explained. Thanks.

I tend to view pure math like Kant: given Euclid’s axioms, the whole of Euclidean geometry can be produced with certainty without regard for sensory perception.

But I view the natural sciences like Hume: repeatable experiment alone is the ultimate arbiter, knowledge without sensory perception (experiment) is impossible, and since all measurement has uncertainty, so does all theory.

So in pure math, I am comfortable with the map being the territory. But in natural science, the map is not the territory. To me, this is the fundamental distinction between pure math and natural science, and it tends to be under appreciated by students at most levels.

[QUOTE=”Buzz Bloom, post: 5453177, member: 547865″]Hi Brian:

I am not sure I understand what the above means. When science gives a calculated confidence level with a prediction/measurement, isn’t that the same thing as knowing the degree of imperfection? I do not know Hume’s history, so an explanation might be that he was unaware of probability theory.

[/QUOTE]

The problem is that confidence limits, and in fact all statistical inference, are based on the premise that induction works: that we can generalize individual measurements here and now to universal law. The tools of statistical inference are used to quantify our uncertainty under the presumption that the thing being measured is projectable, but, as I discuss in the note, it’s not always easy to know that you’re projecting the right thing (that you’re making the right inductive inference.) As I hope to show over the course of the next few notes, we can still make progress in science despite these limitations; and yes, statistics has much to say on it (though not the sampling statistics we use to summarize our data…)

Kant (1724-1804) argued that a priori knowledge exists. That is that some things can be known before [without] reference to our senses. Hume (1711-1776) argued that only a posteriori knowledge exists; knowledge is gained exclusively after perception through senses.

Hume promoted a more pure form of empiricism where passion ruled while Kant believed rational thought gave form to our observations.

So Hume would point out that any “gut feeling” is just that. Perhaps with more observation, the confidence limit could approach zero, but it couldn’t ever reach zero.

Kant might argue that with proper math, the limit could be made arbitrarily small. But the key would be well reasoned math and a good model.

So there’s an open question about the relationship between “the map and the territory”. Can a model be made so precise that it becomes the territory? Or in more modern terms, do we live in The Matrix? In a digital universe, the two can be identical. In a chaotic universe (in the mathematical sense), they cannot be.

IMO, science today should assume the least restrictive option, that the map is not the territory. Models represent reality, but are not reality. Perhaps that is not true, but assuming the opposite could lead to errors where we assume a poor map is better than it is.

Still, this borders on matters of faith, and people’s basic motivations in pursuing science. I would not want to discourage others from seeking their own truths.

[QUOTE=”Buzz Bloom, post: 5453177, member: 547865″]

I am not sure I understand what the above means. When science gives a calculated confidence level with a prediction/measurement, isn’t that the same thing as knowing the degree of imperfection? I do not know Hume’s history, so an explanation might be that he was unaware of probability theory.

[/QUOTE]

Every measurement in science is an estimated value rather than a perfectly determined value, and every confidence level or set of error bars is also an estimate.

You usually have to read the fine print in a published paper to determine how error estimates are calculated, on what assumptions the confidence levels are based, etc. Common assumptions that may not always be true are random errors and a normal distribution of measurement errors.

So in the language of your question, “the degree of imperfection” is not “known” exactly, it is only estimated, and that estimation is based on assumptions that may not always be true.

Although most of my own published papers include error bars and confidence levels, in most cases we only use one technique (the most common in the field we’re working in) to determine the error estimates we include in the paper. We do commonly estimate uncertainties with other available techniques, which I take to provide some ballpark estimates of how accurate the error estimates themselves might be. It is nearly universal for different approaches to estimating uncertainties to yield slightly different results. One approach might suggest 1% uncertainty, while another approach might suggest 2%. I end up with a “gut feeling” that the actual uncertainty is likely between 1% and 2%.

[QUOTE=”bapowell, post: 5453170, member: 217460″]the problem of induction shows that we cannot know how imperfect it is.[/QUOTE]

Hi Brian:

I am not sure I understand what the above means. When science gives a calculated confidence level with a prediction/measurement, isn’t that the same thing as knowing the degree of imperfection? I do not know Hume’s history, so an explanation might be that he was unaware of probability theory.

Regards,

Buzz

[QUOTE=”Buzz Bloom, post: 5452306, member: 547865″]Hi Brian:

I much like your presentation.

It occurs to me that Hume’s argument is based on an assumed definition about what knowledge is. The assumption seems to be

[INDENT]“Knowledge” is a belief for which there is [U]certainty[/U] that the belief is correct/true.[/INDENT]

[/QUOTE]

[INDENT]Thank you Buzz. I’m not sure that this is the kind of knowledge that Hume has in mind. Though his work predates Kant’s delineation between “analytic” and “synthetic” knowledge (that is, the distinction between logical and empirical truths), Hume understood that knowledge about the world must be ascertained by observing it. After all, he was one of the founders of empiricism. This is why he tangles with induction: it’s the only way to acquire knowledge through experience. Accepting that such knowledge is never perfect, the problem of induction illustrates that we cannot, in general, know how “good” our knowledge is. So Hume accepts that knowledge is imperfect; the problem of induction shows that we cannot know how imperfect it is.[/INDENT]

Dr. Courtney:

Whether students agreeing with the consensus is a good measure depends on one’s view of the purpose of education. Early 20th century education reformers like Dewey saw the purpose of education as the promotion of political unity. It’s not clear to me that has changed.

As Brian Powell points out, truth is a slippery concept. Political unity is much easier to understand.

Hi Brian:

I much like your presentation.

It occurs to me that Hume’s argument is based on an assumed definition about what knowledge is. The assumption seems to be

[INDENT]“Knowledge” is a belief for which there is [U]certainty[/U] that the belief is correct/true.[/INDENT]

It is the kind of knowledge associated with the theorems of pure math (and perhaps also theology). Even if we ignore the erroneous proofs of theorems that have occasionally fooled mathematicians in the past, this definition is completely incompatible with the concept of scientific knowledge, in which absolute certainty is recognized as always impossible. All scientific knowledge (excluding pure math knowledge) comes with a level of confidence which is less than certainty.

Note: As an example of a false proof of a theorem that was widely accepted for a decade, see

[INDENT][URL]http://www.bing.com/knows/search?q=Four%20color%20theorem&mkt=zh-cn[/URL].[/INDENT]

Here is a quote:

[INDENT]One alleged proof was given by Alfred Kempe in 1879, which was widely acclaimed… It was not until 1890 that Kempe’s proof was shown incorrect by Percy Heawood.[/INDENT]

Regards,

Buzz

[QUOTE=”voila, post: 5451131, member: 557068″]Dr. Courtney:

It’s not about whether theories are “really true”. They are not, for they are supported by inference and predictions (deductions only work within an inference theory framework). It’s about knowing the limits of the knowledge itself. [/QUOTE]

This viewpoint is abandoned in most cases where the scientific claims have political or policy implications. No one is threatened by the physicist who doesn’t believe Newton’s laws, Quantum Mechanics, or General Relativity are “really true” (in nearly every sense that physicists seem to make these claims).

But lots of folks are threatened when theories with greater political import or public policy implications are challenged (vaccinations, evolution, climate change, etc.). Very few scientists are willing to acknowledge that these “theories” may not really be true, but are only approximations.

This gives rise to Courtney’s law: the accuracy of the error estimates is inversely proportional to the public policy implications.

And the corollary: the likelihood of a claim for a theory to be absolutely true is directly proportional to the public policy implications.

I tend to sense a different epistemology at work when the conversation shifts from how well a theory is supported by data and repeatable experiment to arguments from authority citing how many “experts” support a given view.

Scientific inference and how we come to know stuff should be the same for theories with relatively few policy implications as they are for theories with broad and important policy implications. The way most science with policy implications is presented to the public (and often taught in schools) suggests the rules of inference and how we come to know stuff are rather flexible, when they really should not be.

When the success of education is judged by whether students agree with scientific consensus on most issues rather than by whether they really grasp how scientific inference and how we come to know stuff really work, education is bound to fail, because the metric for determining success is fundamentally flawed.

[quote=”PeterDonis, post: 5450416″]A minor nitpick: equation (1) does not evaluate to 1 for ##n = 0##, and it does evaluate to 11 for ##n = 5##. As you’ve written it, it should have ##2n - 1## at the end and be evaluated starting at ##n = 1##. Or, if you want to start with ##n = 0##, the final ##+1## in each of the five factors in parentheses should be a ##-1##.[/quote]Thanks and fixed!

Dr. Courtney:

It’s not about whether theories are “really true”. They are not, for they are supported by inference and predictions (deductions only work within an inference theory framework). It’s about knowing the limits of the knowledge itself. And by “It” I mean the work of the physicist. Phenomenological descriptions of the world are the goal of the physicist, regardless of implications; might as well know the basis of the work.

PS: Of course, a lot of physicists work on life-improving applications of this or that, but I meant to center on the “pure” side of things. Knowledge for knowledge’s sake. That necessarily includes the methodology itself.

I gave up worrying about whether or not a scientific theory is really true (in the mathematical sense) some time in college. I am only concerned about whether the predictions are accurate (and how accurate) and how well known the boundaries of application are.

No well-educated physicist pretends that Newton’s laws are rigorously true or exact (in light of quantum mechanics and relativity). But they are certainly good enough for 99% of mechanical engineering tasks. Improving our lives does not require any scientific theory to be rigorously “true,” only that it works “well enough.”

As a practical matter “well enough” usually means being some measure more accurate than the theory or model being replaced.

Brilliant stuff Brian!

A minor nitpick: equation (1) does not evaluate to 1 for ##n = 0##, and it does evaluate to 11 for ##n = 5##. As you’ve written it, it should have ##2n - 1## at the end and be evaluated starting at ##n = 1##. Or, if you want to start with ##n = 0##, the final ##+1## in each of the five factors in parentheses should be a ##-1##.

This is great stuff. This is a must-read for any scientist. Sadly, most ignore these kinds of questions.

Personally, I adhere to Kuhn’s view of science, so I’m looking forward to what you’ll say about him!

Is the principle of equivalence a form of induction, i.e., the local laws of physics are everywhere the same?

Kant (1724-1804) argued that a priori knowledge exists. That is, some things can be known before [without] reference to our senses. Hume (1711-1776) argued that only a posteriori knowledge exists; knowledge is gained exclusively after perception through the senses. Hume promoted a more pure form of empiricism where passion ruled, while Kant believed rational thought gave form to our observations. So Hume would point out that any "gut feeling" is just that. Perhaps with more observation, the confidence limit could approach zero, but it couldn't ever reach zero. Kant might argue that with proper math, the limit could be made arbitrarily small. But the key would be well-reasoned math and a good model. So there's an open question about the relationship between "the map and the territory". Can a model be made so precise that it becomes the territory? Or in more modern terms, do we live in The Matrix? In a digital universe, the two can be identical. In a chaotic universe (in the mathematical sense), they cannot be. IMO, science today should assume the least restrictive option, that the map is not the territory. Models represent reality, but are not reality. Perhaps that is not true, but assuming the opposite could lead to errors where we assume a poor map is better than it is. Still, this borders on matters of faith, and people's basic motivations in pursuing science. I would not want to discourage others from seeking their own truths.
