Struggles With the Continuum: Is Spacetime Really a Continuum?

Is spacetime really a continuum? That is, can points of spacetime really be described—at least locally—by lists of four real numbers ##(t,x,y,z)##? Or is this description, though immensely successful so far, just an approximation that breaks down at short distances?

Rather than trying to answer this hard question, let’s look back at the struggles with the continuum that mathematicians and physicists have had so far.

The worries go back at least to Zeno. Among other things, he argued that an arrow can never reach its target:

That which is in locomotion must arrive at the half-way stage before it arrives at the goal. — Aristotle summarizing Zeno

and Achilles can never catch up with a tortoise:

In a race, the quickest runner can never overtake the slowest, since the pursuer must first reach the point whence the pursued started, so that the slower must always hold a lead. — Aristotle summarizing Zeno

These paradoxes can now be dismissed using our theory of real numbers. An interval of finite length can contain infinitely many points. In particular, a sum of infinitely many terms can still converge to a finite answer.
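As a small numerical sketch of this point, one can add up the durations of Zeno's stages for Achilles and the tortoise. The head start and speeds below are made-up illustrative values, not from the text. Each stage is shorter than the last by the ratio of the tortoise's speed to Achilles' speed, so the durations form a geometric series whose infinitely many terms sum to a finite catch-up time.

```python
def zeno_stage_times(head_start, v_pursuer, v_pursued, n_stages):
    """Durations of the first n_stages of Zeno's race.

    In each stage the pursuer runs to where the pursued was at the start
    of that stage; meanwhile the pursued opens up a new, smaller gap.
    """
    gap = head_start
    times = []
    for _ in range(n_stages):
        dt = gap / v_pursuer      # time needed to close the current gap
        times.append(dt)
        gap = v_pursued * dt      # gap opened by the pursued during that time
    return times

# Hypothetical numbers: 100 m head start, Achilles at 10 m/s, tortoise at 1 m/s.
head_start, v_a, v_t = 100.0, 10.0, 1.0
partial = sum(zeno_stage_times(head_start, v_a, v_t, 60))
closed_form = head_start / (v_a - v_t)   # sum of the whole geometric series
print(partial, closed_form)
```

After 60 stages the partial sum already agrees with the closed-form catch-up time to within rounding error.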

But the theory of real numbers is far from trivial. It became fully rigorous only considerably after the rise of Newtonian physics. At first, the practical tools of calculus seemed to require infinitesimals, which seemed logically suspect. Thanks to the work of Dedekind, Cauchy, Weierstrass, Cantor, and others, a beautiful formalism was developed to handle real numbers, limits, and the concept of infinity in a precise axiomatic manner.

However, the logical problems are not gone. Gödel’s theorems hang like a dark cloud over the axioms of mathematics, assuring us that any consistent theory as strong as Peano arithmetic, or stronger, cannot prove itself consistent. Worse, it will leave some questions unsettled.

For example: how many real numbers are there? The continuum hypothesis proposes a conservative answer, but the usual axioms of set theory leave this question open: there could be vastly more real numbers than most people think. And the superficially plausible axiom of choice, which amounts to saying that the product of any collection of nonempty sets is nonempty, has scary consequences, like the existence of non-measurable subsets of the real line. This in turn leads to results like that of Banach and Tarski: one can partition a ball of unit radius into six disjoint subsets, and by rigid motions reassemble these subsets into two disjoint balls of unit radius. (Later it was shown that one can do the job with five, but no fewer.)

However, most mathematicians and physicists are inured to these logical problems. Few of us bother to learn about attempts to tackle them head-on, such as:

- nonstandard analysis and synthetic differential geometry, which let us work consistently with infinitesimals,

- constructivism, which avoids proof by contradiction: for example, one must ‘construct’ a mathematical object to prove that it exists,

- finitism, which avoids infinities altogether,

- ultrafinitism, which even denies the existence of very large numbers.

This sort of foundational work proceeds slowly and is now deeply unfashionable. One reason is that it rarely seems to intrude in ‘real life’ (whatever that is). For example, it seems that no question about the experimental consequences of physical theories has an answer that depends on whether or not we assume the continuum hypothesis or the axiom of choice.

But even if we take a hard-headed practical attitude and leave logic to the logicians, our struggles with the continuum are not over. In fact, the infinitely divisible nature of the real line—the existence of arbitrarily small real numbers—is a serious challenge to almost all of the most widely used theories of physics.

Indeed, we have been unable to rigorously prove that most of these theories make sensible predictions in all circumstances, thanks to problems involving the continuum.

One might hope that a radical approach to the foundations of mathematics, such as those listed above, would let us avoid some of the problems I’ll be discussing. However, I know of no progress along these lines that would interest most physicists. Some of the ideas of constructivism have been embraced by topos theory, which also provides a foundation for calculus with infinitesimals using synthetic differential geometry. Topos theory and especially higher topos theory are becoming important in mathematical physics. They’re great! But as far as I know, they have not been used to solve the problems I want to discuss here.

Today I’ll talk about one of the first theories to use calculus: Newton’s theory of gravity.

Newtonian Gravity

In its simplest form, Newtonian gravity describes ideal point particles attracting each other with a force inversely proportional to the square of their distance. It is one of the early triumphs of modern physics. But what happens when these particles collide? Apparently, the force between them becomes infinite. What does Newtonian gravity predict then?

Of course, real planets are not points: when two planets come too close together, this idealization breaks down. Yet if we wish to study Newtonian gravity as a mathematical theory, we should consider this case. Part of working with a continuum is successfully dealing with such issues.

In fact, there is a well-defined ‘best way’ to continue the motion of two point masses through a collision. Their velocity becomes infinite at the moment of collision but is finite before and after. The total energy, momentum, and angular momentum are unchanged by this event. So, a 2-body collision is not a serious problem. But what about a simultaneous collision of 3 or more bodies? This seems more difficult.

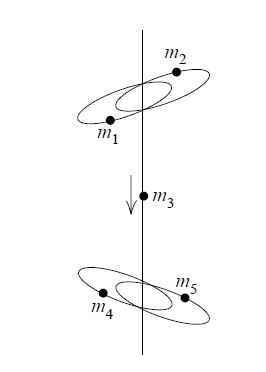

Worse than that, Xia proved in 1992 that with 5 or more particles, there are solutions where particles shoot off to infinity in a finite amount of time!

This sounds crazy at first, but it works like this: a pair of heavy particles orbit each other, another pair of heavy particles orbit each other, and these pairs toss a lighter particle back and forth. Xia and Saari’s nice expository article has a picture of the setup.

Each time the lighter particle gets thrown back and forth, the pairs move further apart from each other, while the two particles within each pair get closer together. And each time they toss the lighter particle back and forth, the two pairs move away from each other faster!

As the time ##t## approaches a certain value ##t_0##, the speed of these pairs approaches infinity, so they shoot off to infinity in opposite directions in a finite amount of time, and the lighter particle bounces back and forth an infinite number of times!

Of course, this crazy behavior isn’t possible in the real world, but Newtonian physics has no ‘speed limit’, and we’re idealizing the particles as points. So, if two or more of them get arbitrarily close to each other, the potential energy they liberate can give some particles enough kinetic energy to zip off to infinity in a finite amount of time! After that time, the solution is undefined.

You can think of this as a modern reincarnation of Zeno’s paradox. Suppose you take a coin and put it heads up. Flip it over after 1/2 a second, then flip it over after another 1/4 of a second, and so on. After one second, which side will be up? There is no well-defined answer. That may not bother us, since this is a contrived scenario that seems physically impossible. It’s a bit more bothersome that Newtonian gravity doesn’t tell us what happens to our particles when ##t = t_0##.
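The coin schedule can be made concrete with a short sketch (the function names are illustrative). Flips happen at ##t = 1/2, 3/4, 7/8, \dots##, that is, at ##t = 1 - 2^{-k}##. So at any time ##t < 1## only finitely many flips have occurred and the side showing is well defined. But sampling times ever closer to ##t = 1## gives an answer that alternates forever, so there is no limiting value to assign at ##t = 1## itself.

```python
import math

def flips_before(t):
    """Number of flips that have occurred by time t, for 0 <= t < 1.

    Flips happen at t = 1 - 2**-k for k = 1, 2, 3, ..., and
    1 - 2**-k <= t  is equivalent to  k <= -log2(1 - t).
    """
    if not 0 <= t < 1:
        raise ValueError("the flip schedule only defines the coin for 0 <= t < 1")
    return math.floor(-math.log2(1 - t))

def side_up(t):
    """The coin starts heads up, so an even number of flips means heads."""
    return "heads" if flips_before(t) % 2 == 0 else "tails"

# Sample one time strictly between consecutive flips, creeping toward t = 1.
for k in range(8):
    t = 1 - 0.75 * 2.0**-k
    print(f"t = {t:.6f}: {side_up(t)}")
```

The function refuses ##t = 1## deliberately: nothing in the schedule determines the state there, just as nothing in the equations of motion determines the particles' state at ##t = t_0##.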

You might argue that collisions and these more exotic ‘noncollision singularities’ occur with probability zero because they require finely-tuned initial conditions. If so, perhaps we can safely ignore them!

This is a nice fallback position. But to a mathematician, this argument demands proof.

A bit more precisely, we would like to prove that the set of initial conditions for which two or more particles come arbitrarily close to each other within a finite time has ‘measure zero’. This would mean that ‘almost all’ solutions are well-defined for all times, in a very precise sense.

In 1977, Saari proved that this is true for 4 or fewer particles. However, to the best of my knowledge, the problem remains open for 5 or more particles. Thanks to previous work by Saari, we know that the set of initial conditions that lead to collisions has measure zero, regardless of the number of particles. So, the remaining problem is to prove that noncollision singularities occur with probability zero.

It is remarkable that even Newtonian gravity, often considered a prime example of determinism in physics, has not been proved to make definite predictions, not even ‘almost always’! In 1814, Laplace wrote:

We ought to regard the present state of the universe as the effect of its antecedent state and as the cause of the state that is to follow. An intelligence knowing all the forces acting in nature at a given instant, as well as the momentary positions of all things in the universe, would be able to comprehend in one single formula the motions of the largest bodies as well as the lightest atoms in the world, provided that its intellect were sufficiently powerful to subject all data to analysis; to it nothing would be uncertain, the future as well as the past would be present to its eyes. The perfection that the human mind has been able to give to astronomy affords but a feeble outline of such an intelligence. — Laplace

However, this dream has not yet been realized for Newtonian gravity.

I expect that noncollision singularities will be proved to occur with probability zero. If so, the remaining question would be why it takes so much work to prove this, and thus prove that Newtonian gravity makes definite predictions in almost all cases. Is this a weakness in the theory or just the way things go? Clearly, it has something to do with three idealizations:

- point particles whose distance can be arbitrarily small,

- potential energies that can be arbitrarily large and negative,

- velocities that can be arbitrarily large.

These are connected: as the distance between point particles approaches zero, their potential energy approaches ##-\infty##, and conservation of energy dictates that some velocities approach ##+\infty##.
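Here is a back-of-the-envelope sketch of this connection, in hypothetical units where ##G = 1## and the total mass ##M = m_1 + m_2## is 1. Consider two point masses released from rest at separation ##r_0##. Their relative speed ##v## satisfies ##\tfrac12 v^2 - GM/r = -GM/r_0## (the reduced mass cancels out). Solving for ##v## shows that it grows without bound, roughly like ##r^{-1/2}##, as the separation ##r## shrinks.

```python
import math

def infall_speed(r, r0=1.0, G=1.0, total_mass=1.0):
    """Relative speed of two point masses released from rest at separation r0,
    once their separation has shrunk to r (Newtonian energy conservation).

    Relative kinetic energy is (1/2) mu v^2 and the potential energy is
    -G m1 m2 / r = -G mu M / r (mu = reduced mass, M = total mass), so mu
    cancels:  v^2 / 2 - G M / r = -G M / r0.
    """
    if not 0 < r <= r0:
        raise ValueError("need 0 < r <= r0")
    return math.sqrt(2 * G * total_mass * (1 / r - 1 / r0))

for r in (0.5, 1e-2, 1e-4, 1e-6):
    print(f"r = {r:g}: v = {infall_speed(r):.3g}")
```

Nothing in the formula caps the speed: each factor of 100 in closeness multiplies ##v## by roughly 10.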

Does the situation improve when we go to more sophisticated theories? For example, does the ‘speed limit’ imposed by special relativity help the situation? Or might quantum mechanics help, since it describes particles as ‘probability clouds’, and puts limits on how accurately we can simultaneously know both their position and momentum?

In Part 2 I’ll talk about quantum mechanics, which indeed does help.

I’m a mathematical physicist. I work at the math department at U. C. Riverside in California, and also at the Centre for Quantum Technologies in Singapore. I used to do quantum gravity and n-categories, but now I mainly work on network theory and the Azimuth Project, which is a way for scientists, engineers and mathematicians to do something about the global ecological crisis.

[QUOTE="Mark Harder, post: 5587655, member: 528112"]Are there physical observations with probability 0 'almost surely' that are in fact observed?[/QUOTE]

If you could exactly measure the position of a particle, the probability of getting any specific value would be zero, since the measure ##|\psi|^2 \, d^n x## is absolutely continuous. But of course it's unrealistic to act as if we exactly measure the position of a particle. A somewhat more realistic attitude is that we measure whether it's in some open set or not. Even this is an idealization of the true situation, but at least it means that the answer "yes, it's in the set" usually has probability ##> 0##.

There are lengthy discussions of these issues in the literature on probability theory and quantum mechanics; I'm just making some of the usual first remarks.

Stephen, proofs in probability theory often conclude that a result is certain or impossible 'almost surely', meaning that the probability of the truth of a conclusion is 1 or 0. Are there physical observations with probability 0 'almost surely' that are in fact observed? My understanding of probability 'density' distributions is that the probability of an exact value of the random variable is always zero. What is not zero is the product of the probability density and an interval in its domain. I guess that's the catch: what is the meaning of this 'interval'. It must be a set of non-zero measure, it seems; but doesn't the topology of the interval matter – whether it's connected or not, for instance.

[QUOTE=”WWGD, post: 5230033, member: 69719″]Excellent post!

Re the Banach-Tarski paradox, I think the construction assumes the existence of an uncountable number of atoms in order to do the specified partition. Since atoms are (AFAIK) known to be finite, this construction is not possible in our universe.[/QUOTE]

It would also require an infinite number of cutting operations.

[quote]It is remarkable that even Newtonian gravity, often considered a prime example of determinism in physics, has not been proved to make definite predictions, not even ‘almost always’! […] Does the situation improve when we go to more sophisticated theories? For example, does the ‘speed limit’ imposed by special relativity help the situation?[/quote]

One of the classic examples of indeterminism in Newtonian mechanics is Norton’s dome. There are indeed some indications (nothing definitive yet, it seems) that relativity might cure the problem:

Jon Pérez Laraudogoitia, “On Norton’s dome,” Synthese, 2012 [URL=’http://link.springer.com/content/pdf/10.1007%2Fs11229-012-0105-z’]http://link.springer.com/content/pdf/10.1007/s11229-012-0105-z[/URL]
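For readers who haven't met Norton's dome, here is a minimal sketch. It assumes the usual dimensionless form of the equation of motion, ##\ddot r = \sqrt{r}##, where ##r## is the radial distance measured along the dome's surface from the apex. A mass at rest at the apex (##r = \dot r = 0##) can stay there forever. Yet for every ##T \ge 0##, the function that equals 0 until ##t = T## and ##(t - T)^4/144## afterwards also solves the equation with the same initial data. The code checks this family numerically with a central-difference second derivative; the function names are hypothetical.

```python
import math

def dome_solution(t, T):
    """Candidate solution of r'' = sqrt(r) with r(0) = r'(0) = 0:
    the mass rests at the apex until time T, then slides off."""
    return 0.0 if t <= T else (t - T) ** 4 / 144.0

def residual(r, t, h=1e-3):
    """Central-difference estimate of r''(t) minus sqrt(r(t))."""
    second_derivative = (r(t + h) - 2.0 * r(t) + r(t - h)) / h**2
    return second_derivative - math.sqrt(r(t))

# Different departure times T give different futures from identical initial data.
for T in (0.0, 1.0, 2.5):
    worst = max(abs(residual(lambda s, T=T: dome_solution(s, T), t))
                for t in (0.5, 3.0, 4.0, 6.0))
    print(f"T = {T}: largest residual {worst:.1e}")
```

The residuals are at the level of the finite-difference error, so every member of the family, including "never move" (##T \to \infty##), is a solution. That is exactly the failure of uniqueness the paper discusses.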

[quote]But the theory of real numbers is far from trivial. It became fully rigorous only considerably after the rise of Newtonian physics. At first, the practical tools of calculus seemed to require infinitesimals, which seemed logically suspect. Thanks to the work of Dedekind, Cauchy, Weierstrass, Cantor and others, a beautiful formalism was developed to handle real numbers, limits, and the concept of infinity in a precise axiomatic manner.[/quote]

You might be interested in this historical paper:

Blaszczyk, Katz, and Sherry, Ten Misconceptions from the History of Analysis and Their Debunking, [URL]http://arxiv.org/abs/1202.4153[/URL]

They claim that the conventional wisdom about a lot of the history was wrong. In particular, they claim that there was a rigorous construction of the reals ca. 1600.

[QUOTE=”Stephen Tashi, post: 5215849, member: 186655″]I’m curious whether the continuum model creates a fundamental problem in applying probability theory to physics.[/QUOTE]

A different type of question, but one related to probability and the continuum: is entropy fundamental?

If entropy is fundamental, then it seems that the continuum cannot be fundamental, since the entropy is difficult to define for continuous quantities. For example, one may need “special coordinates”, such as canonical coordinates in Hamiltonian mechanics where the Jacobian determinant is 1.

If things are continuous, could it be that the mutual information or relative entropy is more fundamental than the entropy?
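Here is a small sketch of the coordinate dependence mentioned above, using the standard closed forms for normal distributions. The differential entropy of ##N(\mu, \sigma^2)## is ##\tfrac12 \ln(2\pi e \sigma^2)## nats. Rescaling the variable by a factor ##a## (say, by changing units) shifts it by ##\ln|a|##, so it can even be negative. The relative entropy (Kullback–Leibler divergence) between two such distributions is unchanged by the same rescaling.

```python
import math

def gaussian_entropy(sigma):
    """Differential entropy, in nats, of a normal distribution with std dev sigma."""
    return 0.5 * math.log(2 * math.pi * math.e * sigma**2)

def gaussian_kl(mu1, s1, mu2, s2):
    """Relative entropy D( N(mu1, s1^2) || N(mu2, s2^2) ), in nats."""
    return math.log(s2 / s1) + (s1**2 + (mu1 - mu2) ** 2) / (2 * s2**2) - 0.5

a = 100.0  # e.g. re-expressing a length in centimetres instead of metres
print(gaussian_entropy(1.0), gaussian_entropy(a * 1.0))   # differ by ln(a)
print(gaussian_kl(0.0, 1.0, 0.5, 2.0),
      gaussian_kl(0.0, a * 1.0, a * 0.5, a * 2.0))        # agree up to rounding
```

A general smooth change of variables shifts the differential entropy by the expected log of the Jacobian. That is why canonical coordinates with unit Jacobian are special, while the relative entropy needs no such choice.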

[QUOTE=”john baez, post: 5223989, member: 8778″] For both collisions and noncollision singularities, the distance between certain pairs of particles becomes arbitrarily close to zero as ##t to t_0##, where ##t_0## is the moment of disaster. So, if you made them finite-sized, they’d bump into each other and you’d have to decide what they did then. This seems more difficult than helpful.

[/QUOTE]

With the right mathematical definition of convergence, we wouldn’t have to worry about the result of a collision of finite objects. Thinking of a sequence of trajectories as a sequence of functions, we don’t have to insist on “uniform convergence” to a limit. Pointwise convergence would do. In other words, suppose trajectory f[n] is close to the limiting trajectory for times less than collision time tzero[n], and f[n] is arbitrarily defined for times greater than tzero[n] (and perhaps not close to the limiting trajectory at those times). As n approaches infinity, we would expect tzero[n] to approach infinity or to approach whatever time there is a collision in the point particle model of the system.
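The distinction can be illustrated with a toy sketch. The limiting trajectory ##f(t) = t## and the "set to 0 after ##t_0[n]##" rule below are arbitrary hypothetical choices. Let ##f_n## follow the limiting trajectory up to ##t_0[n] = n## and do something unrelated afterwards. At every fixed time the error is eventually zero, so ##f_n \to f## pointwise. But the worst error over all times never shrinks, so the convergence is not uniform.

```python
def limit_trajectory(t):
    return t  # hypothetical limiting trajectory

def approx_trajectory(n, t):
    """Agrees with the limit before the 'collision time' t0[n] = n,
    and is set to an arbitrary value (here 0.0) afterwards."""
    return limit_trajectory(t) if t < n else 0.0

times = [0.5 * k for k in range(41)]  # grid on [0, 20]

for n in (1, 5, 10, 15):
    error_at_3 = abs(approx_trajectory(n, 3.0) - limit_trajectory(3.0))
    worst = max(abs(approx_trajectory(n, t) - limit_trajectory(t)) for t in times)
    print(f"n = {n}: error at t = 3 is {error_at_3}, worst error on grid is {worst}")
```

The grid only covers ##[0, 20]##. Over all ##t \ge 0## the supremum of the error is infinite for every ##n##, even though each fixed time is eventually tracked exactly.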

If one describes the Goedel incompleteness theorems as dark clouds, doesn’t that in some sense favour the continuum, since although the arithmetic of the natural numbers isn’t decidable, the theory of real closed fields is decidable (according to Wikipedia)? [URL]https://en.wikipedia.org/wiki/Decidability_of_first-order_theories_of_the_real_numbers[/URL]

Edit: Is finite-dimensional quantum mechanics decidable? QM has a “continuum” part, the complex numbers, and a discrete part, the Hilbert space dimension.

[QUOTE=”Stephen Tashi, post: 5223751, member: 186655″]In the case of results for point particles, I’m curious if any conclusions change if we first solve a dynamics problem for objects of a finite size and then take the limit of the solution as the particle size approaches zero (instead of beginning with point particles at the outset).[/QUOTE]

For point particles interacting by Newtonian gravity, I’m not aware of any calculations like this. For both collisions and noncollision singularities, the distance between certain pairs of particles becomes arbitrarily close to zero as ##t to t_0##, where ##t_0## is the moment of disaster. So, if you made them finite-sized, they’d bump into each other and you’d have to decide what they did then. This seems more difficult than helpful.

On the other hand, for understanding a [I]single[/I] relativistic charged particle interacting with the electromagnetic field, a lot of famous physicists have done a lot of calculations where they assume it’s a small sphere and then (sometimes) take the limit where the radius goes to zero. I’ll talk about that in Part 3.

[QUOTE=”john baez, post: 5223136, member: 8778″]Probably nothing to do with the unsolvability of the quintic.[/QUOTE]

Thanks. I figured it was a long shot, but the numbers stood out so was worth asking. Every now and then there are some deep and surprising connections popping up.

When we have a function of several variables and take a limit as these variables approach values, there are situations in mathematics where the order of taking the limit matters. It’s also possible to define limits where some relation is enforced between two variables (e.g. let x and y approach zero but insist that y = 2x).
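A standard textbook example of this, added here as an illustration, is ##f(x, y) = x^2/(x^2 + y^2)##. Sending ##y \to 0## first and then ##x \to 0## gives 1, the opposite order gives 0, and approaching along ##y = 2x## gives ##1/5##. Numerically, one can mimic the iterated limits by making one variable much smaller than the other.

```python
def f(x, y):
    """x^2 / (x^2 + y^2): defined everywhere except the origin."""
    return x * x / (x * x + y * y)

tiny, tinier = 1e-8, 1e-16

print(f(tiny, tinier))   # y -> 0 "first": close to 1
print(f(tinier, tiny))   # x -> 0 "first": close to 0
print([f(x, 2 * x) for x in (1e-2, 1e-4, 1e-6)])  # along y = 2x: 1/5 up to rounding
```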

In the case of results for point particles, I’m curious if any conclusions change if we first solve a dynamics problem for objects of a finite size and then take the limit of the solution as the particle size approaches zero (instead of beginning with point particles at the outset). Of course, “limit of the solution” is a more complicated concept than “limit of a real valued function”. It would involve the concept of a sequence of trajectories approaching a limiting trajectory.

One possibility is that ambiguity might increase. For example, if we have two spherical particles X and Y and take the limit of the solution under the condition that the diameter of Y is always twice the diameter of X as both diameters approach zero, then is it possible we could get a different solution than if we take the limit with the condition that both have the same diameter? Perhaps we would get different results using oblong shaped objects than spherical objects. If ambiguity increases, then this suggests that the concept of a point particle as a limit of a sequence of finite particles is ambiguous.

[QUOTE=”Anama Skout, post: 5223185, member: 570127″]But even if they have a neutral charge, since there will always be a cloud of electrons in each they will eventually repel.[/QUOTE]

It sounds like you’re talking about quantum mechanics now, maybe: a neutral atom with a bunch of electrons? We were talking about Newtonian mechanics… but the quantum situation is discussed in my [URL=’https://www.physicsforums.com/insights/struggles-continuum-part-2/’]next post[/URL], and quantum mechanics changes everything.

[QUOTE=”john baez, post: 5223183, member: 8778″]If the particles have the same charge, they will repel. And for electrons, this repulsive force will [I]always[/I] exceed the force of gravity, by a factor of roughly 10[SUP]42[/SUP]. You see, since both the electrostatic force and gravity obey an inverse square law, it doesn’t matter how far apart the electrons are: the repulsive force [I]always[/I] wins, in this case.[/QUOTE]

But even if they have a neutral charge, since there will always be a cloud of electrons in each they will eventually repel.

[QUOTE=”john baez, post: 5223183, member: 8778″]But if the particles have opposite charges, the electrostatic force will make them attract even more![/QUOTE]

Ah yes! Didn’t think about that case.

[QUOTE=”Anama Skout, post: 5223152, member: 570127″]Great article again! :wink:[/QUOTE]

[QUOTE]I have a question: In Newtonian physics, if we want to model the collision of two particles why don’t we also assume that as the particles approach another force comes into play (namely the EM force) since as the particles approach they will never touch each other, and instead they will repel each other due to EM? [/QUOTE]

If the particles have the same charge, they will repel. And for electrons, this repulsive force will [I]always[/I] exceed the force of gravity, by a factor of roughly 10[SUP]42[/SUP]. You see, since both the electrostatic force and gravity obey an inverse square law, it doesn’t matter how far apart the electrons are: the repulsive force [I]always[/I] wins, in this case.

But if the particles have opposite charges, the electrostatic force will make them attract even more!
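Since both forces fall off as ##1/r^2##, the ratio ##k e^2 / (G m^2)## for two particles of charge ##e## and mass ##m## does not depend on their separation at all. Here is a quick sketch of that arithmetic, using CODATA 2018 recommended values for the constants (the variable names are just illustrative):

```python
# CODATA 2018 recommended values, SI units.
k_e = 8.9875517923e9           # Coulomb constant, N m^2 / C^2
e = 1.602176634e-19            # elementary charge, C
G = 6.67430e-11                # Newtonian constant of gravitation, N m^2 / kg^2
m_electron = 9.1093837015e-31  # kg
m_proton = 1.67262192369e-27   # kg

def coulomb_to_gravity_ratio(mass, charge=e):
    """Electrostatic force divided by gravitational force for two identical
    particles. The 1/r^2 factors cancel, so no separation is needed."""
    return k_e * charge**2 / (G * mass**2)

print(f"electrons: {coulomb_to_gravity_ratio(m_electron):.2e}")
print(f"protons:   {coulomb_to_gravity_ratio(m_proton):.2e}")
```

For two protons the ratio is smaller, around ##10^{36}##, because a proton is about 1836 times heavier than an electron and the ratio scales as ##1/m^2##.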

Great article again! :wink: I have a question: In Newtonian physics, if we want to model the collision of two particles, why don’t we also assume that as the particles approach, another force comes into play (namely the EM force), since as the particles approach they will never touch each other, and instead they will repel each other due to EM? That would – as far as I know – mean that the force won’t be infinite.

(Loose analogy: imagine two rocks attracting each other; as they get closer and closer, the electrons on the surface of each will repel each other…)

[QUOTE=”Lord Crc, post: 5223078, member: 70582″]The lack of solutions for 5 or more particles isn’t related to the Abel–Ruffini theorem? Or is it just a (temporary) coincidence?[/QUOTE]

Probably nothing to do with the unsolvability of the quintic. Right now the situation is this:

[LIST]

[*]With 5 particles we know it’s possible for Newtonian point particles interacting by gravity to shoot off to infinity in finite time without colliding.

[*]With 4 particles nobody knows.

[*]With 3 or fewer particles it’s impossible.

[/LIST]

and:

[LIST]

[*]With 3 or fewer particles, we know the motion of the particles will be well-defined for all times “with probability 1” (i.e., except on a set of measure zero).

[*]For 4 or more particles nobody knows.

[/LIST]

The lack of solutions for 5 or more particles isn’t related to the Abel–Ruffini theorem? Or is it just a (temporary) coincidence?

[QUOTE=”Telemachus, post: 5216305, member: 245368″]Wow, didn’t know this guy posted on this forum! great insight btw[/QUOTE]

Thanks! I’ve posted before in the “Beyond the Standard Model” section, but Physics Forums came after the days when I enjoyed discussing physics endlessly on online forums – if you look at old sci.physics.research posts, you’ll see me in my heyday.

[QUOTE=”john baez, post: 5216272, member: 8778″]

The sense of “paradox” one might feel about this can also be raised by this question: “how can a region of nonzero volume be made of points, each of which has volume zero?”

Mathematically these two problems are the same.

[/QUOTE]

Yes, if we leave out the question of “actuality” then what’s left is handled by measure theory – in physical terms, it’s the same as dealing with the phenomenon that the mass of an object “at a point” is zero and yet the whole object has a nonzero mass. We can define “mass density” and say the “mass density” at a point in the object is nonzero.

However, if we want to experimentally determine (i.e. estimate) the mass density of an object at a point there is no theoretical problem in imagining that we take a “small” chunk of the object around the point, measure its mass and divide the mass by the chunk’s volume.

However, suppose we try to apply a similar technique to estimating the value of a probability density function at a point in a continuum. The natural theoretical approach is that we take zillions of samples from the distribution, we see what fraction of the samples lie within a small interval that contains the point, and we divide the fraction by the length of the interval. But this theoretical approach assumes we can “actually” take samples with exact values in the first place. By contrast, the estimation of mass density didn’t require assuming we could directly measure values associated with single points.
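The histogram-style estimate described here can be sketched in a few lines, using a standard normal distribution as the test case (the function name, seed, and sample size are arbitrary choices). The "exact" samples are themselves double-precision numbers, so even this simulation only ever draws from a finite set of values.

```python
import math
import random

def estimate_density(samples, x, width):
    """Estimate a probability density at x as
    (fraction of samples in [x - width/2, x + width/2)) / width."""
    lo, hi = x - width / 2, x + width / 2
    hits = sum(1 for s in samples if lo <= s < hi)
    return hits / len(samples) / width

rng = random.Random(0)                 # fixed seed so the run is reproducible
samples = [rng.gauss(0.0, 1.0) for _ in range(200_000)]
exact = 1 / math.sqrt(2 * math.pi)     # standard normal density at 0
print(estimate_density(samples, 0.0, 0.1), exact)
```

Shrinking the interval trades bias for noise: a narrower interval catches fewer samples. So the estimate at a single point is only ever approached, never read off directly.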

[QUOTE=”eltodesukane, post: 5216740, member: 394501″]It is kind of strange that the (probably) discrete spacetime is approximated by continuous mathematics (Real Numbers), which are usually computed using a discrete floating point datatype.[/QUOTE]

That is indeed an amusing irony… But also a fairly new one. Before the digital computer, before William Kahan and the IEEE floating point standard (which none of us appreciate as much as we should), there was the slide rule.
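To make the irony concrete: the double-precision numbers that stand in for the reals form a finite, discrete set. Here is a short sketch using Python's standard library (`math.ulp` and `math.nextafter` need Python 3.9 or later). For non-negative doubles, the IEEE 754 bit patterns are ordered like the values, so subtracting them counts the representable numbers in an interval.

```python
import math
import struct

def bits(x):
    """Reinterpret a double's IEEE 754 bit pattern as a signed 64-bit integer."""
    return struct.unpack("<q", struct.pack("<d", x))[0]

def doubles_between(a, b):
    """Number of representable doubles in [a, b), for finite 0 <= a <= b
    (positive zero only; -0.0 is not handled)."""
    return bits(b) - bits(a)

print(math.ulp(1.0))              # gap from 1.0 to the next double, 2**-52
print(math.nextafter(1.0, 2.0))   # the very next double after 1.0
print(doubles_between(1.0, 2.0))  # 2**52 doubles in [1, 2)
print(0.1 + 0.2 == 0.3)           # rounding makes this False
```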

Excellent post! Re the Banach-Tarski paradox, I think the construction assumes the existence of an uncountable number of atoms in order to do the specified partition. Since atoms are (AFAIK) known to be finite, this construction is not possible in our universe.

wonderful read. thanks!

The last sentence of my post was:

> Next time I’ll talk about quantum mechanics, which indeed does help.

But can’t all the dilemmas that you propose disappear with quantum mechanics?

It is kind of strange that the (probably) discrete spacetime is approximated by continuous mathematics (Real Numbers), which are usually computed using a discrete floating point datatype.

The most subtle aspect of the question is the "coordination" step (http://ncatlab.org/nlab/show/coordination) that takes one from a mathematical formalization of some physics to the corresponding perception of conscious observers living in that physical world. It is not a priori clear that if a mathematical theory models space(time) as a continuum, this is also what observers in this world will perceive.

Take the example of phase space. Common lore has it that after quantization it turns from a continuous smooth manifold into a non-commutative space. This assertion is where much of the effort towards noncommutative models of spacetime takes its motivation. But it is not unambiguously true!

Namely, the folklore about phase space becoming a noncommutative space draws from one of two available formalizations of quantization, which is algebraic deformation quantization (http://ncatlab.org/nlab/show/deformation+quantization). There is another formalization, which may be argued to be more fundamental, as it is less tied to the perturbative regime: this is geometric quantization (ncatlab.org/nlab/show/geometric+quantization). (John B. knows all this, of course; I am including references only so that this comment is somewhat self-contained, for the sake of other readers.)

Now in geometric quantization there is no real sense in which phase space would become a non-commutative space. It remains perfectly well a continuous smooth manifold (infinite-dimensional, in general). What appears as a non-commutative deformation of the continuum in deformation quantization is in geometric quantization instead simply the action of a nonabelian group of Hamiltonian symmetries (conserved Noether currents, in field theory) on the continuous smooth phase space. And yet, where their realms of definition overlap, both these quantization procedures describe the same reality, the same standard quantum world.

That is incidentally what the infinity-topos perspective, thankfully mentioned in the article above, makes use of: geometric quantization, as the name suggests, is intrinsically differential geometric, and as such lends itself to being formulated in the higher differential geometry embodied by cohesive infinity-toposes.

Wow, didn't know this guy posted on this forum! Great insight btw. There are so many things we don't know about the nature of spacetime, which rely on the deepest concepts of all physics. I saw some of your videos about your favourite numbers; you are great, sir.

Regards.

Good questions! Clearly events with probability zero cannot be considered "impossible" if we allow probability distributions on the real line where each individual point has measure zero, yet the outcome must be some point or other. The sense of "paradox" one might feel about this can also be raised by this question: "how can a region of nonzero volume be made of points, each of which has volume zero?" Mathematically these two problems are the same. In measure theory we deal with them by saying that measures are countably additive, but not additive for uncountable disjoint unions: so, an uncountable union of points having zero measure can have nonzero measure. In short, there is no real paradox here, even though someone with finitist leanings may find this upsetting, and perhaps even rightfully so.

As for events "actually happening", and how this concept doesn't appear in probability theory, I'll just say that the corresponding problem in quantum theory has led to endless discussion, with some people taking the many-worlds view that "everything possible happens in some world", others claiming that's nonsense, and others saying that probabilities should be interpreted in a purely Bayesian way. I don't think this is about the continuum: it's about what we mean by probability.

I'm curious whether the continuum model creates a fundamental problem in applying probability theory to physics.

Although applied mathematics often deals with probabilistic problems where events "actually" happen, there is no notion of events "actually" happening in the formal mathematical theory of probability. The closest notion to an "actual event" in that theory is the notion of "given" that is employed in the phrase defining conditional probability. However, the definition of conditional probability defines a probability; it doesn't define the "given-ness" of an event any more than the mathematical definition of limit gives a specific meaning to the word "approaches". When we assume a sample has been taken from a normal distribution, we have assumed an event with probability zero has occurred. This brings up familiar discussions about whether events with probability zero are "impossible". The apparent paradox is often resolved by saying human beings cannot take infinitely precise sample values from a normal distribution. Thus our "actual" samples are effectively a sample from some discrete distribution. However, can Nature take infinitely precise samples from a distribution on a continuum?