Differential Equation Systems and Nature

Abstract

“Mathematics is the native language of nature” is a phrase that is often used to explain why mathematics is everywhere in the natural sciences, especially in physics. What does that mean? A closer look shows that it primarily means that we describe nature by differential equations, a lot of differential equations. There are so many that it would take an entire encyclopedia to gather all of them. The following article is intended to take the reader through this maze along with examples, many pictures, a little bit of history, and the theorem about the existence and uniqueness of solutions: the theorem of Picard-Lindelöf.

A Glimpse of Our Descriptions of the World

It all began with Isaac Newton in 1687, or if we are picky, with Gottfried Leibniz during his stay in Paris 1672-1676 [34]. The reference to Paris is more than a side note since it was especially French mathematicians who developed the field of analysis in the following centuries to the state we know today. Joseph-Louis Lagrange, Pierre-Simon Laplace, Adrien-Marie Legendre, Augustin-Louis Cauchy, and Pierre-Alphonse Laurent are names we take for granted in everyday use. Charles Briot and Jean-Claude Bouquet coined the term holomorphic function in 1875, and the family of differential equations

$$

x^my'=f(x,y)

$$

carries their name. Other great contributors were the Bernoulli family (Jakob, Johann, Daniel), Carl-Friedrich Gauß, George Gabriel Stokes, Marius Sophus Lie, and Amalie Emmy Noether, to name a few. They all set milestones in mathematics and paved the way.

Of course, there has been important mathematics before the seventeenth century, as there has been important physics before Newton. Euclid of Alexandria and Archimedes of Syracuse lived in the third century B.C.! But if we look at the main subjects that we need today to describe nature, then we will end up with power series and forces.

Physics

It all began with Isaac Newton in 1687.

$$

F \;\sim\;\ddot{x}

$$

is nowadays the definition of a force. And it is a differential equation! The equation also tells us what happens without a force, namely that there is no change of motion ##\dot{v}## either, i.e. motion is conserved in the absence of forces

$$

F =0\;\Longleftrightarrow \;\ddot{x}=\dot{v}=0.

$$

Emmy Noether [1],[2] showed 231 years later that every conserved physical quantity corresponds to invariants of differential expressions. For example, James Clerk Maxwell introduced in 1864 his equations of electromagnetism which are all differential equations. Electromagnetism is a theory of the corresponding vector fields.

\begin{align*}

\vec{\nabla} \times \vec{H}=\vec{j}+\dot{\vec{D}}\; &, \;

\vec{\nabla} \times \vec{E}=-\dot{\vec{B}}\\

\vec{\nabla} \cdot \vec{D}=\rho\; &, \;

\vec{\nabla} \cdot \vec{B}=0

\end{align*}

Albert Einstein [3] even described the whole cosmos in 1915 by the differential Einstein Field Equations

$$

R_{\mu \nu }-{\frac{1}{2}}g_{\mu \nu }R+\Lambda g_{\mu \nu }={\frac{8\pi G}{c^{4}}}T_{\mu \nu }.

$$

These are outstanding results without any doubt. The examples of forces in classical, i.e. non-relativistic, physics and of Noether’s theorem, however, show that there are more examples to expect.

What the Taylor series is for mathematicians

$$

f(x)=\sum_{n=0}^\infty \dfrac{(x-x_0)^n}{n!}\cdot \left. \dfrac{d^n}{dx^n}\right|_{x=x_0}f(x)

$$

is the harmonic oscillator for physicists

$$

{\ddot{x}}+\omega^{2}\,x=0.

$$

Both appear in new variations and contexts in every year of studying mathematics and physics, respectively, and both are differential expressions. While studying vacuum tubes in 1927, Balthasar van der Pol found an oscillator with non-linear damping and positive feedback

$$

\ddot{x}-\varepsilon (1-x^{2}){\dot{x}}+x=0

$$

that does not allow a closed-form solution. Most systems aren’t as nice as waves

$$

\ddot{x}\sim \nabla^2 x

$$

or the heat equation (Jean-Baptiste Joseph Fourier, 1822)

$$

\dot{x}\sim\nabla^2 x.

$$

The holy grail of differential equation systems among the not-so-nice systems are the Navier-Stokes equations

$$

{\displaystyle \overbrace {\underbrace {\frac {\partial \mathbf {u} }{\partial t}} _{\begin{smallmatrix}{\text{Variation}}\end{smallmatrix}}+\underbrace {(\mathbf {u} \cdot \nabla )\mathbf {u} } _{\begin{smallmatrix}{\text{Convection}}\end{smallmatrix}}} ^{\text{Inertia (per volume)}}\overbrace {-\underbrace {\nu \,\nabla ^{2}\mathbf {u} } _{\text{Diffusion}}=\underbrace {-\nabla w} _{\begin{smallmatrix}{\text{Internal}}\\{\text{source}}\end{smallmatrix}}} ^{\text{Divergence of stress}}+\underbrace {\mathbf {g} } _{\begin{smallmatrix}{\text{External}}\\{\text{source}}\end{smallmatrix}}}.

$$

They are named after Claude Louis Marie Henri Navier and George Gabriel Stokes, and they describe the behavior of fluids and gases like water, air, or oil. They are therefore important in the development and design of ships, airplanes, and cars. They can only be solved numerically in their discrete version. There are no exact analytical solutions for these complicated applications. The Navier-Stokes equations are also a mathematical challenge because we do not know whether there is an everywhere-defined, smooth, and unique solution for the general three-dimensional case at all. This is one of the seven Millennium Prize Problems [4]. The Poincaré conjecture is considered to be solved by Grigori Perelman (2002, 2003), so we should probably better speak of the six Millennium Prize Problems of the Clay Mathematics Institute.

The language of physics, at least the aspect of it with which we are concerned, namely differential geometry, is full of designations for differential expressions. There is of course the simple differentiation that leads to the differential operator

$$

\dfrac{d}{dx}

$$

The reason why it carries a name of its own, rather than just being called differentiation, is the point of view: we transform one function ##x\mapsto f(x)## to another function ##x\mapsto f'(x)## rather than computing slopes. Its multivariate counterpart is the Nabla operator

$$

\vec{\nabla }=\left({\dfrac{\partial }{\partial x_{1}}},\ldots ,{\dfrac{\partial }{\partial x_{n}}}\right)

$$

that produces the gradient vector of a function. It leads to the Laplace operator

$$

\Delta f=\nabla \cdot (\nabla f)=(\nabla \cdot \nabla )f=\nabla^{2}f

$$

when applied twice. It deserves its own name since it appears in another designation, the Schrödinger operator

$$

-\dfrac{\hbar^{2}}{2m}\Delta +V

$$

and the d’Alembert operator

$$

\Box=\dfrac{1}{c^2}\dfrac{\partial^2}{\partial t^2} -\Delta \,.

$$

Even the Hodge star operator

$$

{}^\ast \colon \Lambda ^{k}(V^{*})\rightarrow \Lambda ^{n-k}(V^{*})

$$

that at first sight only transforms vectors in Graßmann algebras is, at second sight, an operator between cotangent spaces and thus an operator in differential geometry, too. There are also differential equations or, better, differential formalisms that carry specific names, like the Lagrangian or Euler-Lagrange equation

$$

\dfrac{d}{dt}\dfrac{\partial L}{\partial \dot{q}}- \dfrac{\partial {L}}{\partial q}=0

$$

The importance of differential expressions in the description of physics is reflected in the language of physics. However, I promised you the description of the world, and although we already had the description of the cosmos, and physics is by definition all of nature, let’s have a look into other fields of science.

Other Sciences

We find differential equations even in Chemistry which is by nature a discrete world, e.g. the reaction equation of three substances [5]

$$

\dot{x}(t)=K(A-x)(B-x)(C-x)

$$

This looks admittedly a bit artificial, but differential equations were really modern in 1889. And to be fair, the balance equation in a Bachelor’s thesis in chemistry from 2010 [6] is not much different. My professor for differential systems used to say that the entire world is discrete if you only look closely enough. Nevertheless, he taught us how differential equation systems can be approached and in some cases solved. So differential equation systems found their way even into chemistry.

The situation changes completely as soon as we consider stochastic differential equations, i.e. derivatives of probability density functions ##P(x,t).## This opens up the door for a whole variety of applications, especially in economics. The Fokker-Planck differential equations named after Adriaan Daniël Fokker and Max Planck

$$

\dfrac{\partial }{\partial t}P(x,t)=\underbrace{-\dfrac{\partial }{\partial x}\left[A(x,t)P(x,t)\right]}_{\text{drift}}+\underbrace{\dfrac{1}{2}\dfrac{\partial^2 }{\partial x^2}\left[B(x,t)P(x,t)\right]}_{\text{diffusion / fluctuation}}

$$

are widely used in economics, e.g., in forex trading [7], equilibrium models for insider trading [30], on transition processes of income distributions [8], and more. The related Black-Scholes differential equations

$$

\dfrac{\partial C(x,t)}{\partial t}=-\dfrac{1}{2}\sigma^2x^2 \dfrac{\partial^2 C(x,t)}{\partial x^2}-rx\dfrac{\partial C(x,t)}{\partial x}+rC(x,t)

$$

named after Fischer Black and Myron Samuel Scholes are considered a milestone in option pricing [9]; ##C(x,t)## represents the price of the derivative. Another example of differential equations in economics is Kaldor’s growth model [10] from 1957, named after Káldor Miklós

$$

\dot{y} \sim c(y) +\dot{k} - y +k_0

$$

where ##y## is the gross national income, and ##k## the national capital stock. Even car traffic can be modeled by differential equations, the traffic equation, a non-linear hyperbolic wave equation for flow ##f(x,t)## and density ##\varrho(x,t)##

$$

\dfrac{\partial }{\partial t}\varrho(x,t) + \dfrac{\partial }{\partial \varrho}f(\varrho (x,t))\cdot \dfrac{\partial }{\partial x}\varrho(x,t)=0\,.

$$

Of course, economics, macro- as well as microeconomics, also uses the wide areas of game theory [11] and control theory [12],[13]. Differential equation systems play a central role in both fields. Let me mention mean-field game theory as an example. It is the study of strategic decision-making by small interacting agents in large populations.

\begin{align*}

\partial u_t-\nu \Delta u+H(x,m,Du)&=0\\{\partial m_t}-\nu \Delta m-\operatorname{div}(D_{p}H(x,m,Du)m)&=0\\ u(x,T)-G(x,m(T))&=0

\end{align*}

The similarity of the name to mean-field theory in physics is intentional.

An often overlooked application of differential equation systems is timber management, on local as well as nationwide levels. The timber industry is almost predestined for differential equations: long-term investigation periods, natural growth with human disturbances, and yield optimization under constraints. In his dissertation [14], D.B. Müller introduced a regional model that he called XYLOIKOS and that is based on ##200## years of data. His standard version of the model consists of ##221## coupled non-linear integral and differential equations, ##182## parameter functions, and ##371## single parameters! The corresponding simulation was realized by H.P. Bader and R. Scheidegger in Rocky-Mountain-Basic, 1996 [15].

Considering the complexity of differential equation systems in the regional timber industry, imagine the size of climate models! I recommend that interested readers look for lecture notes on university servers, e.g. [16], and the literature mentioned there. The first general circulation model by Manabe and Wetherald dates back to 1967 [17], and we have been progressing ever since. We can’t even come close to going into climate models here, so I just want to mention Daisyworld, with a wink.

\begin{align*}

\dot{A}_w&=\left(\beta_wA_g - \chi \right)A_w\\

\dot{A}_b&=\left(\beta_bA_g - \chi \right)A_b\\

A_g&=1-A_w-A_b

\end{align*}

James Lovelock and Andrew Watson created a computer simulation in 1983 [18] in order to support the Gaia paradigm, which says that the biosphere of a planet, our planet, forms a synergistic and self-regulating complex system that helps to maintain and perpetuate the conditions for life on a planet. Their hypothetical planet had only two life forms, black and white daisies, and is otherwise similar to Earth [19]. They mainly investigated the size of the areas where those daisies grow and their albedo effect. Both the Gaia paradigm and the simplifications of the model were subject to criticism, and we must not forget the time, that is, the increased computer capacity we have gained in the meantime.

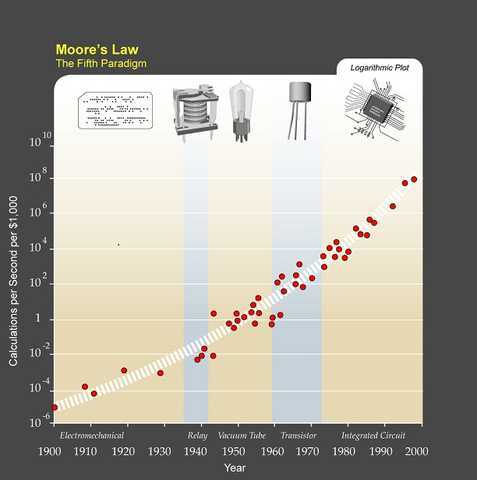

A perfect moment to remember Moore’s law: the number of transistors on microchips doubles every two years [20].

Biology

Any differential expression is a formula about change, often with respect to a time variable. And biology is the field of natural sciences where everything is about change, namely growth.

$$

\dot{x}\sim x

$$

is the simplest and most basic formula of growth. Its solution is

$$

t\longmapsto x(t)=e^{at}

$$

and is therefore called exponential growth, cf. [21]. It describes for example the unconstrained growth of bacteria, or Moore’s law. However, nothing in nature is without constraints. We thus consider in a first step the logistic growth

$$

\dot x \sim x\cdot (c-x)

$$

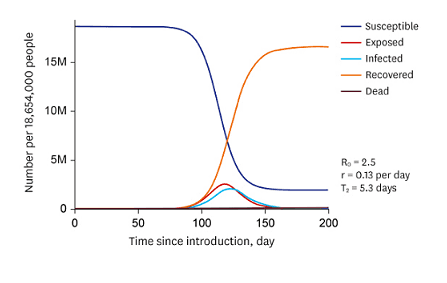

with a capacity limit, e.g. the size of the Petri dish. But unlike a bacterium in a Petri dish, bacteria in the wild are not independent of one another or of other changing parameters. This means that the better we want to describe reality, the more we have to consider coupled systems with feedback, be it positive (virus infection) or negative (declining resources). The previous examples showed this. One of the more famous classes of differential equation systems in nature is the class of SIR or Kermack-McKendrick models, especially with Covid in mind [22],[23]

\begin{align*}

\dfrac{\mathrm{d} S}{\mathrm{d} t}&=\nu \,N-\beta \dfrac{S\,I}{N}-\mu \,S\;,\\[6pt]

\dfrac{\mathrm{d} I}{\mathrm{d} t}&=\beta \dfrac{S\,I}{N}-\gamma \,I-\mu \,I\;,\\[6pt]

\dfrac{\mathrm{d} R}{\mathrm{d} t}&=\gamma \,I-\mu \,R\;.\\

\end{align*}

SIR models are intended to describe outbreak, course, and the end of epidemics and pandemics and were introduced by William Ogilvy Kermack and Anderson Gray McKendrick in 1927 [24]. The three quantities under consideration are the number of Susceptible individuals, the number of Infectious individuals, and the number of Removed (and immune) or deceased individuals. The extensions of the model that also take the number of Exposed individuals into account are called SEIR models [25]. The coefficients reflect recovery and infection rates, and general birth and death rates. They do not depend on time, which is a significant simplification compared to real-life pandemics where varying types of a virus occur.
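To get a feeling for how the three quantities interact, the system can be stepped forward in time with small differences. The following is only a minimal sketch: it uses the simple explicit Euler method, and the function names, the population size, and all rate values (##\beta=0.3,\,\gamma=0.1,\,\nu=\mu=0##) are illustrative assumptions, not fitted data.

```python
# Minimal SIR sketch with optional birth/death terms, explicit Euler steps.
# All parameter values are illustrative assumptions, not fitted data.


def sir_step(s, i, r, dt, beta, gamma, nu, mu):
    """Advance (S, I, R) by one explicit Euler step of size dt."""
    n = s + i + r                      # total population N
    new_infections = beta * s * i / n  # term beta * S * I / N
    ds = nu * n - new_infections - mu * s
    di = new_infections - gamma * i - mu * i
    dr = gamma * i - mu * r
    return s + dt * ds, i + dt * di, r + dt * dr


def simulate_sir(s0, i0, r0, days, dt=0.01,
                 beta=0.3, gamma=0.1, nu=0.0, mu=0.0):
    """Return a list of (t, S, I, R) tuples covering the time span [0, days]."""
    steps = int(round(days / dt))
    s, i, r = float(s0), float(i0), float(r0)
    history = [(0.0, s, i, r)]
    for k in range(1, steps + 1):
        s, i, r = sir_step(s, i, r, dt, beta, gamma, nu, mu)
        history.append((k * dt, s, i, r))
    return history


if __name__ == "__main__":
    run = simulate_sir(s0=9990, i0=10, r0=0, days=160)
    peak_t, _, peak_i, _ = max(run, key=lambda row: row[2])
    print(f"peak of infections: {peak_i:.0f} individuals at t = {peak_t:.1f}")
```

With ##\nu=\mu## the right-hand sides add up to zero, so the total population ##S+I+R## stays constant, which is a quick sanity check for any implementation.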

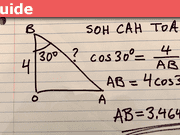

Predator-Prey Model

The differential equations for a system with a predator and a prey population were set up independently by Alfred James Lotka [26] in 1925 and Vito Volterra [27] a year later. The Lotka-Volterra models work quite well for two or three species. Furthermore, they have the big advantage that we can draw, explain, solve (more or less), and test them. That’s why they show up in every textbook that deals with coupled differential equation systems and consequently here, too. The basic idea behind the equations, and this is a well-documented phenomenon in countless biological situations, is that a population of predators thrives when prey is abundant, which reduces the population size of prey, so that predators are forced to have fewer offspring, and the population of prey can recover until the cycle starts again. The two coupled differential equations are

\begin{align*}

\dot{x}(t)&=x(t)\left(\alpha -\beta y(t)\right)\\

\dot{y}(t)&=-y(t)\left(\gamma -\delta x(t)\right)

\end{align*}

where ##t## is the time variable, ##x(t)## the population size of prey, and ##y(t)## the number of predators. The coefficients are the parameters. They must be adjusted to the situation under investigation. ##\alpha >0## is the birth rate of prey under ideal conditions, ##\beta>0## the death rate per predator. ##\gamma >0## is the death rate among predators, and ##\delta >0## the reproduction rate of predators per prey. If I remember correctly, then my professor mentioned wolves, mountain hares, and caribous in Nova Scotia as a working example. However, it will be sufficient for demonstration purposes to choose some fantasy values that ensure nice pictures. Hence we will consider the equations

\begin{align*}

\dfrac{dx(t)}{dt}=\dot{x}(t)&=10x(t)-2x(t)y(t)=(10-2y(t))\cdot x(t)\\[6pt]

\dfrac{dy(t)}{dt}=\dot{y}(t)&=-7y(t)+x(t)y(t)=(-7+x(t))\cdot y(t)

\end{align*}

If we eliminate ##dt## and integrate on both sides, then

$$

y(t)^{10}e^{-2y(t)}=k\cdot x(t)^{-7}e^{x(t)}\, , \,k=e^C>0

$$

which transforms to the function

$$

F(x,y)=\dfrac{e^{x}}{x^7}\cdot \dfrac{e^{2y}}{y^{10}} =\dfrac{1}{k}=e^{-C}

$$
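The step of eliminating ##dt## and integrating can be spelled out as follows. This is only a sketch under the assumption ##x,y>0## and away from the lines where a numerator or denominator vanishes; ##C## is the constant of integration.

\begin{align*}
\dfrac{dy}{dx}&=\dfrac{\dot{y}}{\dot{x}}=\dfrac{(-7+x)\cdot y}{(10-2y)\cdot x}\\[6pt]
\dfrac{10-2y}{y}\,dy&=\dfrac{x-7}{x}\,dx\\[6pt]
10\log y-2y&=x-7\log x+C\\[6pt]
y^{10}e^{-2y}&=e^{C}\cdot x^{-7}e^{x}
\end{align*}

Dividing the last line by ##e^{C}\,y^{10}e^{-2y}## gives ##e^{x}x^{-7}\cdot e^{2y}y^{-10}=e^{-C},## which is the function ##F(x,y)## from above.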

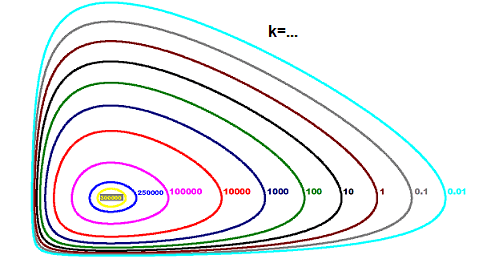

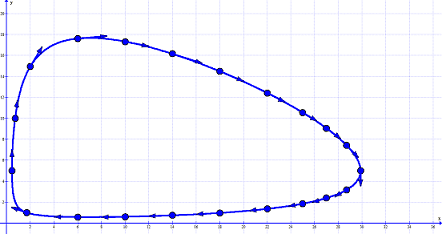

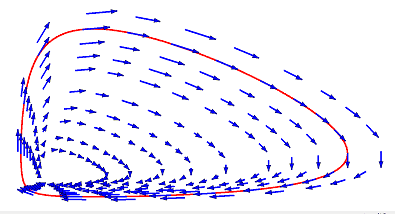

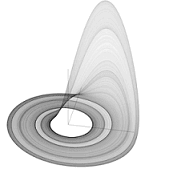

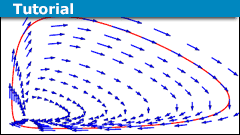

Although we still do not know what ##x(t),y(t)## look like, we already can conclude a few properties of the system. If we vary the constant ##k## and plot the graph of ##F(x,y)##, then we get the phase plot or phase space plot of the Lotka-Volterra system,

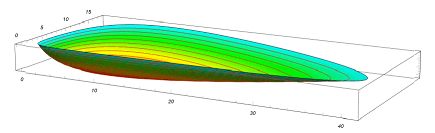

The three-dimensional graph of ##F(x,y)## looks a bit like a bowl [28].

It consists of all possible flows ##F(x,y)=1/k##

through the vector field of the differential equation system

The diagrams show us that

- None of the two populations can become extinct.

- Both population sizes vary periodically by time since the flows are closed curves. We already expected this from the informal description.

- Which flow of all possible ones is the actual one depends only on the initial values of the system, e.g. the red line through the vector field.

- There must be a critical point at some large value of ##k.##

\begin{align*}

F_x=\dfrac{\partial F}{\partial x}&=\left(\dfrac{1\cdot e^x}{x^7}-7\dfrac{e^x}{x^8}\right)\cdot \dfrac{e^{2y}}{y^{10}}=\dfrac{(1\cdot x- 7)e^x}{x^8}\cdot \dfrac{e^{2y}}{y^{10}}\\

F_y=\dfrac{\partial F}{\partial y}&=\left(\dfrac{2\cdot e^{2y}}{y^{10}}-10\dfrac{e^{2y}}{y^{11}}\right)\cdot \dfrac{e^{x}}{x^{7}}=\dfrac{(2\cdot y- 10)e^{2y}}{y^{11}}\cdot \dfrac{e^{x}}{x^{7}}\\[6pt]

F_x=0 &\Longleftrightarrow x_0=\dfrac{\gamma }{\delta }=7 \\

F_y=0 &\Longleftrightarrow y_0=\dfrac{\alpha}{\beta}=5

\end{align*}

We have therefore a critical point at ##(7,5)## which is a local minimum. It corresponds to ##k\approx 332,951## and ##C\approx 12.7.##

- Assume that ##T## is the common period of both populations. Then

\begin{align*}

0&=\log x(T) -\log x(0)=\int_0^T \dfrac{d}{dt}(\log x(t))\,dt=\int_0^T (\alpha - \beta \cdot y(t))\,dt\\&=\alpha T-\beta\int_0^Ty(t)\,dt =10\,T-2\int_0^Ty(t)\,dt

\end{align*}

and the mean population size of predators is

$$

MV(y) = \dfrac{1}{T}\int_0^T y(t)\,dt = \dfrac{\alpha}{\beta}=5=y_0

$$

and analogously for the mean population size of prey

$$

MV(x) = \dfrac{1}{T}\int_0^T x(t)\,dt = \dfrac{\gamma }{\delta }=7=x_0

$$

The mean population sizes of predator and prey depend only on the critical point. In particular, they are independent of the initial values of the system, i.e. equal for any specific solution aka flow.

- The periodic population sizes are irregular in the sense that we only get pure sines and cosines if the flows are ellipses or circles.

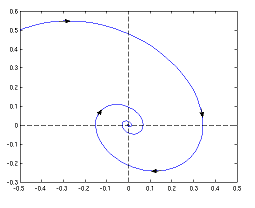

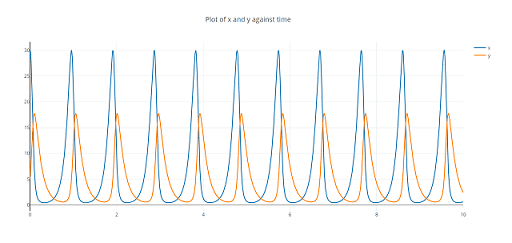

We have thus learned a lot about the situation without actually solving it. Solving it can be done numerically by linearization, i.e. by working with small differences instead of differentials. The result [29] in our case was computed by the Runge-Kutta-Fehlberg method.
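The idea of replacing differentials by small differences can be sketched in a few lines. Note the assumptions: the block below uses the classical fixed-step Runge-Kutta method of order four (RK4), not the adaptive Runge-Kutta-Fehlberg method behind the referenced result, and the start values ##x(0)=10,\,y(0)=5## as well as the step size are chosen only for illustration. The function ##F(x,y)## from above serves as a check, since it must stay constant along an exact solution.

```python
import math

# Coefficients of the example system: x' = x(10 - 2y), y' = -y(7 - x)
ALPHA, BETA, GAMMA, DELTA = 10.0, 2.0, 7.0, 1.0


def rhs(x, y):
    """Right-hand side of the Lotka-Volterra system."""
    return x * (ALPHA - BETA * y), -y * (GAMMA - DELTA * x)


def rk4_step(x, y, h):
    """One classical Runge-Kutta step of order four with step size h."""
    k1x, k1y = rhs(x, y)
    k2x, k2y = rhs(x + 0.5 * h * k1x, y + 0.5 * h * k1y)
    k3x, k3y = rhs(x + 0.5 * h * k2x, y + 0.5 * h * k2y)
    k4x, k4y = rhs(x + h * k3x, y + h * k3y)
    return (x + h / 6.0 * (k1x + 2 * k2x + 2 * k3x + k4x),
            y + h / 6.0 * (k1y + 2 * k2y + 2 * k3y + k4y))


def first_integral(x, y):
    """F(x, y) = e^x / x^7 * e^(2y) / y^10, constant along exact solutions."""
    return math.exp(x + 2.0 * y) / (x ** 7 * y ** 10)


def integrate(x0, y0, t_end, h=1e-4):
    """Return the trajectory as a list of (t, x, y) tuples."""
    steps = int(round(t_end / h))
    x, y = x0, y0
    trajectory = [(0.0, x, y)]
    for k in range(1, steps + 1):
        x, y = rk4_step(x, y, h)
        trajectory.append((k * h, x, y))
    return trajectory


if __name__ == "__main__":
    traj = integrate(10.0, 5.0, t_end=2.0)
    f0 = first_integral(10.0, 5.0)
    drift = max(abs(first_integral(x, y) - f0) for _, x, y in traj) / f0
    print(f"relative drift of F along the numerical flow: {drift:.2e}")
```

Plotting the pairs ##(x,y)## of such a trajectory gives one closed curve of the phase plot, and plotting ##x## and ##y## against ##t## gives the periodic population curves.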

Attractors, Repellers, and Strange Attractors

We have seen that vector fields, like ##(F_x,F_y)## above, are a good tool for analyzing differential equation systems. We can draw them relatively easily as linear, i.e. first-order approximations, and they tell us the course of flows, i.e. solutions. Two phenomena are especially of interest: attractors and repellers. An attractor is a region that a flow cannot escape from once it is inside, ##F(t,A)\subseteq A.## If an attractor is a point [31], then flows converge to such a point.

A repeller is a negative attractor, a region or point that flows run away from. Switching the directions of the vectors that form an attractor generates a repeller. A magnetic field with its field lines is a good mental picture of what attractors and repellers are. Of course, complex vector fields can have both of them and more than one, too. A charged particle is attracted by one side of a dipole and repelled by the other.

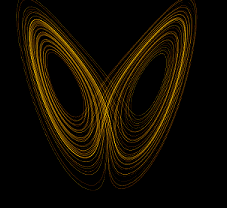

Edward Norton Lorenz, a US-American meteorologist and mathematician, investigated an idealized hydrodynamic system to predict states of the atmosphere and found in 1963 a strange-looking attractor [32] of

\begin{align*}

\dot X&=a(Y-X)\\

\dot Y&=X(b-Z)-Y\\

\dot Z&=XY-cZ\,.

\end{align*}

We therefore speak of strange attractors, and the one above is the Lorenz attractor. Other famous strange attractors are the (Otto) Rössler attractor (1976) [33]

\begin{align*}

\dot X&=-Y-Z\\

\dot Y&=X+aY\\

\dot Z&=b+XZ-cZ\,.

\end{align*}

or the (Michel) Hénon attractor for the discrete system

$$

x_{k+1}=y_{k}+1-ax_{k}^{2}\; , \;y_{k+1}=bx_{k}\,.

$$
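The discrete Hénon system is the easiest of the three to experiment with because it needs no integration at all. The following sketch simply iterates the map; the parameter values ##a=1.4,\,b=0.3## are the classical choice, while the start point, the number of iterations, and the function name are assumptions made for illustration.

```python
def henon_orbit(a=1.4, b=0.3, x0=0.0, y0=0.0, n=10000, discard=100):
    """Iterate x_{k+1} = y_k + 1 - a*x_k^2, y_{k+1} = b*x_k.

    The first `discard` points are dropped so that the returned points
    lie close to the attractor rather than on the transient towards it.
    """
    x, y = x0, y0
    points = []
    for k in range(n + discard):
        x, y = y + 1.0 - a * x * x, b * x
        if k >= discard:
            points.append((x, y))
    return points


if __name__ == "__main__":
    orbit = henon_orbit()
    xs = [p[0] for p in orbit]
    ys = [p[1] for p in orbit]
    print(f"x range: [{min(xs):.3f}, {max(xs):.3f}]")
    print(f"y range: [{min(ys):.3f}, {max(ys):.3f}]")
```

A scatter plot of the returned points shows the folded, banana-shaped set that gives the attractor its name as a strange one.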

Theorem of Picard-Lindelöf

We consider a function (flow) on a compact (time) interval ##I=[a,b]\subseteq \mathbb{R}##

$$

u\, : \,[a,b] \longrightarrow \mathbb{R}^n

$$

and the initial value problem (IVP)

\begin{align*}

\dot{u}(t)=f(t,u(t))\; , \;u(a)=y_0 \qquad ({}^*)

\end{align*}

for a function ##f\, : \,[a,b]\times \mathbb{R}^n\longrightarrow \mathbb{R}^n## and ask for solutions. The global version of the theorem of Picard-Lindelöf (1890) says that if ##f## is continuous and globally Lipschitz-continuous in the second variable, i.e. ##|f(t,u)-f(t,v)|\leq L\cdot |u-v|## for some constant ##L## and all ##t\in [a,b]\, , \,u,v\in \mathbb{R}^n,## then there exists a unique differentiable solution ##\overline{u}## of the IVP ##({}^*)## for every ##y_0\in \mathbb{R}^n,## and the sequence

$$

v_{k+1}(t):=y_0 +\int_a^t f(s,v_k(s))\,ds\qquad\qquad t\in [a,b]\, , \,k\in \mathbb{N}_0

$$

converges uniformly to ##\overline{u}## for any continuous start function ##v_0,## e.g. ##v_0\equiv y_0.##
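The iteration is easy to watch at work in the simplest possible case. The sketch below assumes the IVP ##\dot{u}=u\, , \,u(0)=1## on ##[0,1]## (so ##f(t,u)=u## with Lipschitz constant ##1##) and starts with ##v_0\equiv 1##; the polynomial representation as a coefficient list and the function names are choices made only for this illustration. Each Picard step adds one more term of the exponential series.

```python
from fractions import Fraction
from math import e, factorial


def picard_step(coeffs, y0):
    """One Picard step for u' = u: v_{k+1}(t) = y0 + integral_0^t v_k(s) ds.

    A polynomial is stored as its coefficient list [c0, c1, c2, ...].
    Integrating c_n * s^n from 0 to t gives c_n / (n + 1) * t^(n + 1).
    """
    integrated = [c / (n + 1) for n, c in enumerate(coeffs)]
    return [Fraction(y0)] + integrated


def picard_iterates(y0=1, k_max=5):
    """Return the list [v_0, v_1, ..., v_{k_max}] of Picard iterates."""
    v = [Fraction(y0)]  # v_0(t) = y0, the constant start function
    iterates = [v]
    for _ in range(k_max):
        v = picard_step(v, y0)
        iterates.append(v)
    return iterates


def evaluate(coeffs, t):
    """Evaluate the polynomial with the given coefficients at t."""
    return sum(c * t ** n for n, c in enumerate(coeffs))


if __name__ == "__main__":
    for k, v in enumerate(picard_iterates(y0=1, k_max=5)):
        value = float(evaluate(v, 1))
        print(f"v_{k}(1) = {value:.6f}, distance to e: {abs(e - value):.2e}")
```

Exact fractions are used so that the coefficients can be compared with ##1/n!## without rounding; the uniform convergence on ##[0,1]## then shows up as the shrinking distance between ##v_k(1)## and ##e.##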

The idea of the proof is to consider the fixed point equation

$$

u(t)=T.u(t)=y_0+\int_a^t f(s,u(s))\,ds

$$

and prove with the help of the Lipschitz continuity that for the integral operator ##T## holds

$$

\|T.u-T.v\|_\infty \leq L\cdot (b-a)\cdot \|u-v\|_\infty \,,

$$

change to a weighted norm ##\|u\|:=\sup_{t\in [a,b]}|u(t)|\,e^{-\alpha |t-a|}## with ##\alpha >0,## which is equivalent to the supremum norm

$$

\|u\|\leq \|u\|_\infty \leq \|u\|e^{\alpha (b-a)}\,,

$$

then prove that we get pointwise

$$

\left|T.u(t)-T.v(t)\right|\leq \dfrac{L}{\alpha} \cdot \|u-v\|\cdot e^{\alpha |t-a|}\,,

$$

which means for a suitable choice of ##\alpha,## namely ##\alpha >L,## that

$$

\|T.u-T.v\|\leq \dfrac{L}{\alpha} \cdot \|u-v\| <\|u-v\|\,.

$$

This means that ##T## is a contraction and the result follows by the Banach fixed-point theorem.##\qquad\;\;\;\;\Box##

It is obvious that the global Lipschitz condition in the second variable, i.e. in the flow or solution curve, is essential for the theorem. Although the theorem is still applicable to large classes of problems, e.g. the linear case for continuous functions

\begin{align*}

\dot{u}&=A(t)\cdot u + r(t)\\[6pt]

|f(t,u)-f(t,v)|&\leq\displaystyle{\sup_{t\in[a,b]}}|A(t)| \cdot|u-v|\,,

\end{align*}

it is at the same time its greatest weakness. We can prove a local version of the theorem or similar local statements like the theorem of Peano with different conditions, but we cannot eliminate the basic problem of keeping the possible flows under control without assuming appropriate conditions beforehand. This is in my opinion inherent in the system and one of the reasons why Navier-Stokes has made it onto the list of Millennium Prize Problems.

We still do not have a satisfactory answer as to whether there are undoubtedly hard problems in this world or whether we simply failed to find smart solutions.

Sources

[1] A.E. Noether, Nachrichten der Königlichen Gesellschaft der Wissenschaften zu Göttingen, 1918, Invarianten beliebiger Differentialausdrücke, pages 37-44.

[2] A.E. Noether, Nachrichten der Königlichen Gesellschaft der Wissenschaften zu Göttingen, 1918, Invariante Variationsprobleme, pages 235-257.

[3] A. Einstein, Sitzung der physikalisch-mathematischen Klasse der preussischen Akademie der Wissenschaften vom 25. November 1915, Berlin, Die Feldgleichungen der Gravitation, pages 844-847.

[4] Millennium Prize Problems.

https://en.wikipedia.org/wiki/Millennium_Prize_Problem

[5] A. Fuhrmann, Über die Differentialgleichung chemischer Vorgänge dritter Ordnung, Zeitschrift für Physikalische Chemie 1889, Dresden.

https://www.degruyter.com/document/doi/10.1515/zpch-1889-frontmatter4/html

[6] Ch. Schilli, Reduktion biochemischer Differentialgleichungen, Aachen, 2010.

http://www.math.rwth-aachen.de/~Christian.Schilli/BAred.pdf

[7] J. Bruderhausen, Zahlungsbilanzkrisen bei begrenzter Devisenmarkteffizienz, Peter Lang Verlag, Hamburg, 2003.

https://library.oapen.org/bitstream/handle/20.500.12657/42205/1/9783631750124.pdf

[8] O. Posch, Hamburg, 2022, Transition probabilities and the limiting distribution of income.

[9] J. Lemm, lecture notes of Econophysics, Münster, WS 1999/2000.

https://www.uni-muenster.de/Physik.TP/~lemm/econoWS99/options2/node12.html

[10] F. Hahn, Stochastik und Nichtlinearität in der Modellökonomie – demonstriert am Kaldorschen Konjunkturmodell, Austrian Institute of Economic Research, Wien, 1985.

https://www.wifo.ac.at/bibliothek/archiv/Wifo_WP/WP_11.pdf

[11] P. Blanc, J.D. Rossi, Game Theory and Partial Differential Equations, de Gruyter, Buenos Aires, 2019.

https://www.degruyter.com/document/doi/10.1515/9783110621792/html?lang=de

[12] M. Pokojovy, Kontrolltheorie für zeitabhängige partielle Differentialgleichungen, lecture notes, Konstanz, SS 2014.

[13] M. Voigt, Mathematical Systems and Control Theory, lecture notes, Hamburg, WS 2019/2020.

https://www.math.uni-hamburg.de/personen/voigt/Regelungstheorie_WiSe19/Notes_ControlTheory.pdf

[14] D.B. Müller, Modellierung, Simulation und Bewertung des regionalen Holzhaushaltes, Dissertation ETH Zürich, 1998.

https://www.research-collection.ethz.ch/mapping/eserv/eth:22924/eth-22924-02.pdf

[15] H.P. Bader, R. Scheidegger, Regionaler Stoffhaushalt – Erfassung, Bewertung und Steuerung, P. Baccini, H.P. Bader., Springer, Heidelberg, 1996.

[16] T. Stocker, Einführung in die Klimamodellierung, lecture notes, Bern, WS 2002/2003.

https://paleodyn.uni-bremen.de/gl/geo_html/lehre/ThomasStockerskript0203.pdf

[17] S. Manabe, R.T. Wetherald, Thermal Equilibrium of the Atmosphere with a Given Distribution of Relative Humidity, Journal of Atmospheric Sciences, 1967, pages 241-259.

https://journals.ametsoc.org/view/journals/atsc/24/3/1520-0469_1967_024_0241_teotaw_2_0_co_2.xml

[18] Modeling Daisyworld.

https://personal.ems.psu.edu/~dmb53/DaveSTELLA/Daisyworld/daisyworld_model.htm

[19] A.J. Watson, J.E. Lovelock, Biological homeostasis of the global environment: the parable of Daisyworld, Tellus, vol. 35/B (1983), pages 284–289.

https://courses.seas.harvard.edu/climate/eli/Courses/EPS281r/Sources/Gaia/Watson-Lovelock-1983.pdf

[20] Image source for Moore’s Law.

https://en.wikipedia.org/wiki/Moore%27s_law

[21] The Amazing Relationship Between Integration And Euler’s Number.

[22] I. Cooper, A. Mondal, C.G. Antonopoulos, A SIR model assumption for the spread of COVID-19 in different communities. Chaos Solitons Fractals. 2020 Oct;139:110057. doi: 10.1016/j.chaos.2020.110057. Epub 2020 Jun 28. PMID: 32834610; PMCID: PMC7321055.

https://www.ncbi.nlm.nih.gov/pmc/articles/PMC7321055/

[23] K.M. Haradhan, Mathematical Analysis of SIR Model for COVID-19 Transmission, Journal of Innovations in Medical Research, Chittagong, 09/2022, vol.1 no.2.

https://mpra.ub.uni-muenchen.de/114390/1/MPRA_paper_114390.pdf

[24] Kermack, McKendrick, A contribution to the mathematical theory of epidemics. Proc. Roy. Soc. A, vol. 115, 1927, pages 700–721.

[25] Image Source for Covid-19.

https://jkms.org/DOIx.php?id=10.3346/jkms.2020.35.e227

[26] A.J. Lotka, Elements of Physical Biology, Williams and Wilkins Company, Baltimore, 1925.

https://archive.org/details/elementsofphysic017171mbp/page/n1/mode/2up

[27] V. Volterra, Variazioni e fluttuazioni del numero d’individui in specie animali conviventi. In: Mem. R. Accad. Naz. dei Lincei. Ser. VI, vol. 2, 31-113.

[28] Image Source for Lotka-Volterra phase space Plot.

http://www.mi.uni-koeln.de/Vorlesung_Sweers/GDGL1819/Skript/Week09.pdf

[29] Image Source for Lotka-Volterra Linearization Plot.

https://fusion809.github.io/LotkaVolterra/

[30] J. Voelzke, Zeitstetige Gleichgewichtsmodelle zum Insiderhandel, Master thesis, Münster, 2013.

https://www.uni-muenster.de/Stochastik/paulsen/Abschlussarbeiten/Masterarbeiten/Voelzke.pdf

[31] Image source for a simple point Attractor.

http://peter-junglas.de/fh/vorlesungen/physik2/html/kap6-3-2.html

[32] Image source for the Lorenz attractor.

https://de.wikipedia.org/wiki/Lorenz-Attraktor

[33] Image source for the Rössler attractor.

https://de.wikipedia.org/wiki/Seltsamer_Attraktor

[34] S. Subramanya Sastry, The Newton-Leibniz controversy over the invention of the calculus

https://pages.cs.wisc.edu/~sastry/hs323/calculus.pdf
